The AI voice recognition market is shifting. In May 2025, NVIDIA released parakeet-tdt-0.6b-v2, a next-generation open voice recognition model that challenges Whisper. Optimized for GPUs, it shows clear commercial potential in real-time voice processing, automatic transcription, AI voice recognition APIs, and advertisement revenue-based content creation.

FastConformer + TDT Based High-Speed Voice Recognition Engine

parakeet-tdt-0.6b-v2 is an English-only model with about 600 million parameters. It is built from the following two components:

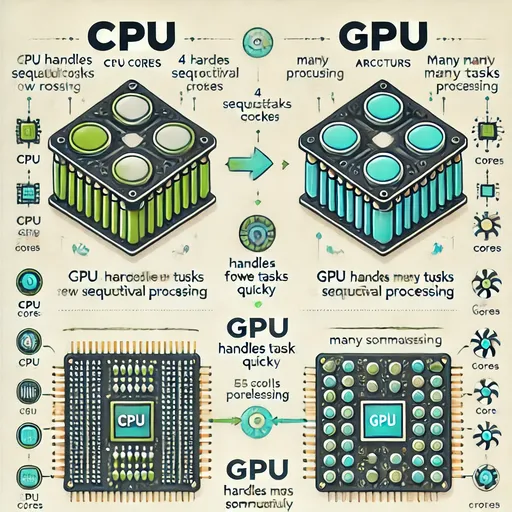

- FastConformer Encoder: Designed to effectively analyze the characteristics of speech and to operate very quickly on NVIDIA graphics cards (GPUs). This allows for faster and more accurate processing of complex voices.

- TDT Decoder (Token-and-Duration Transducer): A decoder that predicts each text token together with how many audio frames it spans. It excels at converting sound into text and can handle long audio files stably and without interruptions.

Thanks to these two components, the model is tuned to convert voice to text quickly and accurately in real time, including on long recordings.
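Part of an encoder's speed comes from shortening the sequence before the expensive attention layers run. The sketch below rests on two assumptions that this article does not state: a 10 ms hop between input feature frames and the 8x subsampling usually described for FastConformer. Treat both constants as placeholders and check the model's own configuration for the real values.

```python
import math

FEATURE_HOP_MS = 10        # assumed hop between input feature frames
SUBSAMPLING_FACTOR = 8     # assumed FastConformer downsampling factor


def encoder_frames(audio_seconds: float) -> int:
    """Approximate number of frames the attention layers have to process."""
    feature_frames = math.ceil(audio_seconds * 1000 / FEATURE_HOP_MS)
    return math.ceil(feature_frames / SUBSAMPLING_FACTOR)


# Under these assumptions a 220-second clip shrinks from 22,000 feature
# frames to 2,750 encoder frames.
print(encoder_frames(220))  # 2750
```

Because attention cost grows quickly with sequence length, an 8x shorter sequence is a plausible reason the encoder runs so fast on a GPU.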

Speed Orders of Magnitude Faster than Whisper, Ideal for Commercial Services

According to official benchmarks:

- RTFx (inverse real-time factor, seconds of audio processed per second of compute): 3386.02 (batch size 128)

- WER (Word Error Rate): 6.05%

In comparison, taking Whisper-large-v3's average RTFx as 2 to 5, Parakeet's figure is roughly 680 to 1,700 times higher.
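To make the two metrics concrete, here is a minimal sketch using their commonly used definitions: RTFx as audio duration divided by processing time, and WER as word-level edit distance divided by the number of reference words. The function names are illustrative, and the official benchmark may normalize text before scoring, which this sketch does not do.

```python
def rtfx(audio_seconds: float, processing_seconds: float) -> float:
    """Inverse real-time factor: seconds of audio handled per second of compute."""
    return audio_seconds / processing_seconds


def seconds_to_transcribe(audio_seconds: float, rtfx_value: float) -> float:
    """Processing time implied by a given RTFx."""
    return audio_seconds / rtfx_value


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


PARAKEET_RTFX = 3386.02  # figure quoted above
WHISPER_RTFX = (2, 5)    # range quoted above

# One hour of audio at the quoted Parakeet RTFx takes about a second.
print(seconds_to_transcribe(3600, PARAKEET_RTFX))
# Ratio of the quoted figures, low and high end.
print(PARAKEET_RTFX / WHISPER_RTFX[1], PARAKEET_RTFX / WHISPER_RTFX[0])
# One substituted word out of four.
print(wer("how are you doing", "how are ya doing"))  # 0.25
```

Note that the quoted RTFx is a batched throughput number, so a single short file on a single GPU will usually show a much lower value.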

Results from my own test were also impressive. Instead of a clean, speech-only audio file, I used a 3 minute and 40 second song with background sound. The complete transcription took just 13 seconds. Notable points in the result:

- Despite background noise, timestamps were accurately mapped

- Automatic punctuation and capitalization were nearly perfect

- The word ‘you’ was transcribed as ‘ya’, reflecting the speaker’s actual pronunciation and expression

However, when testing with Japanese or Korean audio, no output was returned. It was clear that it was an English-only ASR model.
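For anyone who wants to repeat this kind of measurement, a small timing harness is enough. The sketch below is a generic helper, not part of any library: `transcribe` is whatever callable runs your model, and the injectable `clock` exists only so the example can replay the numbers from the test above without running a model.

```python
import time
from typing import Callable


def measure_rtfx(transcribe: Callable[[], object],
                 audio_seconds: float,
                 clock: Callable[[], float] = time.perf_counter) -> float:
    """Run `transcribe` once and return audio duration / wall-clock time."""
    start = clock()
    transcribe()
    elapsed = clock() - start
    return audio_seconds / elapsed


# Replaying the test above with a stand-in clock: a 220-second song
# that took 13 seconds to transcribe.
fake_clock = iter([0.0, 13.0])
value = measure_rtfx(lambda: None, 220, clock=lambda: next(fake_clock))
print(round(value, 1))  # 16.9
```

A single-file run like this works out to about 17x real time. That is far below the batched benchmark figure, which is expected: one song cannot fill a batch of 128, and model loading or audio decoding may be included in the 13 seconds.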

Comparison of AI Voice Recognition Engines: NVIDIA Parakeet vs OpenAI Whisper

| Item | NVIDIA Parakeet | OpenAI Whisper |
| --- | --- | --- |
| Language Support | English only | Multilingual (over 98 languages) |
| Model Architecture | FastConformer + TDT | Convolutional stem + encoder-decoder Transformer |
| Speed (RTFx) | Over 3000 | 2 to 5 |
| License | Open (CC-BY-4.0, commercial use allowed) | Open source (MIT, commercial use allowed) |
| Audio Robustness | Strong on audio that includes music | Relatively weak |
| Multimodal Integration | None | Possible integration with GPT |

While Whisper has the upper hand in multilingual processing, Parakeet is far ahead in processing speed and strong in English accuracy.

Considerations for Commercial Use

- No support for languages other than English (no output in Korean/Japanese tests)

- May be more sensitive in high noise environments compared to Whisper

- Does not support multimodal analysis (e.g., semantic interpretation)

However, there are good reasons for optimism:

- Potential for multilingual fine-tuning using datasets like Common Voice

- Highly practical for automatic transcription of meeting notes, court records, interviews, etc.

- Easy to introduce as a substitute backend for Whisper-based services

- Suitable as an engine for providing AI voice recognition API services
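Given the English-only limitation, one way to adopt Parakeet in an existing Whisper-based service is to route by language rather than replace Whisper outright. The sketch below is a hypothetical design, not an existing API: the backends are stand-in functions, and the language code is assumed to be supplied by the caller (for example from user settings or a separate language-identification step).

```python
from typing import Callable, Dict

# A backend takes an audio path and returns the transcript text.
Transcriber = Callable[[str], str]


def make_router(backends: Dict[str, Transcriber],
                default: Transcriber) -> Callable[[str, str], str]:
    """Build a function that picks a backend by language code."""
    def route(audio_path: str, language: str) -> str:
        backend = backends.get(language, default)
        return backend(audio_path)
    return route


# Stand-in backends; a real service would call the actual models here.
def parakeet_stub(path: str) -> str:
    return f"[parakeet] {path}"


def whisper_stub(path: str) -> str:
    return f"[whisper] {path}"


route = make_router({"en": parakeet_stub}, default=whisper_stub)
print(route("meeting.wav", "en"))  # [parakeet] meeting.wav
print(route("kaigi.wav", "ja"))    # [whisper] kaigi.wav
```

Keeping both engines behind one function signature means English traffic gets the faster model while other languages keep working as before.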

Technical Innovation: Real-Time Processing Structure Based on TDT

The core of Parakeet's speed lies in its TDT (Token-and-Duration Transducer) decoder. Speech data is long and complex, and each word is uttered at a different time and for a different duration. Accurately transcribing this in the order that a person speaks isn’t as easy as it sounds.

Like CTC (Connectionist Temporal Classification), a transducer automatically aligns audio frames with the output text, with no hand-labeled timings. TDT goes one step further: along with each token, it predicts how many frames that token lasts, so the decoder can skip those frames instead of examining every one. Taking fewer decoding steps is a large part of why it is so fast.

Thanks to this, Parakeet can turn incoming voice into text with little delay while keeping throughput steady as recordings get longer. One could think of it as a real-time transcriber that is much faster than traditional methods.

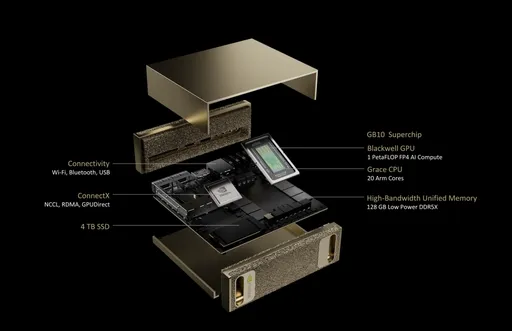
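The TDT decoder listed in the architecture section is generally described as predicting a duration alongside each token, which lets decoding jump over frames. The toy loop below illustrates only that skipping idea. The `predictions` table is a hand-written stand-in for the model's joint network, whose real outputs also depend on the tokens emitted so far, and none of these names come from NeMo.

```python
from typing import Dict, List, Tuple

BLANK = ""  # stand-in for the blank ("no token") symbol


def greedy_tdt_decode(predictions: Dict[int, Tuple[str, int]],
                      num_frames: int) -> Tuple[List[str], int]:
    """Walk the frames, emitting tokens and jumping ahead by each predicted duration.

    Returns the emitted tokens and the number of decoding steps taken.
    """
    tokens: List[str] = []
    steps = 0
    t = 0
    while t < num_frames:
        # Frames missing from the table behave like a blank lasting one frame.
        token, duration = predictions.get(t, (BLANK, 1))
        if token != BLANK:
            tokens.append(token)
        # Simplification: always advance at least one frame so the loop ends.
        t += max(duration, 1)
        steps += 1
    return tokens, steps


# Hypothetical predictions for a 12-frame clip: frame -> (token, frames to skip).
toy = {0: ("he", 3), 3: ("llo", 4), 7: (BLANK, 2), 9: ("world", 3)}
tokens, steps = greedy_tdt_decode(toy, num_frames=12)
print(tokens, steps)  # ['he', 'llo', 'world'] 4
```

The clip has 12 frames, but the loop finishes in 4 steps. A decoder that inspects every frame would need at least 12.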

NeMo + Hugging Face: Unified AI Ecosystem Strategy

The Parakeet model strengthens NVIDIA's AI ecosystem strategy through the following integrated structure:

- Complete integration with the NeMo toolkit

- Model available for immediate use on Hugging Face

- Hardware demand driven by GPU-optimized models

This is not just a model release but a strategic move: it positions Parakeet as the “fastest working open-source AI model on NVIDIA hardware”.
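In practice, the integration looks roughly like the sketch below, which follows the usage pattern published with the model. It assumes NeMo is installed with its ASR collection and that `transcribe(..., timestamps=True)` returns objects whose `timestamp["segment"]` entries carry `start`, `end`, and `segment` keys. Verify this against your installed NeMo version. `format_segments` is a helper written for this example.

```python
from typing import Dict, List

MODEL_NAME = "nvidia/parakeet-tdt-0.6b-v2"


def format_segments(segments: List[Dict]) -> List[str]:
    """Render segment-level timestamps as 'start - end: text' lines."""
    return [f"{s['start']:.2f}s - {s['end']:.2f}s: {s['segment']}" for s in segments]


def transcribe_with_timestamps(audio_path: str) -> List[str]:
    """Download the model from Hugging Face via NeMo and transcribe one file."""
    # Imported lazily so the helper above works without NeMo installed.
    import nemo.collections.asr as nemo_asr

    model = nemo_asr.models.ASRModel.from_pretrained(model_name=MODEL_NAME)
    output = model.transcribe([audio_path], timestamps=True)
    return format_segments(output[0].timestamp["segment"])


# The formatting step on a hand-written segment (no model needed):
print(format_segments([{"start": 0.0, "end": 1.52, "segment": "Hello there."}]))
# ['0.00s - 1.52s: Hello there.']
```

Calling `transcribe_with_timestamps("your_audio.wav")` downloads the weights on first use, and a GPU is strongly recommended.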

Conclusion: A New Weapon for AI Transcription Service Developers

Whisper remains powerful. However, it is no longer the only solution.

NVIDIA Parakeet:

- Is an open-source model that can be used commercially

- Is an AI voice recognition engine optimized for real-time transcription

- Can easily integrate into various practical applications like automatic note-taking, audio content transcription, and advertisement revenue-based content creation

- Is ideal for operating AI voice recognition content related to high-paying Google AdSense keywords.

For developers and service planners considering real-time voice processing, AI audio transcription services, and GPU-optimized voice recognition systems, NVIDIA Parakeet will be a strategic alternative that combines speed, quality, and commercial viability.
