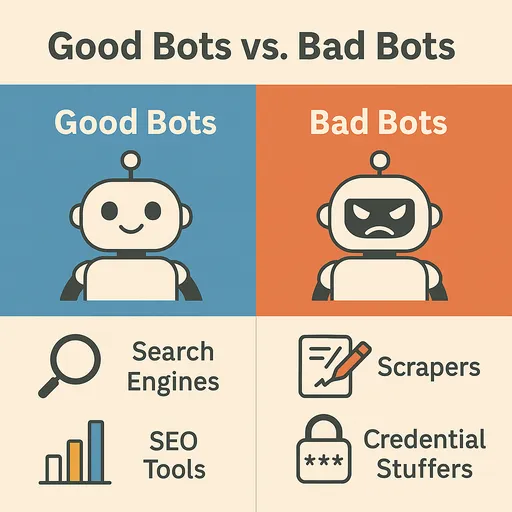

The internet is a vast and ever-evolving sea of information. At the forefront of exploring and collecting this immense data are web crawlers, commonly referred to as bots, which are automated programs. These bots traverse the web, indexing information, collecting data, and providing a variety of services, making them important components of the web ecosystem. However, not all bots are beneficial. There are harmful bots that can cause damage to websites or be misused.

In this article, we will explore the types and characteristics of well-known bots commonly encountered on the web, how to distinguish between beneficial and harmful bots, and effective methods specifically designed to protect your website from harmful bots.

Famous Bots Commonly Seen on the Web (Beneficial Bots)

A large share of web traffic is generated by bots rather than humans. Among them, the most important and beneficial bots include:

1. Search Engine Bots

These are the most common and important bots. They explore web pages to collect content and add it to search engine indexes, helping users find information.

- Googlebot (Google): This is Google's primary web crawler. It explores nearly all pages on the web to update search results and provides data to Google services (like Maps and News). It is identified in the `User-Agent` as `Googlebot` or `Mediapartners-Google`.

- Bingbot (Microsoft Bing): This is Microsoft's crawler for the Bing search engine, identified in the `User-Agent` as `Bingbot`.

- Baidu Spider (Baidu): This is the crawler for Baidu, China's leading search engine. If you are targeting the Chinese market, visits from Baidu Spider are significant. It appears in the `User-Agent` as `Baiduspider`.

- Yandex Bot (Yandex): This is the crawler from Yandex, a major search engine in Russia. It appears in the `User-Agent` as `YandexBot`.

- Yeti (Naver): This is the primary crawler for Naver, South Korea's search engine. It specializes in collecting search results tailored to the Korean market and appears in the `User-Agent` as `Yeti`.

2. Social Media Bots

These are used to generate previews (titles, descriptions, images) for links shared on social media.

- Facebook External Hit (Facebook): This fetches page information when links are shared on Facebook. It is identified by the `User-Agent` `facebookexternalhit`.

- Twitterbot (Twitter/X): This generates link previews on Twitter (X). It appears in the `User-Agent` as `Twitterbot`.

- Slackbot (Slack): This creates previews when links are shared in Slack. It appears in the `User-Agent` as `Slackbot`.

3. Monitoring/Analytics Bots

These are used to monitor the status, performance, and security vulnerabilities of websites, or to analyze traffic.

- UptimeRobot, Pingdom: These monitor the uptime of websites and send notifications when they go down.

- Site Crawlers (Screaming Frog, Ahrefsbot, SemrushBot, etc.): SEO tools analyze websites to find SEO improvements or collect competitive analysis data. The `User-Agent` contains the respective tool's name.

- Ahrefsbot (Ahrefs): This is the crawler behind Ahrefs, one of the SEO analysis tools mentioned above, and it is one of the most active commercial crawlers on the web. It collects vast amounts of SEO data, such as backlinks, keyword rankings, and organic traffic, for Ahrefs service users. Because it crawls the internet extensively to build its backlink database, it is one of the most noticeable bots after `Googlebot` in web server logs. It appears in the `User-Agent` as `AhrefsBot`.
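The `User-Agent` tokens listed above can be turned into a small lookup for tagging log entries. The following is a minimal sketch, not a complete bot database: the function name `classify_user_agent` and the category labels are assumptions made for illustration, and the token list only covers bots named in this article.

```python
from typing import Optional, Tuple

# Lowercase User-Agent substrings for the bots named in this article.
# The category labels are illustrative, not an official taxonomy.
KNOWN_BOT_TOKENS = {
    "googlebot": ("Googlebot", "search engine"),
    "mediapartners-google": ("Mediapartners-Google", "search engine"),
    "bingbot": ("Bingbot", "search engine"),
    "baiduspider": ("Baiduspider", "search engine"),
    "yandexbot": ("YandexBot", "search engine"),
    "yeti": ("Yeti", "search engine"),
    "facebookexternalhit": ("facebookexternalhit", "social media"),
    "twitterbot": ("Twitterbot", "social media"),
    "slackbot": ("Slackbot", "social media"),
    "ahrefsbot": ("AhrefsBot", "SEO/analytics"),
    "semrushbot": ("SemrushBot", "SEO/analytics"),
}


def classify_user_agent(user_agent: str) -> Optional[Tuple[str, str]]:
    """Return (bot name, category) for the bot a User-Agent claims to be.

    Returns None when no known token is present. A match only reflects
    what the client claims, because the header can be spoofed.
    """
    lowered = user_agent.lower()
    for token, info in KNOWN_BOT_TOKENS.items():
        if token in lowered:
            return info
    return None
```

Since the header is self-reported, a match here is best treated as a label for log analysis rather than proof of identity.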

Distinguishing Harmful Bots from Beneficial Bots

The impact of bot traffic on a website varies greatly depending on the bot's purpose and behavior. Harmful bots can deplete website resources, steal data, or exploit security vulnerabilities.

Characteristics of Beneficial Bots

- Compliance with `robots.txt`: Most beneficial bots respect the website's `robots.txt` file and follow the rules specified in it (allowed and disallowed crawl areas, crawling speed, etc.).

- Normal Request Patterns: They do not overload the server because they apply appropriate delays between requests.

- Formal `User-Agent`: They use clear and well-known `User-Agent` strings, often including information about the bot's owner (e.g., `Googlebot/2.1 (+http://www.google.com/bot.html)`).

- Legitimate IP Addresses: They make requests from IP address ranges owned by the bot's owner (e.g., Googlebot uses IP ranges owned by Google).
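One common way to check the "legitimate IP address" property is a forward-confirmed reverse DNS lookup: resolve the IP to a hostname, check the hostname's domain, then resolve the hostname back and confirm it returns the same IP. The sketch below assumes the hostname suffixes `.googlebot.com` and `.google.com`, which should be checked against Google's current verification guidance. The function name and the injectable lookup parameters are illustrative, and `socket.gethostbyname_ex` only covers IPv4.

```python
import socket
from typing import Callable, List

# Assumed hostname suffixes for Google crawlers; verify against Google's
# current documentation before relying on them.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def _reverse(ip: str) -> str:
    # PTR lookup: IP address -> primary hostname.
    return socket.gethostbyaddr(ip)[0]


def _forward(host: str) -> List[str]:
    # A-record lookup: hostname -> list of IPv4 addresses.
    return socket.gethostbyname_ex(host)[2]


def is_verified_googlebot(
    ip: str,
    reverse_lookup: Callable[[str], str] = _reverse,
    forward_lookup: Callable[[str], List[str]] = _forward,
) -> bool:
    """Forward-confirmed reverse DNS check for an IP claiming to be Googlebot."""
    try:
        host = reverse_lookup(ip).rstrip(".").lower()
    except OSError:  # covers socket.herror and socket.gaierror
        return False
    if not host.endswith(GOOGLE_SUFFIXES):
        return False
    try:
        # The hostname must resolve back to the original IP.
        return ip in forward_lookup(host)
    except OSError:
        return False
```

DNS lookups are slow, so in practice the result would usually be cached per IP rather than computed on every request.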

Characteristics of Harmful Bots

- Ignoring `robots.txt`: They ignore the `robots.txt` file and attempt unauthorized access to disallowed areas.

- Abnormal Request Patterns: They overload the server by sending repetitive requests to specific pages in a short timeframe (as in DDoS attempts) or by crawling at abnormally high speeds.

- Falsified `User-Agent`: They disguise themselves as beneficial bots (e.g., by using Googlebot's `User-Agent`) or use randomly generated `User-Agent` strings.

- Unknown IP Addresses: They hide their IP addresses or frequently change them by using proxy servers, VPNs, or zombie PCs.

- Malicious Activities:

  - Content Scraping: They illegally copy a website's content to post or resell on another website.

  - Inventory Sniping: They rapidly check inventory on e-commerce sites in order to hoard stock.

  - Credential Stuffing: They attempt to log in using account credentials stolen from other websites.

  - Spam Registration: They automatically post spam content in forums or comment sections.

  - DDoS Attacks: They generate massive traffic to incapacitate websites.

  - Vulnerability Scanning: They automatically scan for known security vulnerabilities to identify attack points.
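Requests to disallowed areas are one of the easier signals to check, because Python's standard library can evaluate `robots.txt` rules directly. The following is a small sketch using `urllib.robotparser`. The `ROBOTS_TXT` contents and the helper names `build_parser` and `violates_robots` are assumptions for illustration, so substitute your site's actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a reusable rule checker."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def violates_robots(parser: RobotFileParser, user_agent: str, path: str) -> bool:
    """Return True if a request for `path` ignores the robots.txt rules."""
    return not parser.can_fetch(user_agent, path)
```

Running this over access log entries flags clients that request disallowed paths. A single hit is weak evidence, but repeated hits from the same client are a reasonable input to the blocking strategies discussed later.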

How to Effectively Block Harmful Bots

Malicious bots attempt to breach websites by ignoring rules, such as robots.txt, while masquerading as genuine users. Therefore, blocking them requires advanced strategies to detect their abnormal behavior patterns and selectively block those requests.

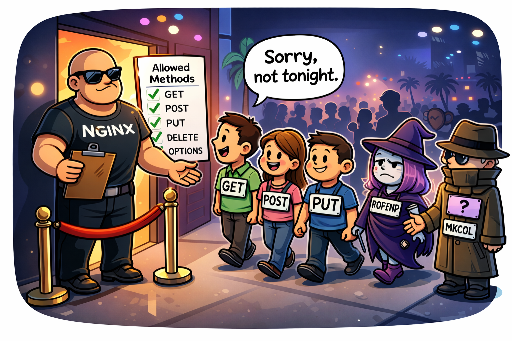

1. IP Address and User-Agent Based Blocking (Limited Initial Defense)

This approach can be temporarily effective when malicious traffic keeps arriving from specific IP addresses or IP ranges, or when it uses clearly harmful User-Agent strings.

- Web Server Setup (Apache, Nginx):

- Blocking Specific IP Addresses:

# Nginx Example

deny 192.168.1.100;

deny 10.0.0.0/8;

- Blocking Malicious `User-Agent`:

# Apache .htaccess example

RewriteEngine On

RewriteCond %{HTTP_USER_AGENT} "BadBotString|AnotherBadBot" [NC]

# Return 403 Forbidden for matching User-Agent strings (Apache does not allow a comment on the same line as a directive)

RewriteRule .* - [F,L]

Limitations: IP-based blocking is easy to bypass, because IP addresses are often dynamically assigned and attackers can route traffic through proxies, VPNs, or the Tor network. Malicious bots can keep changing their IPs while attacking, which makes IP-based blocking burdensome to manage and unsuitable as a long-term solution. Similarly, User-Agent strings can be easily spoofed, making it difficult to block sophisticated malicious bots using this method alone.

2. Rate Limiting

This limits or blocks requests when the number of requests from a specific IP address or User-Agent, or to specific URLs, surges abnormally in a short time. It is effective in mitigating DDoS attacks or excessive scraping attempts.

- Web Server Setup (Nginx Example):

# Inside Nginx http block

limit_req_zone $binary_remote_addr zone=mylimit:10m rate=5r/s;

# Inside server or location block

location / {

limit_req zone=mylimit burst=10 nodelay; # Allows 5 requests per second, bursts up to 10

# ...

}

Complexity and Risks: Rate limiting is very useful, but setting appropriate thresholds can be quite tricky. If set too strictly, it can restrict normal user traffic or beneficial search engine bot traffic, reducing service accessibility. Conversely, if set too leniently, it could allow malicious bots to achieve their objectives. Continuous monitoring and tuning, based on an understanding of service characteristics and general traffic patterns, are necessary.
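To make the threshold trade-off concrete, here is a minimal application-level sketch of a per-client token bucket. It is not how Nginx implements `limit_req` (Nginx uses a leaky bucket), but the `rate` and `burst` parameters play a similar role. The class name `TokenBucketLimiter` and the injectable `clock` are assumptions made for illustration and testing.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-key token bucket: `rate` tokens refill per second, up to `burst`."""

    def __init__(
        self,
        rate: float,
        burst: int,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.rate = rate
        self.burst = burst
        self.clock = clock
        # key -> (tokens remaining, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, key: str) -> bool:
        """Consume one token for `key`; return False if the bucket is empty."""
        now = self.clock()
        tokens, last = self._buckets.get(key, (float(self.burst), now))
        # Refill according to elapsed time, capped at the burst size.
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[key] = (tokens - 1.0, now)
            return True
        self._buckets[key] = (tokens, now)
        return False
```

With `rate=5` and `burst=10`, a client can send 10 requests at once and then about 5 per second. Raising `burst` is more forgiving for real users who load many assets at once, while lowering `rate` is stricter on sustained scraping. A production version would also need to evict idle keys so the dictionary does not grow without bound.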

3. Behavior-Based Detection and Custom Defense System Development (Most Effective Strategy)

This is the most sophisticated and effective approach. While malicious bots can disguise their IP or User-Agent, their behavior patterns within the website tend to be distinctive. Analyzing these abnormal activities enables the identification of malicious bots and the development of defensive systems specific to those patterns.

- The Importance of Traffic Monitoring and Log Analysis:

  Web server logs (access logs) are the most important data source for understanding bot behavior. Regularly reviewing and analyzing logs (at least once a day) is essential. Log analysis can reveal abnormal behaviors exhibited by malicious bots, such as the following:

  - Abnormal Navigation Path: Uncommon sequences of pages that a human wouldn't typically traverse, abnormally fast page transitions, or repeated access to specific pages.

  - Repeated Specific Task Attempts: Repeated login failures, attempts to log in with non-existent accounts, infinite form submissions, or using macros to reserve spots in scheduling systems.

  - Suspicious URL Requests: Repeated access attempts to known vulnerability paths (e.g., `/wp-admin/`, `/phpmyadmin/`, `.env` files) that do not exist on the website.

  - HTTP Header Analysis: Abnormal combinations or orders of HTTP headers, or missing headers.

- Examples of Encoding Malicious Bot Behavioral Patterns:

  It is crucial to define the malicious bot behaviors observed in logs and to implement defensive logic based on these findings.

  - `GET /cgi-bin/luci/;stok=.../shell?cmd=RCE`
    - Description: This is an attack attempt exploiting a remote code execution (RCE) vulnerability in the `OpenWrt` admin panel, mainly targeting vulnerabilities in Linux-based systems used in routers or embedded devices.

  - `POST /wp-login.php` (Repeated login attempts)
    - Description: This is a brute-force login or credential stuffing attempt against the WordPress admin panel.

  - `GET /HNAP1/`
    - Description: This is a scanning and attack attempt exploiting the HNAP1 (Home Network Administration Protocol) vulnerability found in D-Link routers.

  - `GET /boaform/admin/formLogin`
    - Description: This is an intrusion attempt against the admin login panel of the `Boa` web server, typically used in older routers or webcams.

  - `GET /.env` or `GET /.git/config`
    - Description: This attempts to access the `.env` file or the `.git` directory to extract sensitive information.

  When these patterns are detected, actions like blocking requests or temporarily blacklisting the IP address can be implemented. This can be achieved at the application level through middleware (e.g., Django middleware) or through web server settings (Nginx `map` module, Apache `mod_rewrite`, etc.).

- JavaScript Execution Check:

  Many malicious bots do not fully execute JavaScript the way a real browser does. Techniques such as hidden JavaScript checks (e.g., requiring interaction with specific DOM elements), canvas fingerprinting, or verifying browser API calls can be used to identify bots and block clients that fail to execute JavaScript.
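As a rough illustration of encoding the request patterns listed above into defensive logic, the sketch below matches request paths against a regular expression and temporarily blacklists an IP after repeated hits. It is framework-independent: the class name `BehaviorFilter`, the strike threshold, and the ban duration are all assumptions. The WordPress paths are left out on purpose, since they are only suspicious on sites that do not run WordPress.

```python
import re
import time
from typing import Callable, Dict

# Path prefixes drawn from the examples above; extend from your own logs.
SUSPICIOUS_PATHS = re.compile(
    r"^/(?:cgi-bin/luci/|HNAP1/?|boaform/admin/formLogin|\.env|\.git/|phpmyadmin/?)",
    re.IGNORECASE,
)


class BehaviorFilter:
    """Block suspicious requests and temporarily ban repeat offenders."""

    def __init__(
        self,
        max_strikes: int = 3,
        ban_seconds: float = 3600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_strikes = max_strikes
        self.ban_seconds = ban_seconds
        self.clock = clock
        self._strikes: Dict[str, int] = {}
        self._banned_until: Dict[str, float] = {}

    def is_banned(self, ip: str) -> bool:
        until = self._banned_until.get(ip)
        if until is None:
            return False
        if self.clock() >= until:
            del self._banned_until[ip]  # ban expired
            return False
        return True

    def should_block(self, ip: str, path: str) -> bool:
        """Return True if this request should be rejected."""
        if self.is_banned(ip):
            return True
        if SUSPICIOUS_PATHS.match(path):
            strikes = self._strikes.get(ip, 0) + 1
            self._strikes[ip] = strikes
            if strikes >= self.max_strikes:
                # Too many suspicious requests: ban the IP for a while.
                self._banned_until[ip] = self.clock() + self.ban_seconds
                self._strikes.pop(ip, None)
            return True
        return False
```

A middleware would call `should_block(ip, path)` for each incoming request and return a 403 when it is true. Keeping the ban temporary limits the damage when an IP address is later reassigned to a legitimate user.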

4. Utilizing Honeypots

Honeypots are intentional 'traps' designed to lure in and identify malicious bots. Elements embedded within web pages are hidden from normal users but engineered for automated bots to explore or interact with.

- Operation Principle:

  - Hidden Links/Fields: Links or form fields are made completely invisible to users, either with CSS (`display: none;`, `visibility: hidden;`, `height: 0;`, etc.) or by generating them dynamically with JavaScript and moving them off-screen.

  - Inducing Bot Behavior: Since a person using a normal web browser cannot see these elements, they will not interact with them. However, malicious bots that mechanically parse all links or forms on the web page will discover these hidden elements and try to interact with them (e.g., by entering values into hidden form fields or following hidden links).

  - Identifying Malicious Bots: If values are entered in the hidden fields or requests come through hidden links, this can be considered clear malicious bot behavior.

  - Automatic Blocking: IP addresses or sessions exhibiting such behaviors can be promptly blacklisted or blocked.

- Implementation Example:

<form action="/submit" method="post">

<label for="username">Username:</label>

<input type="text" id="username" name="username">

<div style="position: absolute; left: -9999px;">

<label for="email_hp">Leave this field empty:</label>

<input type="text" id="email_hp" name="email_hp">

</div>

<label for="password">Password:</label>

<input type="password" id="password" name="password">

<button type="submit">Login</button>

</form>

On the server, if the `email_hp` field contains a value, the request is treated as coming from a bot and is blocked.

- Advantages: It effectively identifies malicious bots without hindering user experience. It is relatively simple to implement and hard to spoof.
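The server-side half of the honeypot form above can be sketched in a few lines. This example assumes the form is submitted as a standard URL-encoded body. The function name `is_bot_submission` is hypothetical, and only the field name `email_hp` comes from the form above.

```python
from urllib.parse import parse_qs

# Name of the hidden field from the form above.
HONEYPOT_FIELD = "email_hp"


def is_bot_submission(raw_body: str) -> bool:
    """Return True if the hidden honeypot field was filled in.

    `raw_body` is the URL-encoded POST body, for example
    "username=alice&email_hp=&password=...".
    """
    # keep_blank_values=True keeps empty fields so they can be inspected.
    fields = parse_qs(raw_body, keep_blank_values=True)
    values = fields.get(HONEYPOT_FIELD, [])
    return any(value.strip() for value in values)
```

A request handler would call this before processing the login and reject the request, or record the client for blocking, when it returns true.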

5. Web Application Firewall (WAF) and Specialized Bot Management Solutions

If you operate a large website or a sensitive service, employing professional WAF solutions (e.g., Cloudflare, AWS WAF, Sucuri) or bot management solutions (e.g., Cloudflare Bot Management, Akamai Bot Manager, PerimeterX) is often the most effective approach. These solutions provide:

- Advanced Behavior Analysis: They analyze real-time traffic using machine learning to detect subtle behavioral differences between humans and bots.

- Threat Intelligence: They utilize global attack patterns and IP blacklist databases to identify widespread threats.

- Minimizing False Positives: They filter out only malicious bots while minimizing false positives for beneficial bots and normal users through sophisticated algorithms.

- Automated Response: They automatically block detected malicious bots, present challenges, and take other actions.

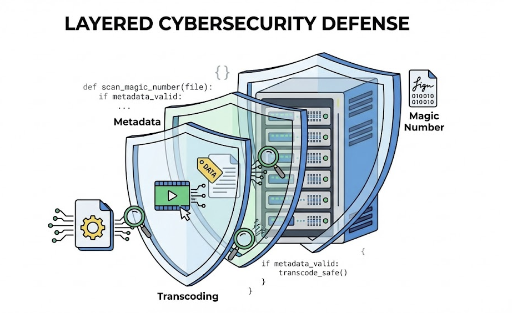

Conclusion: Proactive and Layered Bot Defense Strategy

Malicious bots continually evolve and seek to attack websites. The robots.txt file serves as guidance for honest bots, but it cannot act as a shield against intruders. Thus, to ensure the security and stability of your website, a proactive, layered defense strategy that analyzes and identifies bot behavior to selectively block only malicious bots is essential.

By implementing log analysis to identify abnormal behavior patterns, precisely setting rate limits, utilizing honeypots, and incorporating professional bot management solutions or WAF when necessary, you can better secure your website and maintain smooth interactions with beneficial bots, providing a pleasant service to users. What bot threats is your website most vulnerable to? It's time to consider appropriate defense strategies.
