1. Why Does My Server Stall Every Time I Deploy?

When running personal projects, you often end up on modest hardware—think GCP’s tiniest instances or a Raspberry Pi. I juggle a fleet of machines, from beefy AI inference servers to a VM that barely keeps a name server alive. I’m especially fond of the Raspberry Pi 5: it runs 24/7 with negligible electricity cost and offers solid performance.

If other servers feel like livestock you have to manage, the Pi feels more like a beloved pet.

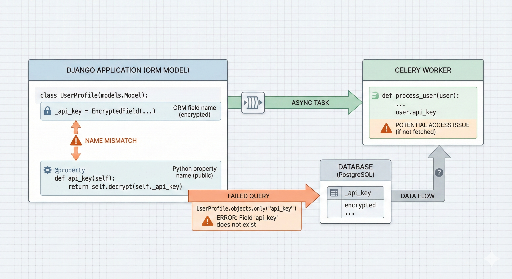

But during deployments, the Celery workers overloaded this little companion and pinned the CPU at 100%. When I launched both the Blue and Green environments for a zero‑downtime rollout, the number of workers instantly doubled and overwhelmed the system. Reducing the worker count slowed processing, while keeping them all crashed the server: a classic catch‑22.

To solve this, I built a custom Blue‑Green deployment script that minimizes resource usage while preserving stability.

2. Strategy: Conserve Resources, Boost Reliability

A vanilla Blue‑Green deployment runs both environments side‑by‑side, but I needed to keep CPU load low. My approach:

- Pre‑emptively stop background services (Celery): Before the new web server starts, shut down the heavy background tasks (workers, beat) from the old version to free up CPU.

- Staged startup: Bring up only Web + Redis first, run health checks, and only then launch the rest.

- Human‑in‑the‑loop: After automation finishes, an admin manually verifies everything before the old version is finally removed.
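The three-step ordering above can be sketched as a single function. This is a minimal sketch, not the script from the repository. The service names (`web`, `redis`, `celery_worker`, `celery_beat`), the `deploy_staged` function, and the health-check URL argument are hypothetical. It also assumes the compose file is the default one in the current directory, and it reduces the health check to a single curl call.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the staged Blue-Green rollout described above.
# Assumed service names: web, redis, celery_worker, celery_beat.

# deploy_staged OLD NEW URL
#   OLD, NEW: Compose project names, e.g. "blue" and "green"
#   URL:      health-check endpoint of the new web container (assumed)
deploy_staged() {
  local old="$1" new="$2" url="$3"

  # 1. Free CPU first: stop (not remove) the old version's background services
  docker compose -p "$old" stop celery_worker celery_beat || return 1

  # 2. Start only the lightweight pieces of the new version
  docker compose -p "$new" up -d web redis || return 1

  # 3. Gate everything else on a single health check (no retries in this sketch)
  if ! curl -fsS --max-time 5 "$url" > /dev/null; then
    echo "health check failed for $new; leaving rollback to the caller" >&2
    return 1
  fi

  # 4. Only now bring up the new Celery worker and beat
  docker compose -p "$new" up -d celery_worker celery_beat
}
```

Because the old web container is never touched, it keeps serving traffic throughout. Only the old background work pauses while the new version warms up.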

The root cause was that Celery workers, upon initialization, immediately received and executed a burst of tasks, spiking CPU usage. Lowering Celery’s concurrency would have helped, but it would also slow asynchronous processing during the rollout—something I didn’t want.

The idea that clicked was to pause the existing Celery workers just long enough to free up CPU, deploy the new code, and then resume background processing. This achieves zero downtime without overloading the machine.

3. Core Code Walkthrough

You can find the full script in my GitHub repository. Below are a few key snippets.

① Isolating Projects with Docker Compose

Using the docker compose -p flag, I create two separate projects—blue and green—from the same compose file.

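```bash
# Wrapper around docker compose that targets one isolated project (blue or green).
# PROJECT_NAME is an assumed variable, set to "blue" or "green" before calling dc.
dc() {
  # -p sets the Compose project name, so each color gets its own containers and network
  docker compose -p "$PROJECT_NAME" -f "$COMPOSE_FILE" "$@"
}
```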
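Because both environments come from the same compose file, the script needs to know which project is live before it starts the other. One way to decide is sketched below. It assumes that the live project is whichever one has running containers and that the command is run from the directory holding the compose file. `docker compose -p NAME ps -q` prints only the IDs of that project's running containers. The `target_color` name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pick the idle project (blue or green) to deploy into.
# Assumption: the live project is the one with running containers.
target_color() {
  local running
  # -q prints only container IDs; empty output means nothing is running
  running="$(docker compose -p blue ps -q 2>/dev/null)"
  if [ -n "$running" ]; then
    echo green
  else
    echo blue
  fi
}

# Usage: NEW="$(target_color)"
```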

② Rigorous Health Checks

Traffic is never switched until the new version passes a health check.

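```bash
# Poll a URL until it answers successfully, giving up after 10 attempts.
# Takes the URL as the first argument; HEALTH_TIMEOUT and ok() come from the rest of the script.
health_check() {
  local url="$1" attempt
  for attempt in $(seq 1 10); do
    # -f: fail on HTTP status >= 400; -I: HEAD request; -L: follow redirects
    if curl -fsSIL --max-time "$HEALTH_TIMEOUT" "$url" > /dev/null; then
      ok "Health check passed"
      return 0
    fi
    sleep 2  # assumed pause between attempts
  done
  return 1
}
```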
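The post doesn't show how traffic is actually switched once the health check passes. The following is purely an illustration. It assumes Nginx sits in front of the app and reads its upstream from an include file, so a cutover could rewrite that file and reload Nginx only if `nginx -t` validates the result. `UPSTREAM_CONF`, its default path, the upstream name `app`, and `switch_traffic` are all hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical cutover step, assuming Nginx proxies to an upstream defined in
# an include file. UPSTREAM_CONF and the port argument are assumptions.
UPSTREAM_CONF="${UPSTREAM_CONF:-/etc/nginx/conf.d/app_upstream.conf}"

# switch_traffic PORT: point the "app" upstream at 127.0.0.1:PORT and reload Nginx
switch_traffic() {
  local port="$1" backup
  backup="$(mktemp)"
  # Keep the previous upstream so a bad config can be restored
  cp "$UPSTREAM_CONF" "$backup" 2>/dev/null || true
  printf 'upstream app {\n    server 127.0.0.1:%s;\n}\n' "$port" > "$UPSTREAM_CONF"
  # nginx -t validates the full config before the reload is attempted
  if nginx -t && nginx -s reload; then
    rm -f "$backup"
    return 0
  fi
  cp "$backup" "$UPSTREAM_CONF"
  rm -f "$backup"
  return 1
}

# Usage (only after a passing check): switch_traffic 8001
```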

③ Graceful Rollback on Failure

If the new version misbehaves, the script quickly brings the old services back online, avoiding any outage. Instead of force‑killing Celery workers and beat, I use a lightweight stop to reclaim CPU while keeping the processes ready for an instant restart.
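In Docker Compose, `stop` halts a service's containers without removing them, and `start` brings those same containers back. By contrast, `down` stops and removes a project's containers and networks. The post doesn't name the command behind the "lightweight stop". Assuming it is along these lines, a pause/rollback pair could look like the following sketch. The function names and the `celery_worker`/`celery_beat` service names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical pause/rollback helpers. Assumed service names: celery_worker, celery_beat.
# "stop" keeps the containers, so "start" can revive them without recreating anything.

# pause_background OLD: free CPU by stopping the old project's Celery services
pause_background() {
  docker compose -p "$1" stop celery_worker celery_beat
}

# rollback OLD NEW: tear down the failed new project, revive the old Celery services
rollback() {
  local old="$1" new="$2"
  echo "Rolling back: removing $new, restarting background services in $old" >&2
  # down removes the new project's containers and its default network
  docker compose -p "$new" down
  docker compose -p "$old" start celery_worker celery_beat
}
```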

4. Operational Tip: The Art of “Verify‑Then‑Delete”

The script ends by prompting the admin to manually confirm the deployment before cleaning up the old version.

"Deployment succeeded. Please log in and verify everything. If all looks good, copy the command below to clean up the previous version."
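The closing prompt can be as simple as printing the cleanup command instead of running it, so nothing is deleted until a person has looked. The following is a sketch that assumes the old environment is removed with `docker compose -p OLD down`. `print_cleanup_hint` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical final step: print, but never run, the command that removes the old version.
print_cleanup_hint() {
  local old="$1"
  echo "Deployment succeeded. Please log in and verify everything."
  echo "If all looks good, run this to clean up the previous version:"
  echo "  docker compose -p $old down"
}

# Usage: print_cleanup_hint blue
```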

That single safeguard catches the last 1% of errors that automation might miss.

5. Closing Thoughts

This script embodies the compromises required to squeeze maximum efficiency out of limited resources. While it’s a modest piece of code, it’s been a reliable workhorse for me, and I hope it helps other small‑scale developers facing similar constraints.

Related Links:

- Direct link to the GitHub repository

- Creating Your Own Automated Deployment System with GitHub Webhooks ⑤: Nginx, HTTPS Configuration, and Final Integration

- Feel free to leave comments or open an Issue on GitHub for any questions!
