NVIDIA DGX Spark - The Birth of a Compact GPU Server for On-Premise AI

In May 2025, NVIDIA is expected to announce DGX Spark, a compact, high-performance GPU server positioned as a new standard for AI infrastructure. Although the official release date has not yet been confirmed, let's look at the specifications disclosed so far and how this product could be used in AI-related businesses.

What is DGX Spark?

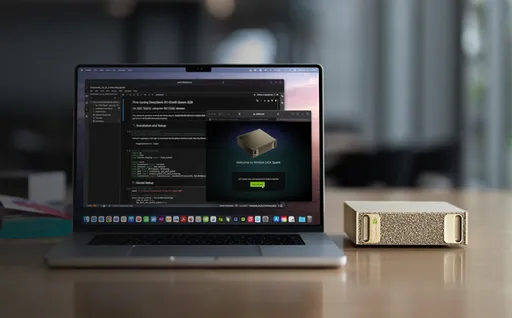

DGX Spark carries forward the philosophy of NVIDIA's existing DGX systems (e.g., DGX H100 and DGX A100), delivering powerful on-premise AI in a compact form factor.

✅ Key Objectives

- Establishing in-house AI infrastructure for AI startups, SMEs, and research institutions

- Serving as a cloud alternative in environments where data privacy and data sovereignty are critical

- Optimizing experiments and inference with a low-power, low-noise, desktop-level GPU server

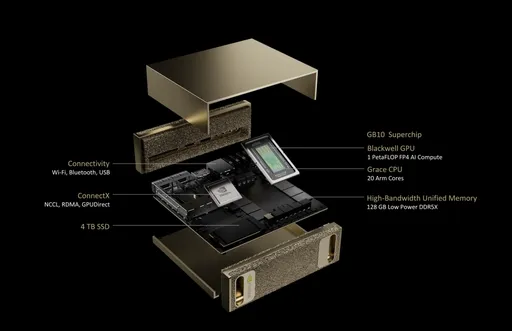

Expected Specifications of DGX Spark (Based on Disclosed Information)

| Item | Specification (Estimated or Based on Leaks) |
|---|---|
| GPU | 1-2 NVIDIA Blackwell-based GPUs (e.g., B100 or GB200) |
| Memory | 128-192 GB HBM3e |
| Storage | High-speed NVMe SSD (expandable to multiple TB) |
| Network | 10/100 Gb Ethernet; possible NVLink support |
| Power Consumption | 800-1200 W (estimated) |
| Form Factor | Desktop tower or 4U rack mount |

⚠️ Specifications will be updated upon official release.
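To get a feel for what 128-192 GB of memory means in practice, here is a back-of-envelope sketch of which model sizes could be served for inference. It assumes weights dominate memory use and adds a flat 20% overhead (our assumption, not an NVIDIA figure) for KV cache, activations, and runtime buffers; the FP16/FP8/FP4 byte sizes are standard, but whether a given precision is practical depends on the software stack.

```python
# Back-of-envelope check: which model sizes fit in DGX Spark's *estimated* memory
# for inference. Assumptions (ours, not NVIDIA's): weights dominate memory use,
# and a flat 20% overhead covers KV cache, activations, and runtime buffers.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}
OVERHEAD = 1.2  # assumed headroom factor on top of the raw weights


def inference_memory_gb(params_billion: float, dtype: str) -> float:
    """Approximate memory in GB needed to serve a model.

    1 billion parameters at 1 byte each is 1 GB (decimal), so the
    1e9 factors cancel and we can work directly in billions and GB.
    """
    return params_billion * BYTES_PER_PARAM[dtype] * OVERHEAD


def max_params_billion(memory_gb: float, dtype: str) -> float:
    """Largest model (in billions of parameters) that fits in memory_gb."""
    return memory_gb / (BYTES_PER_PARAM[dtype] * OVERHEAD)


if __name__ == "__main__":
    # 128 GB and 192 GB are the low and high ends of the estimated range in the table.
    for memory in (128, 192):
        for dtype in ("fp16", "fp8", "fp4"):
            print(f"{memory} GB @ {dtype}: up to ~{max_params_billion(memory, dtype):.0f}B params")
    print(f"A 70B model @ fp16 needs ~{inference_memory_gb(70, 'fp16'):.0f} GB")
```

Under these assumptions, 128 GB holds roughly a 53B-parameter model at FP16 or about 213B at FP4, while a 70B model at FP16 (about 168 GB) only fits at the high end of the estimated range.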

Key Application Areas of DGX Spark

1. Local AI Model Training and Fine-tuning Platform

- Building small to medium-sized LLMs and vision models in-house

- A GPU-server-based experimental environment for in-house AI R&D
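What counts as "small to medium-sized" for in-house fine-tuning is mostly a memory question. The sketch below uses a commonly cited rule of thumb of about 16 bytes per parameter for full fine-tuning with Adam in mixed precision, and compares it with adapter-style (LoRA-like) fine-tuning where the base model stays frozen. The 1% adapter size and the 128 GB budget (the low end of the estimated range) are assumptions, and activation memory is deliberately left out.

```python
# Rule-of-thumb memory for fine-tuning, excluding activations (which depend on
# batch size and sequence length). Assumptions, not measurements:
#   - full fine-tuning with Adam in mixed precision: ~16 bytes/parameter
#     (fp16 weights 2 + fp16 gradients 2 + fp32 master weights 4 + Adam moments 8)
#   - LoRA-style fine-tuning: frozen fp16 base weights (2 bytes/param) plus a
#     small trainable adapter, here assumed to be 1% of the base parameter count.

FULL_FT_BYTES_PER_PARAM = 16
FROZEN_BYTES_PER_PARAM = 2


def full_finetune_gb(params_billion: float) -> float:
    """Approximate GB for full fine-tuning (weights, gradients, optimizer state)."""
    return params_billion * FULL_FT_BYTES_PER_PARAM


def adapter_finetune_gb(params_billion: float, adapter_fraction: float = 0.01) -> float:
    """Approximate GB for adapter (LoRA-style) fine-tuning with a frozen base model."""
    frozen_base = params_billion * FROZEN_BYTES_PER_PARAM
    trainable = params_billion * adapter_fraction * FULL_FT_BYTES_PER_PARAM
    return frozen_base + trainable


if __name__ == "__main__":
    memory_gb = 128  # low end of the estimated memory range (assumed budget)
    for size in (7, 13, 70):
        full = full_finetune_gb(size)
        adapter = adapter_finetune_gb(size)
        print(f"{size}B: full ~{full:.0f} GB (fits: {full <= memory_gb}), "
              f"adapter ~{adapter:.1f} GB (fits: {adapter <= memory_gb})")
```

By this estimate, a 7B model can be fully fine-tuned in about 112 GB before activations, while 13B-class models already push toward adapter-based methods on a 128 GB machine.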

2. On-Premise AI Inference Infrastructure

- Suitable for hosting private chatbots, internal document search, and edge AI analytics servers

- Likely demand from industries that need to keep AI data secure and want alternatives to the cloud
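For a private chatbot, the number of simultaneous conversations a box can serve is often limited by the KV cache rather than by the model weights. This sketch applies the standard KV-cache size formula (one K and one V tensor per layer). The model shape (32 layers, 8 KV heads, head dimension 128, FP16 cache) is an assumed 8B-class configuration, and the 100 GiB left over for the cache is a hypothetical figure.

```python
# KV-cache sizing for a private chatbot: how many concurrent conversations fit
# in the memory left over after loading the model weights. The model shape below
# (32 layers, 8 KV heads, head_dim 128, fp16 cache) is an assumed 8B-class
# configuration used purely for illustration.

def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_elem: int = 2) -> int:
    """Bytes of KV cache: one K and one V tensor per layer (hence the factor 2)."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


def max_concurrent_sequences(free_bytes: int, per_sequence_bytes: int) -> int:
    """Number of full-length sequences whose KV cache fits in free_bytes."""
    return free_bytes // per_sequence_bytes


if __name__ == "__main__":
    GIB = 2**30
    per_seq = kv_cache_bytes(n_layers=32, n_kv_heads=8, head_dim=128, seq_len=8192)
    print(f"KV cache per 8K-token conversation: {per_seq / GIB:.2f} GiB")
    free = 100 * GIB  # hypothetical memory left for the cache after weights and runtime
    print(f"Concurrent 8K-token conversations: {max_concurrent_sequences(free, per_seq)}")
```

With these assumptions each full 8K-token conversation costs exactly 1 GiB of cache, so about 100 such conversations fit in 100 GiB; shorter conversations or a quantized cache would raise that number.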

3. Edge AI Deployment and Industrial Automation

- Well-suited for edge computing environments in manufacturing, finance, and healthcare

- Combined with NVIDIA AI Enterprise software, can deliver optimized GPU-accelerated inference

4. GPU Experimental Infrastructure for Educational/Research Institutions and Startups

- Meets performance needs with a compact GPU server instead of a high-cost, full-size DGX system

- A realistic option for users looking to cut cloud GPU costs
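Whether a local server actually replaces cloud GPU spending comes down to a break-even calculation. The sketch below compares cumulative cloud rental against a one-time purchase plus electricity. Every input is a hypothetical placeholder (no official price is assumed here), except that the power draw uses the middle of the 800-1200 W estimate from the spec table.

```python
# Break-even estimate: months until buying a server costs less than renting
# equivalent cloud GPU time. All example inputs are hypothetical placeholders;
# power draw uses the middle of the 800-1200 W estimate in the spec table.

import math


def monthly_energy_cost(power_watts: float, hours_per_month: float, price_per_kwh: float) -> float:
    """Electricity cost per month for the on-premise machine."""
    return power_watts / 1000 * hours_per_month * price_per_kwh


def breakeven_months(hardware_price: float, cloud_hourly_rate: float, power_watts: float,
                     hours_per_month: float, price_per_kwh: float) -> float:
    """Months after which cumulative cloud spend exceeds purchase price plus electricity.

    Returns math.inf if the machine never pays for itself (energy >= cloud spend).
    Ignores maintenance, staffing, depreciation, and resale value.
    """
    cloud = cloud_hourly_rate * hours_per_month
    saving = cloud - monthly_energy_cost(power_watts, hours_per_month, price_per_kwh)
    if saving <= 0:
        return math.inf
    return hardware_price / saving


if __name__ == "__main__":
    months = breakeven_months(
        hardware_price=10_000,   # hypothetical purchase price, USD
        cloud_hourly_rate=2.00,  # hypothetical cloud GPU rate, USD/hour
        power_watts=1000,        # midpoint of the 800-1200 W estimate
        hours_per_month=300,     # hypothetical utilization
        price_per_kwh=0.15,      # hypothetical electricity price, USD/kWh
    )
    print(f"Break-even after ~{months:.1f} months")
```

With these placeholder inputs the machine pays for itself after roughly 18 months; lower utilization or cheaper cloud rates push the break-even point out, sometimes indefinitely.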

Why is DGX Spark Gaining Attention in the Industry?

- The on-premise AI infrastructure market is growing as more organizations seek to reduce their exposure to cloud-related risks

- Growing interest in compact, high-performance hardware within the GPU server market

- A surge in demand for local inference servers driven by data sovereignty and security concerns

- Alignment with trends in edge AI, AI inference optimization, and private cluster operations

Conclusion: Who Is DGX Spark For?

DGX Spark is:

- An AI supercomputer on your desk, not just for large data centers

- A realistic choice for teams pursuing a cloud + on-premise hybrid strategy

- Broadly applicable to AI R&D, security-sensitive services, and industrial edge deployments

NVIDIA DGX Spark is more than just a server. As AI-era computing shifts from the cloud back toward local hardware, there is a strong possibility it will become the standard for private GPU infrastructure.
