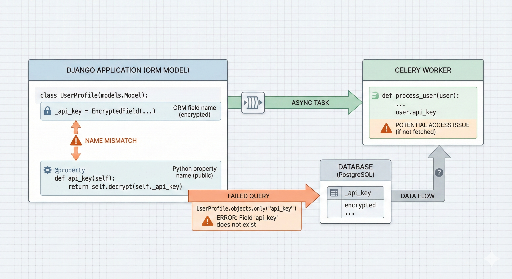

1. Issue Overview

In a Django environment, calling Celery tasks through `transaction.on_commit()` led to an issue where ManyToMany field data appeared empty inside the Celery task.

2. Phenomenon

- `post.categories.add(...)` and `post.tags.add(...)` complete.

- `translate_post.delay()` is called from the `transaction.on_commit()` callback.

- Inside the Celery task, `post.categories.all()` and `post.tags.all()` return an empty list (`[]`).

3. Environment Configuration

- WRITE: PostgreSQL master on a GCP VM

- READ: PostgreSQL replica (streaming replication) on a Raspberry Pi

- A Django database router sends read queries to the replica

- The `Post` model uses ManyToMany relationship fields (`categories`, `tags`)
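The read routing in this setup is typically done with a Django database router. A minimal sketch, assuming the aliases `default` (master) and `replica` in `DATABASES`; the alias name `replica`, the class name `PrimaryReplicaRouter`, and the module path are hypothetical:

```python
# myproject/db_routers.py (hypothetical path)
# Assumes settings.DATABASES defines "default" (GCP master) and "replica" (Raspberry Pi).


class PrimaryReplicaRouter:
    """Send writes to the master and every read to the streaming replica."""

    def db_for_read(self, model, **hints):
        # All ORM reads land here, including ManyToMany lookups inside Celery tasks.
        return "replica"

    def db_for_write(self, model, **hints):
        return "default"

    def allow_relation(self, obj1, obj2, **hints):
        # Both aliases hold the same data, so relations across them are allowed.
        return True

    def allow_migrate(self, db, app_label, model_name=None, **hints):
        # Migrate only the master; the replica receives schema changes via WAL.
        return db == "default"


# settings.py would then register it (path assumed):
# DATABASE_ROUTERS = ["myproject.db_routers.PrimaryReplicaRouter"]
```

With this router in place, every read in a Celery worker goes to the replica unless the code explicitly asks for another alias.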

4. Log Analysis

✅ Summary of Events (example timestamps)

- 13:13:56.728: `Post` created
- 13:14:00.922: last `TaggedItem` (ManyToMany) query
- 13:14:01.688: `on_commit()` executes → Celery task called
- 13:14:01.772: query results in `translate_post()`:
  - categories: `[]`
  - tags: `[]`

So the order of events is correct, but the ManyToMany data is not visible to the task.
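Putting the log timestamps side by side makes the window explicit. The snippet below uses only the four timestamps from the log summary (the labels are paraphrased) and computes the gap between consecutive events:

```python
from datetime import datetime, timedelta

# Timestamps from the log summary (same day, so only the time of day matters).
EVENTS = [
    ("Post created", "13:13:56.728"),
    ("last TaggedItem (M2M) query", "13:14:00.922"),
    ("on_commit() -> Celery call", "13:14:01.688"),
    ("query in translate_post()", "13:14:01.772"),
]


def gaps_ms(events):
    """Return (label, milliseconds since the previous event) for every event after the first."""
    times = [(label, datetime.strptime(ts, "%H:%M:%S.%f")) for label, ts in events]
    return [
        (label, (t - prev_t) / timedelta(milliseconds=1))
        for (_, prev_t), (label, t) in zip(times, times[1:])
    ]


for label, ms in gaps_ms(EVENTS):
    print(f"{label:<30} +{ms:.0f} ms")
```

The gaps are 4194 ms, 766 ms and 84 ms. The last one is what matters: the task queried the replica only 84 ms after `on_commit()` fired, well within the millisecond-to-hundreds-of-milliseconds lag of asynchronous replication.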

5. Cause Analysis

✅ Replication Lag on the PostgreSQL Replica

- PostgreSQL streaming replication is asynchronous by default

- Changes committed on the master reach the replica after a lag that can range from a few milliseconds to hundreds of milliseconds

- The ManyToMany intermediate-table rows written by `add()` had not yet been applied on the replica

✅ How Django's on_commit() Works

- `on_commit()` runs immediately after the Django transaction commits on the master

- However, Celery runs in a separate worker process, and its reads are routed to the replica

- As a result, when the task queries the ManyToMany data, the replica can still be in its pre-update state
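The race reduces to one comparison: the task sees the ManyToMany rows only if the replica has replayed the commit before the task reads. The toy model below is purely illustrative (it is not how PostgreSQL works internally), the row strings and lag values are hypothetical, and the default read delay is the 84 ms between `on_commit()` (13:14:01.688) and the task's query (13:14:01.772) in the log:

```python
from dataclasses import dataclass, field


@dataclass
class ToyAsyncReplica:
    """Illustrative async standby: a commit becomes readable lag_ms after it happens."""

    lag_ms: float
    _log: list = field(default_factory=list, init=False)  # (commit_time_ms, rows) pairs

    def ship(self, commit_time_ms, rows):
        # The master has already committed; the standby applies it later.
        self._log.append((commit_time_ms, rows))

    def read(self, now_ms):
        # Only rows whose commit has been "replayed" by now_ms are visible.
        return [
            row
            for commit_ms, rows in self._log
            if now_ms >= commit_ms + self.lag_ms
            for row in rows
        ]


# Hypothetical intermediate-table rows written by post.tags.add() / post.categories.add().
M2M_ROWS = ["post_tags(post=1, tag=django)", "post_categories(post=1, category=backend)"]


def task_sees_rows(lag_ms, read_delay_ms=84.0):
    """Commit at t=0 (on_commit fires), then the Celery task reads read_delay_ms later."""
    replica = ToyAsyncReplica(lag_ms=lag_ms)
    replica.ship(0.0, M2M_ROWS)
    return replica.read(read_delay_ms)


print(task_sees_rows(lag_ms=150.0))  # hypothetical slow replica
print(task_sees_rows(lag_ms=20.0))   # hypothetical fast replica
```

With a 150 ms lag the task gets `[]`, matching the log; with a 20 ms lag it gets both rows. Because the lag varies, the same code can succeed on one run and fail on the next.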

6. Verification Points

- The post-creation log entry exists

- The `add()` queries appear in the logs and executed correctly

- The actual query order and task invocation sequence are correct

- The issue is that the task's queries were served by the replica
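One way to confirm which node served a query is to ask PostgreSQL directly. `pg_is_in_recovery()` returns true on a standby, and `now() - pg_last_xact_replay_timestamp()` gives the time since the last replayed transaction (NULL on the master; it overstates the real lag when the master has had no recent writes). A minimal sketch for any DB-API cursor; the helper name `describe_node` is hypothetical:

```python
def describe_node(cursor):
    """Report whether the connection behind `cursor` is a standby, and how stale it is.

    Works with any DB-API cursor connected to PostgreSQL, e.g. in Django:
        with connections["replica"].cursor() as cursor:
            print(describe_node(cursor))
    """
    cursor.execute(
        "SELECT pg_is_in_recovery(), "
        "EXTRACT(EPOCH FROM now() - pg_last_xact_replay_timestamp()) * 1000"
    )
    in_recovery, lag_ms = cursor.fetchone()
    if not in_recovery:
        return "primary"
    if lag_ms is None:
        return "standby (no transaction replayed yet)"
    # EXTRACT may come back as Decimal depending on server/driver version.
    return f"standby, last replay {float(lag_ms):.0f} ms ago"
```

Running this through the same alias the Celery task reads from shows directly whether the task was talking to the replica.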

7. Solutions

1. Force reads from the master DB in Celery tasks

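```python
# ORM method (sketch): pin every read in the task to the master ('default')
from celery import shared_task

from .models import Post  # assumed location of the Post model


@shared_task
def translate_post(post_id):
    post = Post.objects.using('default').get(id=post_id)
    tags = post.tags.using('default').all()
    categories = post.categories.using('default').all()
    # ... translation logic ...
```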

2. Configure a Celery-specific DB router

Register a router in `DATABASE_ROUTERS` in settings.py that always sends reads to the master while code runs inside a Celery task.
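One way to implement this is a router that checks a flag set only while a task body runs. The sketch below uses a `contextvars.ContextVar` for the flag; the names `use_primary` and `CeleryAwareRouter` and the `replica` alias are hypothetical, and how you apply the flag to every task (a decorator, or Celery's `task_prerun`/`task_postrun` signals) is left for you to adapt:

```python
import contextvars
from contextlib import contextmanager

# True while ORM reads should go to the master ("default"); hypothetical flag.
_force_primary = contextvars.ContextVar("force_primary", default=False)


@contextmanager
def use_primary():
    """Route ORM reads executed inside this block to the master."""
    token = _force_primary.set(True)
    try:
        yield
    finally:
        _force_primary.reset(token)


class CeleryAwareRouter:
    """Reads go to the replica unless use_primary() is active; writes always go to the master."""

    def db_for_read(self, model, **hints):
        return "default" if _force_primary.get() else "replica"

    def db_for_write(self, model, **hints):
        return "default"

    def allow_relation(self, obj1, obj2, **hints):
        return True

    def allow_migrate(self, db, app_label, model_name=None, **hints):
        return db == "default"


# Usage inside a task (body assumed). The alias is chosen when a query executes,
# so evaluate querysets inside the block (e.g. with list()), not after it:
# @shared_task
# def translate_post(post_id):
#     with use_primary():
#         post = Post.objects.get(id=post_id)
#         tags = list(post.tags.all())
#         categories = list(post.categories.all())
```

Compared with sprinkling `.using('default')` through every task, this keeps the routing decision in one place, at the cost of remembering to enter the block.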

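3. If the replica itself must always be up to date:

- On the master, list the replica in `synchronous_standby_names` and set `synchronous_commit = remote_apply`. Plain `synchronous_commit = on` only waits until the standby has flushed the WAL, not until it has replayed it, so reads on the replica can still be stale. Either way, every commit now waits for the replica (and stalls if it is unreachable), which degrades write performance.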
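`synchronous_commit` can also be raised for Django's own connection only, so other clients of the master keep the default. A sketch of the Django side, assuming the master's server configuration already lists the replica in `synchronous_standby_names` and that the PostgreSQL driver passes libpq's `options` string through; the database name and host are placeholders:

```python
# settings.py (sketch): applies only to connections Django opens to "default".
# Assumes postgresql.conf on the master already sets synchronous_standby_names
# to include the Raspberry Pi replica; otherwise there is no standby to wait for.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.postgresql",
        "NAME": "blog",                  # placeholder
        "HOST": "gcp-master.internal",   # placeholder
        "OPTIONS": {
            # libpq "options": set synchronous_commit=remote_apply for this session,
            # so COMMIT returns only after the standby has replayed the transaction.
            "options": "-c synchronous_commit=remote_apply",
        },
    },
}
```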

8. Conclusion

This issue is not a Django bug; it results from replication lag in an asynchronous setup colliding with a routing policy that sends ORM reads to the replica.

The key to the solution is to make sure Celery tasks read from the master DB.

9. Jesse's Comment

"While `on_commit()` definitely executed at the correct time, the core of the issue was that the read DB was the replica.

Django and Celery are not at fault. Ultimately, it's the developer's responsibility to understand and manage system architecture."
