Even if you're not a developer, you've probably heard the term 'ASCII' at least once. You may have run into garbled text that looks like a foreign language while browsing the web, or seen a technical error message such as "ASCII code is not compatible."

What exactly is this ASCII code that is treated like the 'common language' of the digital world? Today, let's explore the foundational agreement that allows computers to understand human language: the ASCII code.

1. Computers Only Understand Numbers

The characters we see on the screen as 'A', 'B', 'C' are not actually images or letters to a computer. This is because computers are essentially just calculators that can only understand 0s and 1s (binary).

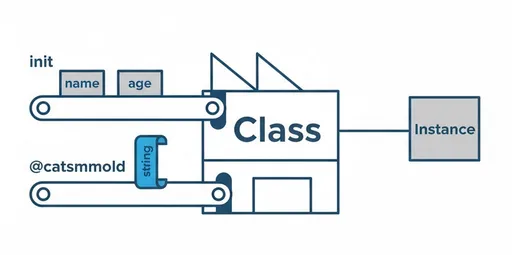

So how are the characters we type on the keyboard stored in the computer? A "promise" is needed between humans and computers.

"Let's agree that the number 65 represents the letter 'A.'"

"And that 97 represents the lowercase 'a.'"

The table that systematically organizes this agreement is known as ASCII (American Standard Code for Information Interchange). As the name implies, it began as an American standard for exchanging information between machines.
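To make this "promise" concrete, here is a minimal Python sketch that looks up the number behind a character and the character behind a number. The helper names (`char_to_code`, `code_to_char`, `to_binary`) are made up for this illustration; only `ord`, `chr`, and `format` are Python built-ins.

```python
def char_to_code(ch: str) -> int:
    """Return the numeric code agreed on for a single character."""
    return ord(ch)


def code_to_char(code: int) -> str:
    """Return the character that an ASCII code (0-127) stands for."""
    if not 0 <= code <= 127:
        raise ValueError("ASCII codes run from 0 to 127")
    return chr(code)


def to_binary(ch: str) -> str:
    """Show the 0s and 1s the computer actually stores, padded to 7 digits."""
    return format(ord(ch), "07b")


print(char_to_code("A"))  # 65
print(code_to_char(97))   # a
print(to_binary("A"))     # 1000001
```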

2. The Structure of ASCII Code: The Magic of 7 Bits

ASCII code was established in the 1960s. At that time, computer resources were extremely limited, so it was necessary to represent characters as efficiently as possible. Hence, the choice of 7 bits.

- A total of 128 characters: 7 bits can represent $2^7 = 128$ combinations, numbered 0 to 127.
- Components:
  - Control characters (0~31, plus 127): These are not displayed on the screen but are responsible for communication control, line feeds, tabs, etc. Code 127 is the DEL (delete) control character.
  - Printable characters (32~126): This includes the space, special symbols, digits (0-9), Latin uppercase letters (A-Z), and lowercase letters (a-z).
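The 7-bit layout can be checked with a short Python sketch. It assumes the usual reading of the standard, in which code 127 (DEL) counts as a control character alongside 0~31; the `classify` function is a name invented for this example.

```python
TOTAL_CODES = 2 ** 7  # every combination that 7 bits can express


def classify(code: int) -> str:
    """Label an ASCII code as 'control' or 'printable'."""
    if not 0 <= code < TOTAL_CODES:
        raise ValueError("not a 7-bit ASCII code")
    # Assumption stated above: 0-31 and 127 (DEL) are control characters.
    if code < 32 or code == 127:
        return "control"
    return "printable"


counts = {"control": 0, "printable": 0}
for code in range(TOTAL_CODES):
    counts[classify(code)] += 1

print(TOTAL_CODES)  # 128
print(counts)       # {'control': 33, 'printable': 95}
```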

3. Why Do We Still Use ASCII?

Modern technology has advanced tremendously, yet ASCII code remains the root of the digital world. What are the reasons?

1) The King of Compatibility

Even UTF-8, the most widely used character encoding today, is backward compatible with ASCII. In a UTF-8 document, the English letter 'A' is stored as exactly the same byte as in ASCII. This means that old legacy systems can exchange English text with the latest systems without any conversion.
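A quick way to see this compatibility is to encode the same English text both ways and compare the bytes. This is only a sketch using Python's built-in codecs; the sample sentence is arbitrary.

```python
text = "ASCII works everywhere: A-Z, a-z, 0-9!"

ascii_bytes = text.encode("ascii")
utf8_bytes = text.encode("utf-8")

# For text made only of ASCII characters, the two encodings are byte-identical.
same_bytes = ascii_bytes == utf8_bytes

# Bytes written by an old ASCII-only system can be read as UTF-8 unchanged.
decoded = ascii_bytes.decode("utf-8")

print(same_bytes)          # True
print(decoded == text)     # True
print("A".encode("utf-8")) # b'A'
```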

2) The Foundation of Programming

When dealing with characters in programming, the rules of ASCII values are very useful.

- 'A' is 65, 'a' is 97.
- The difference between these two values is exactly 32.

Developers use these numerical rules to convert between uppercase and lowercase or efficiently sort data.
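The sketch below shows how that fixed gap of 32 can drive case conversion by hand, and how sorting by code value behaves. The function names are hypothetical; in real Python code you would normally just call `str.upper()` and `str.lower()`.

```python
CASE_OFFSET = ord("a") - ord("A")  # 97 - 65 = 32


def to_upper(ch: str) -> str:
    """Uppercase one ASCII letter by subtracting 32; leave other characters alone."""
    if "a" <= ch <= "z":
        return chr(ord(ch) - CASE_OFFSET)
    return ch


def to_lower(ch: str) -> str:
    """Lowercase one ASCII letter by adding 32; leave other characters alone."""
    if "A" <= ch <= "Z":
        return chr(ord(ch) + CASE_OFFSET)
    return ch


def upper_text(text: str) -> str:
    return "".join(to_upper(ch) for ch in text)


print(upper_text("Hello, ascii!"))  # HELLO, ASCII!

# Sorting compares code values, so every uppercase letter (65-90)
# comes before every lowercase letter (97-122).
words = ["banana", "Apple", "apple", "Banana"]
print(sorted(words))  # ['Apple', 'Banana', 'apple', 'banana']
```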

3) The Lightest Data

Because every ASCII character fits within 1 byte, documents written only in English (Latin characters) are very small in size and fast to process. This is one reason why many of the internet's text-based communication protocols (HTTP/1.1, etc.) are built on ASCII.
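As a rough illustration of the "1 byte per character" point, the sketch below encodes an HTTP-style request line as ASCII and checks its size. The request line itself is just an example string, not taken from any real traffic.

```python
request_line = "GET /index.html HTTP/1.1\r\n"

encoded = request_line.encode("ascii")

# One byte per character: the byte count equals the character count.
one_byte_each = len(encoded) == len(request_line)

# Every byte stays below 128, so the top bit of each byte is unused.
fits_in_7_bits = max(encoded) < 128

print(len(request_line), len(encoded))
print(one_byte_each, fits_in_7_bits)  # True True
```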

4. ASCII's Legacy: ASCII Art

ASCII went beyond being a mere communication convention and created a culture of its own. In the era of terminal environments that didn't support graphics, people drew pictures using only keyboard characters. The 2005 Japanese drama "電車男" (Densha Otoko) even features a character who creates stunning ASCII art.

|\---/|

| o_o |

\_^_/

This culture of witty expression using such a limited set of characters can still be found in developers' source code comments or email signature lines today.

5. ASCII's Critical Limitation: The World Doesn't Only Use English

However, ASCII has a fatal flaw. Its very name, "American Standard," is indicative of this limitation.

In a 7-bit container, there simply wasn't any room for East Asian scripts such as CJK (Chinese, Japanese, Korean), or for the many other non-Latin scripts such as Arabic, Devanagari (used for Hindi), and Cyrillic.

As a result, every language group had to create its own encoding methods (like EUC-KR, Shift_JIS, Big5, etc.). When systems using different methods exchanged data, the characters were often decoded incorrectly and turned into unreadable symbols like 뷁 or □□. This phenomenon became common enough to earn its own name: Mojibake (character garbling).
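Both problems can be reproduced in a few lines of Python. The sketch first shows that ASCII cannot encode Korean text at all, then encodes the text as EUC-KR and deliberately decodes it as Shift_JIS to simulate two systems that disagree. The sample word and the `errors="replace"` setting are choices made for this illustration.

```python
original = "한글"

# 1) ASCII has no code for these characters, so encoding fails outright.
try:
    original.encode("ascii")
    ascii_can_encode = True
except UnicodeEncodeError:
    ascii_can_encode = False

# 2) The sender saves the text as EUC-KR ...
euc_kr_bytes = original.encode("euc_kr")

# ... but the receiver wrongly assumes Shift_JIS. errors="replace" keeps
# undecodable bytes from raising, as a lenient viewer might do.
garbled = euc_kr_bytes.decode("shift_jis", errors="replace")

# Decoding with the encoding the sender actually used restores the text.
restored = euc_kr_bytes.decode("euc_kr")

print(ascii_can_encode)      # False
print(garbled == original)   # False: this is mojibake
print(restored == original)  # True
```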

Ultimately, a new standard that could unify all the world's characters was needed, leading to the creation of Unicode. Interestingly, the very first positions (0-127) of this vast Unicode system are still occupied by ASCII code, honoring the origins of digital history.

Summary

- Definition: An initial standard agreement that matched numbers (0-127) with characters for computers to understand.
- Features: Includes only Latin alphabet letters, digits, and basic special characters.
- Limitations: Cannot represent non-Latin characters like CJK, Arabic, etc.

The ASCII code is not merely a sequence of numbers. It embodies the effort and ingenuity of early computer scientists who worked to translate human language into machine language as efficiently as possible, which is what makes it the most basic alphabet of the digital age.

Upcoming Post Announcement

We will explore the principles of Unicode, which transcends the narrow confines of ASCII code to encompass all languages and even emojis (😊). If you're curious about the question, "Why does my text get garbled?" stay tuned for the next post!
