Recently, I fine-tuned the FLUX 1-dev (12B) model to create character models for a service. I will share the measurements and the problems I ran into while training this 12-billion-parameter model on the low-power, ARM-based DGX Spark.

1. Training Environment and Settings

-

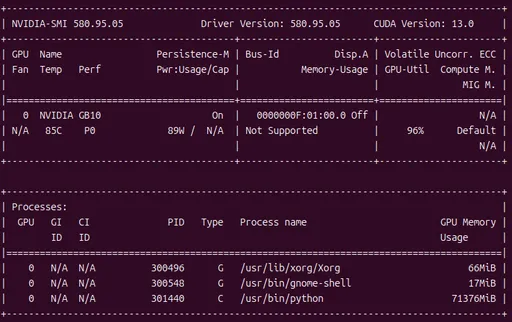

Hardware: DGX Spark (120GB Unified Memory)

-

Model: FLUX 1-dev (12B Parameters)

-

Dataset: 40 character images (1024px resolution)

-

Training Settings:

-

Batch Size: 1

-

Gradient Accumulation: 4

-

Epochs: 100

-

Total Steps: 1000

-
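The step count follows from the other settings. Here is a minimal sketch of the arithmetic, assuming the trainer counts one step per optimizer update and rounds a partial accumulation group up (the function name is my own, not part of any trainer):

```python
import math


def total_optimizer_steps(num_images, batch_size, grad_accum, epochs):
    """Optimizer steps for a run, assuming one step per accumulated batch."""
    # Each optimizer step consumes batch_size * grad_accum images.
    images_per_step = batch_size * grad_accum
    # Assumption: a partial final group in an epoch still counts as one step.
    steps_per_epoch = math.ceil(num_images / images_per_step)
    return steps_per_epoch * epochs


# Settings from this run: 40 images, batch size 1, accumulation 4, 100 epochs.
print(total_optimizer_steps(40, 1, 4, 100))  # 1000
```

With 40 images and an effective batch of 4, each epoch is 10 steps, so 100 epochs gives the 1000 steps listed above.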

2. Expectation vs Reality: The Failure of Time Management

NVIDIA's reference stated, "Training 13 images for 1000 steps takes about 90 minutes". Based on this, I expected to complete the training with 40 images in about 4-5 hours at most, or 2-3 hours at best.

However, the actual time taken was about 8 hours.

Analysis:

- Number of images and resolution: The 40-image dataset combined with the 1024px resolution setting added to the load.
- Time per Step: Each step took about 28 seconds on average. With gradient accumulation of 4, one step processes 4 images, so that is about 7 seconds per image, and 1000 steps at 28 seconds each comes to roughly 7.8 hours.
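To see how far the run drifted from the reference, the numbers can be put side by side. This is a rough sketch that assumes the 28-second figure is per optimizer step, which is what the roughly 8-hour total implies; the helper name is hypothetical:

```python
def projected_hours(steps, seconds_per_step):
    """Wall-clock hours for a run at a constant per-step time."""
    return steps * seconds_per_step / 3600


# Observed: about 28 seconds per step over 1000 steps.
observed_hours = projected_hours(1000, 28)

# NVIDIA's reference: 1000 steps in about 90 minutes.
reference_seconds_per_step = 90 * 60 / 1000

# How many times slower each step was than the reference.
slowdown = 28 / reference_seconds_per_step

print(f"observed: {observed_hours:.1f} h")
print(f"reference: {reference_seconds_per_step:.1f} s/step")
print(f"slowdown: {slowdown:.1f}x")
```

The reference does not say which resolution or batch settings it used, so the slowdown factor is only a rough comparison.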

3. OOM (Out Of Memory) and Memory Management

My first mistake was being overconfident about the 120GB of unified memory.

The problem was the services already running on the server:

- GPT-OSS (20B): 32GB reserved allocation
- ComfyUI: 16GB allocated

When I started training in this state, the Linux kernel's OOM killer forcibly terminated the training process. The roughly 66GB of free memory was not enough to train the 12B model (gradient calculations, etc.). I only achieved stable training after shutting down all background services.

- Actual memory usage during training: approximately 71.4GB (sustained)
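A pre-flight check would have caught this before the kernel did. Below is a minimal sketch that reads `MemAvailable` from `/proc/meminfo` on Linux and compares it with the memory the run is expected to need. The function names and the 8 GiB safety margin are my own assumptions, and `/proc/meminfo` reports KiB, so the result is in GiB rather than decimal GB:

```python
def mem_available_gib(meminfo_text):
    """Extract MemAvailable from /proc/meminfo contents, in GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            kib = int(line.split()[1])  # the value is reported in KiB
            return kib / (1024 ** 2)
    raise ValueError("MemAvailable not found in meminfo text")


def has_headroom(available_gib, required_gib, margin_gib=8.0):
    """True if available memory covers the requirement plus a margin."""
    # margin_gib is a hypothetical safety buffer, not a measured value.
    return available_gib >= required_gib + margin_gib


def check_before_training(required_gib, path="/proc/meminfo"):
    """Read the live system state (Linux only) and report whether to proceed."""
    with open(path) as f:
        available = mem_available_gib(f.read())
    return has_headroom(available, required_gib)
```

Calling `check_before_training(71.4)` with the other services still running would have returned `False`, since about 66GB free is already below the sustained usage measured here.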

4. Amazing Power Efficiency of ARM Architecture

On an x86 system with a high-performance discrete GPU, the power consumption would have been significant. However, the efficiency of the DGX Spark was remarkable.

- Peak Power: 89–90W
- Temperature: 84–86°C (stabilized to 81–83°C after using a mini fan)

The fact that I could train the 12B model at full load while consuming only about 90W indicates promising potential as an edge or on-device AI server.
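To put the 90W figure in perspective, here is the energy for the whole run as a quick calculation. It treats the peak draw as constant for the roughly 8 hours of training, so it is an upper bound, and it assumes the reading covers the whole machine, which I have not verified:

```python
def energy_kwh(power_watts, hours):
    """Energy in kWh for a constant power draw over a duration."""
    return power_watts * hours / 1000


def joules_per_step(power_watts, seconds_per_step):
    """Energy in joules for one training step at constant power."""
    return power_watts * seconds_per_step


# Peak draw of about 90 W held for about 8 hours.
print(energy_kwh(90, 8))        # 0.72
# About 28 seconds per step at the same draw.
print(joules_per_step(90, 28))  # 2520
```

So the full fine-tuning run used at most about 0.72 kWh.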

5. Bottleneck Discovery: Text Encoder (TE) Offload to CPU

I discovered a critical reason for the longer-than-expected training time.

The FLUX model uses two text encoders, CLIP-L and T5-XXL, and monitoring showed that one of them was running on the CPU instead of CUDA.

- Phenomenon: One TE allocated to CUDA, one TE allocated to CPU
- Impact: A bottleneck caused by the slower computation on the CPU and by moving data between the CPU and GPU

Even though there was no out-of-memory (OOM) condition, I still need to find out which setting caused the encoder to be offloaded to the CPU. In the next training run, I plan to explicitly place both encoders on CUDA and see whether that improves speed.
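One way to confirm the placement from inside the training script, rather than from monitoring, is to ask each module where its parameters live. This is a minimal sketch assuming the encoders are ordinary PyTorch `torch.nn.Module` objects; it relies only on `module.parameters()` and `param.device.type`, and the helper and variable names are hypothetical:

```python
def device_types(module):
    """Return the device types ('cuda', 'cpu', ...) holding a module's parameters."""
    return {param.device.type for param in module.parameters()}


def cpu_resident(named_modules):
    """Return the names of modules with at least one parameter on the CPU."""
    return [
        name
        for name, module in named_modules.items()
        if "cpu" in device_types(module)
    ]


# Example call, with placeholder variables for the two loaded encoders:
# cpu_resident({"clip_l": clip_encoder, "t5_xxl": t5_encoder})
```

If a module shows up in the result, calling `module.to("cuda")` is the standard PyTorch way to move it. Whether the trainer then moves it back depends on its offload settings, which is exactly what needs checking.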

6. Conclusion and Future Plans

Through this test, I realized that 100 Epochs / 1000 Steps is an excessive configuration.

- Improvement Direction: Either reducing the dataset to around 30 images, or keeping all 40 images and cutting training to 300-400 Steps, seems more efficient.
- Hardware: The ability to fine-tune a massive model with just 90W of power is very attractive, but a cooling solution (like a mini fan) is essential. Running at around 86°C for 8 hours was a bit nerve-wracking.
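The two options can be compared before committing to another run. This is a rough sketch that assumes the same batch size of 1, gradient accumulation of 4, and about 28 seconds per step carry over unchanged. The function names are hypothetical:

```python
import math


def steps_per_epoch(num_images, batch_size=1, grad_accum=4):
    """Optimizer steps in one pass over the dataset."""
    return math.ceil(num_images / (batch_size * grad_accum))


def epochs_for_budget(target_steps, num_images):
    """Epochs needed to reach a step budget with a given dataset size."""
    return math.ceil(target_steps / steps_per_epoch(num_images))


def hours_at(steps, seconds_per_step=28):
    """Projected wall-clock hours, assuming the per-step time of this run."""
    return steps * seconds_per_step / 3600


# Option A: 30 images, still 100 epochs.
steps_a = steps_per_epoch(30) * 100
print(steps_a, round(hours_at(steps_a), 1))

# Option B: keep 40 images, cap training at 300 or 400 steps.
for budget in (300, 400):
    print(budget, epochs_for_budget(budget, 40), round(hours_at(budget), 1))
```

Under these assumptions, dropping to 30 images while keeping 100 epochs still leaves 800 steps, so capping the step count is the setting that shortens the run the most.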

Next Step: There are about 2 hours and 30 minutes left until training is complete. As soon as the training ends, I will attach the generated LoRA adapter to FLUX and share the actual performance test results. Stay tuned for the next post.
