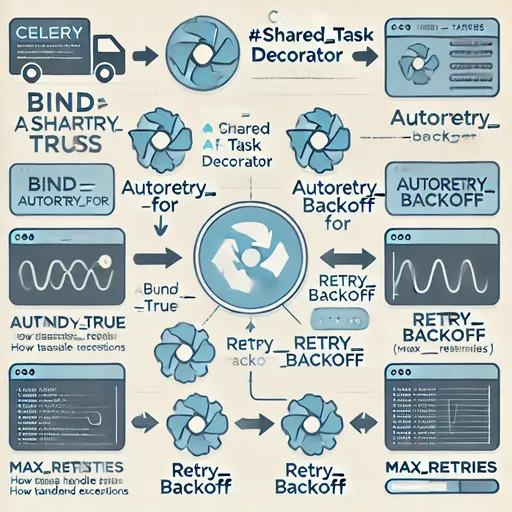

Celery is a powerful framework for asynchronous task processing. Tasks are defined with the @shared_task decorator, and options such as bind, autoretry_for, retry_backoff, and max_retries can significantly improve task reliability and automate error handling.

In this article, we will explore how each option works and how to use it, along with common points of confusion and how to resolve them.

1. bind=True

Definition

bind=True makes Celery pass the task instance as the first argument, so the function can use self. This provides access to the task's state (for example self.request), methods such as self.retry(), and other attributes.

Key Features

- Access to Task State: You can access the task ID, request information, etc., to monitor or log the state.

- Explicit Retry Logic: Implement retry logic manually through the self.retry() method.

Example

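    from celery import shared_task

    @shared_task(bind=True)
    def my_task(self, some_arg):
        print(f"Task ID: {self.request.id}")  # Print task ID
        # Unconditional here for illustration only; normally called inside an except block.
        self.retry()  # Retry the task

2. autoretry_for=(ExceptionType, ...)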

Definition

Configures Celery to automatically retry the task when a specified exception type occurs. It automates error handling and retries without requiring the developer to explicitly call self.retry().

Points to Note

- When Using autoretry_for: Retries happen automatically, so do not also call self.retry() for the same exception.

- Issues with Mixing: Handling the same exception with both autoretry_for and self.retry() is redundant and can lead to confusing retry behavior.
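To make the idea concrete, here is a simplified plain-Python model of what autoretry_for does. It is only a sketch: the `autoretry` decorator and the `flaky` function are hypothetical names, and the loop retries in-process, whereas Celery re-queues the task for a worker to run again. What it does show is the filtering rule: only the listed exception types trigger a retry, and anything else propagates at once.

```python
import functools


def autoretry(autoretry_for=(), max_retries=3):
    """Hypothetical stand-in for the autoretry_for behavior; not Celery's code."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            retries = 0
            while True:
                try:
                    return func(*args, **kwargs)
                except autoretry_for:
                    # Listed exception: retry until the retry budget is used up.
                    if retries >= max_retries:
                        raise
                    retries += 1
                # Any other exception propagates immediately, with no retry.
        return wrapper
    return decorator


calls = {"count": 0}


@autoretry(autoretry_for=(ConnectionError,), max_retries=3)
def flaky():
    # Fails twice with a listed exception, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


result = flaky()
print(result, calls["count"])  # ok 3
```

Because the wrapper already handles the listed exceptions, catching the same exception inside the function and retrying by hand would add nothing.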

Example

Recommended Approach: Using Only autoretry_for

    import requests
    from celery import shared_task

    @shared_task(bind=True, autoretry_for=(requests.RequestException,), retry_backoff=True)
    def my_task(self, url):
        response = requests.get(url)
        response.raise_for_status()  # Raises requests.HTTPError for 4xx/5xx responses

Using Explicit Retry under Specific Conditions (self.retry())

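    import requests
    from celery import shared_task

    # retry_backoff only affects autoretry_for, so the delay is computed manually here.
    @shared_task(bind=True, max_retries=5)
    def my_task(self, url):
        try:
            response = requests.get(url)
            response.raise_for_status()
        except requests.RequestException as e:
            print(f"Retrying due to error: {e}")
            # Explicit retry with an exponential delay: 1 s, 2 s, 4 s, ...
            raise self.retry(exc=e, countdown=2 ** self.request.retries)

3. retry_backoff=True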

Definition

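Enables exponential backoff for automatic retries, which progressively increases the interval between retries. The first retry is delayed by 1 second, the second by 2 seconds, the third by 4 seconds, and so on, up to the cap set by retry_backoff_max (600 seconds by default). Because retry_jitter is enabled by default, each actual delay is a random value between 0 and the computed interval.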

Key Features

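- Reduces load on a struggling service by spacing out retries, which suits transient network failures.

- The backoff can be tuned: passing a number to retry_backoff sets the delay factor, retry_backoff_max caps the delay, and retry_jitter turns the randomization on or off.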
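The schedule can be worked out by hand. The sketch below uses a hypothetical helper, `backoff_delays`, that computes the delay before each retry as the factor times a power of two, capped at a maximum. The default of 600 mirrors Celery's default retry_backoff_max, and jitter is left out so the numbers are deterministic. With jitter enabled, each real delay would fall somewhere between 0 and the value shown.

```python
def backoff_delays(attempts, factor=1, maximum=600):
    """Jitter-free delay in seconds before each of the first `attempts` retries."""
    return [min(maximum, factor * 2 ** n) for n in range(attempts)]


print(backoff_delays(5))            # [1, 2, 4, 8, 16]
print(backoff_delays(4, factor=3))  # [3, 6, 12, 24]
print(backoff_delays(12)[-2:])      # [600, 600]
```

A factor of 3 corresponds to passing retry_backoff=3, and the last line shows the cap taking effect once the computed delay exceeds 600 seconds.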

Example

    import requests
    from celery import shared_task

    @shared_task(bind=True, autoretry_for=(requests.RequestException,), retry_backoff=True)
    def my_task(self):
        # First retry after up to 1 second, second after up to 2 seconds, ... (jitter is on by default)
        raise requests.RequestException("Simulated failure")

4. max_retries

Definition

Limits the maximum number of retries for a task; the default is 3. If the task has still not succeeded after that many retries, it is recorded as a failure.

Key Features

- Prevents infinite retries, thereby limiting server resource consumption.

- Once the retries are exhausted, you can log the failure or run other follow-up logic.

Example

    import requests
    from celery import shared_task

    @shared_task(bind=True, autoretry_for=(requests.RequestException,), retry_backoff=True, max_retries=5)
    def my_task(self):
        # Runs at most 6 times: the first attempt plus 5 retries.
        raise requests.RequestException("Simulated failure")

5. Cautions When Mixing: autoretry_for vs self.retry()

Proper Usage Guidelines

- When Using autoretry_for: Since automated retries are set up, there's no need to explicitly call self.retry(). This allows for simpler code when retrying tasks for specific exceptions.

- When Using self.retry(): Use this when additional work (e.g., logging, condition checking) is needed before retrying. Be sure to avoid redundancy with autoretry_for.

6. Summary of Options

| Option | Description |
|---|---|
| bind=True | Provides access to task state and methods via self. |
| autoretry_for | Automatically retries tasks when specific exceptions occur. |
| retry_backoff | Activates exponential backoff to gradually increase retry intervals. |
| max_retries | Limits the number of retries for task failure conditions. |

7. Conclusion

Celery's @shared_task options are useful for effectively handling task failures and enhancing reliability.

- When using autoretry_for: Take care to avoid redundancy with self.retry().

- If conditional logic or additional work is required, self.retry() can be utilized.

To implement tasks reliably using Celery, combine these options and write optimized code tailored to your situation! 😊
