In the last two posts, we took a deep dive into the history of CPU architectures (x86, x64) and the rise of ARM. Today, let's turn to a company that has recently become one of the hottest topics in this CPU story: NVIDIA.

For a long time, NVIDIA has reigned as the undisputed leader of the GPU (Graphics Processing Unit) market. Recently, however, it has been extending its influence beyond GPUs into the CPU market and reshaping the future of computing. Let's look at why NVIDIA is moving into CPUs and what this means in the age of AI.

1. Wasn't NVIDIA originally a GPU company? What’s the relationship with CPUs?

Yes, that's right. NVIDIA is best known for its GeForce (gaming) and Quadro/RTX (professional) GPUs and, more recently, its A- and H-series data center GPUs (such as the A100 and H100). GPUs approach computation differently from CPUs:

-

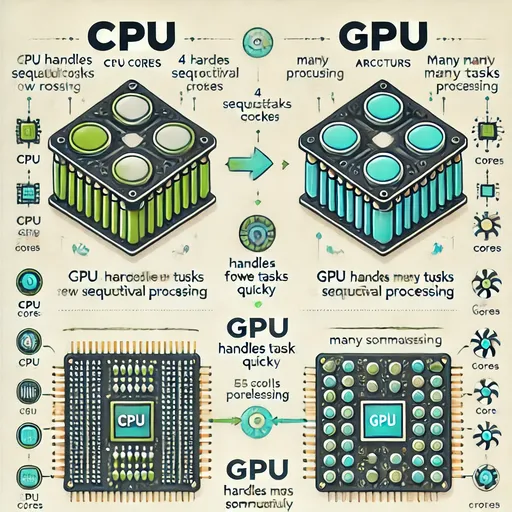

CPU: Specializes in quickly and efficiently handling complex sequential single tasks. (e.g., running operating systems, document work, web browsing)

- GPU: Runs thousands of small cores in parallel to handle simple but highly repetitive, large-scale computations very efficiently (e.g., graphics rendering, physics simulations, and AI training/inference).
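The difference between these two kinds of work can be sketched in a few lines of Python. This is an analogy, not GPU code: `running_balance` is a hypothetical task in which every step depends on the previous result, so it must run in order, while `brighten_image` applies the same tiny operation to every element independently, which is the pattern GPUs are built for. The thread pool only imitates that pattern (and in CPython the GIL keeps it from running truly in parallel); a real GPU runs thousands of such threads in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def running_balance(transactions: list[int]) -> list[int]:
    """Sequential work: each step needs the previous step's result,
    so the steps cannot be spread across many cores."""
    balance = 0
    history = []
    for amount in transactions:
        balance += amount  # depends on the balance from the prior iteration
        history.append(balance)
    return history


def brighten(pixel: int) -> int:
    """One tiny, independent unit of work (hypothetical image filter)."""
    return min(pixel + 40, 255)


def brighten_image(pixels: list[int], workers: int = 4) -> list[int]:
    """Data-parallel work: no pixel depends on any other, so the same
    function can be applied to all of them concurrently.
    The thread pool only mimics this; a GPU runs thousands of threads in hardware."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(brighten, pixels))  # map() preserves input order


if __name__ == "__main__":
    print(running_balance([10, -3, 5]))
    print(brighten_image([0, 100, 250]))
```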

For a long time, CPUs and GPUs played complementary roles in computer systems. The CPU served as the 'brain' of the whole system, directing operations, while the GPU acted as a 'specialist' for graphics or specific parallel computations.

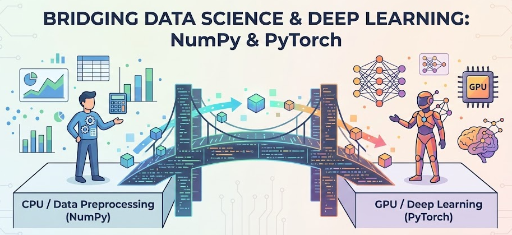

As the AI era dawned, however, this relationship began to change. Training on vast amounts of data and computing through large neural networks would have been practically impossible without the parallel processing power of GPUs. NVIDIA recognized this potential early and developed CUDA, a parallel computing platform that opened the way for GPUs to be used not only as graphics cards but as general-purpose computing devices.
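CUDA's central idea is that a programmer writes one function, a *kernel*, and launches it across a grid of thread blocks, with each thread using its block and thread indices to pick the element it works on. The Python sketch below simulates that indexing scheme for a SAXPY (`out = a*x + y`) kernel. It is only a model: the nested loops run one simulated thread after another, whereas a GPU executes them concurrently, and the names `saxpy_kernel` and `launch` are hypothetical, not part of any CUDA API.

```python
def saxpy_kernel(block_idx, block_dim, thread_idx, n, a, x, y, out):
    """Body executed by ONE simulated GPU thread."""
    # Same formula as the CUDA idiom: i = blockIdx.x * blockDim.x + threadIdx.x
    i = block_idx * block_dim + thread_idx
    if i < n:  # bounds guard: the last block may have more threads than elements left
        out[i] = a * x[i] + y[i]


def launch(n, a, x, y, threads_per_block=256):
    """Simulate a 1-D kernel launch covering n elements."""
    # Round up so every element gets a thread (ceiling division).
    blocks = (n + threads_per_block - 1) // threads_per_block
    out = [0.0] * n
    # On a real GPU, every (block, thread) pair below would run in parallel.
    for b in range(blocks):
        for t in range(threads_per_block):
            saxpy_kernel(b, threads_per_block, t, n, a, x, y, out)
    return out, blocks


if __name__ == "__main__":
    result, blocks = launch(5, 2.0, [1, 2, 3, 4, 5], [1, 1, 1, 1, 1], threads_per_block=2)
    print(blocks, result)
```

With 5 elements and 2 threads per block, the launch needs 3 blocks (6 threads); the sixth thread falls past the end of the array and is skipped by the `i < n` guard, which is why real CUDA kernels almost always include that check.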

2. The Limits of GPUs and the Need for CPUs: The Inefficiency of 'Data Movement'

GPUs are clearly critical to AI computation, but moving data between the CPU and the GPU remains a bottleneck.

- Data Transfer between CPU ↔ GPU: In AI training and inference, the CPU prepares the data, the GPU processes it, and the results are sent back to the CPU, over and over. This data movement costs more time and power than one might expect. Because the CPU and GPU are physically separate chips, the interface connecting them, typically PCIe (PCI Express), sometimes cannot keep up with the GPU's enormous processing speed.
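A rough way to see why this loop hurts is to compare transfer time with compute time for a single iteration. The sketch below is a back-of-the-envelope model with made-up numbers: the payload sizes, the 20 ms of GPU compute, and the 64 GB/s link speed (roughly the per-direction rate of a PCIe 5.0 x16 link) are illustrative assumptions, and the model assumes copying and computing never overlap.

```python
from dataclasses import dataclass


@dataclass
class Iteration:
    """One pass of the CPU -> GPU -> CPU loop. All values are hypothetical inputs."""
    bytes_to_gpu: float      # data the CPU prepares and sends to the GPU
    bytes_to_cpu: float      # results the GPU sends back
    gpu_compute_s: float     # time the GPU spends actually computing
    link_bytes_per_s: float  # bandwidth of the CPU-GPU link in each direction

    def transfer_s(self) -> float:
        # Copies in and out are assumed to happen one after the other.
        return (self.bytes_to_gpu + self.bytes_to_cpu) / self.link_bytes_per_s

    def total_s(self) -> float:
        # No overlap: copy in, compute, copy out, strictly in sequence.
        return self.transfer_s() + self.gpu_compute_s

    def transfer_fraction(self) -> float:
        return self.transfer_s() / self.total_s()


if __name__ == "__main__":
    step = Iteration(bytes_to_gpu=2e9, bytes_to_cpu=0.1e9,
                     gpu_compute_s=0.020, link_bytes_per_s=64e9)
    print(f"transfer: {step.transfer_s() * 1000:.1f} ms, "
          f"compute: {step.gpu_compute_s * 1000:.1f} ms, "
          f"share spent moving data: {step.transfer_fraction():.0%}")
```

With these particular numbers, more time goes into moving data than into computing on it. Real frameworks soften this by overlapping copies with computation (for example, with CUDA streams and asynchronous memory copies), but the link bandwidth still sets a ceiling.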

NVIDIA came to see the need for its own CPU, one designed to sit alongside the GPU, to overcome these limits and deliver a computing solution optimized for the AI era. No matter how capable the GPU is, overall system efficiency will suffer without a CPU that can support it effectively and feed it data quickly.

3. The Arrival of NVIDIA's CPU: The ARM-Based 'Grace' Processor

Instead of developing x86 CPUs, NVIDIA turned to the ARM architecture, which offers excellent power efficiency and room for customization. In 2021, it announced a data center CPU called 'NVIDIA Grace', marking its official entry into the CPU market.

- Based on ARM Neoverse: The Grace CPU is built on ARM's Neoverse server cores (specifically Neoverse V2). This reflects NVIDIA's strategy of entering the market quickly by leveraging proven ARM technology rather than designing its own CPU cores from scratch.

-

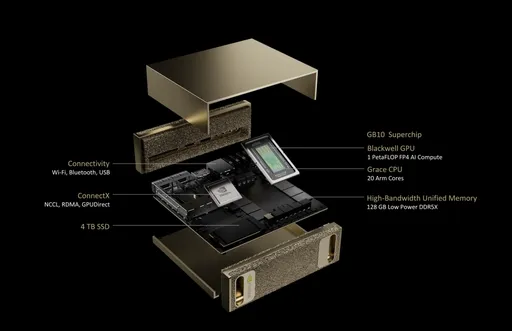

Core of the 'Superchip' Strategy: The true strength of Grace CPU goes beyond its standalone performance; it is revealed when combined with NVIDIA's GPU in a 'superchip' form.

- Grace Hopper Superchip: Connects the Grace CPU to the powerful H100 (Hopper) GPU through a high-speed interconnect called NVLink-C2C. With 900 GB/s of total bandwidth, roughly seven times that of a PCIe Gen5 x16 link, this connection greatly reduces the data bottleneck between CPU and GPU.

- Grace Blackwell Superchip: Combines the Grace CPU with NVIDIA's latest Blackwell GPU architecture (the GB200 pairs one Grace CPU with two Blackwell GPUs) and is designed for next-generation AI and HPC (high-performance computing) workloads.


This 'superchip' strategy reflects NVIDIA's vision: instead of pairing NVIDIA GPUs with conventional x86 CPUs, supply its own CPU optimized for GPU computing and build a far more efficient and powerful integrated system.
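To put the NVLink-C2C bandwidth in perspective, the sketch below derives the raw PCIe 5.0 x16 rate from its link parameters (32 GT/s per lane, 16 lanes, 128b/130b encoding) and compares how long a hypothetical 10 GB payload would take to cross each link. Two assumptions are worth flagging: the 900 GB/s NVLink-C2C figure is treated as total bandwidth split evenly into 450 GB/s per direction, and PCIe packet/protocol overhead is ignored, so real-world PCIe throughput would be somewhat lower.

```python
GB = 1e9  # decimal gigabytes, as used in vendor bandwidth figures


def pcie_gen5_x16_per_direction() -> float:
    """Raw PCIe 5.0 x16 rate in bytes/s per direction, after 128b/130b
    encoding but before protocol overhead."""
    transfers_per_s = 32e9  # 32 GT/s per lane
    lanes = 16
    encoding_efficiency = 128 / 130
    return transfers_per_s * lanes * encoding_efficiency / 8  # bits -> bytes


# Assumption: 900 GB/s total NVLink-C2C bandwidth, split evenly per direction.
NVLINK_C2C_PER_DIRECTION = 450 * GB


def transfer_seconds(payload_bytes: float, bytes_per_s: float) -> float:
    """Idealized one-way transfer time at a constant link rate."""
    return payload_bytes / bytes_per_s


if __name__ == "__main__":
    payload = 10 * GB  # hypothetical batch moved from CPU memory to the GPU
    pcie = pcie_gen5_x16_per_direction()
    for name, bw in [("PCIe 5.0 x16", pcie), ("NVLink-C2C", NVLINK_C2C_PER_DIRECTION)]:
        ms = transfer_seconds(payload, bw) * 1000
        print(f"{name:13s} {bw / GB:6.1f} GB/s -> {ms:6.1f} ms")
    print(f"ratio: {NVLINK_C2C_PER_DIRECTION / pcie:.1f}x")
```

Under these assumptions the two links differ by a factor of a little over 7, which is the kind of gap the superchip design is meant to exploit.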

4. The Shift in the CPU Market and Future Outlook

The arrival of NVIDIA's Grace CPU has created a new competitive landscape in a CPU market that was previously divided between x86 (Intel, AMD) and ARM (Qualcomm, Apple, etc.).

- Optimization for the AI Era: Rather than targeting the general-purpose CPU market, NVIDIA is focusing on data center CPUs specialized for AI and HPC workloads, a strategy aimed at the explosive growth in global demand for AI computing.

- Competition and Cooperation: Intel and AMD compete with NVIDIA in the AI accelerator market, yet they also need their x86 CPUs to work well with NVIDIA GPUs, which makes the relationship complicated. There are even rumors that NVIDIA might use Intel's foundry services, hinting at a mix of collaboration and competition as these companies jockey for technology leadership and market position.

- The Importance of Integrated Solutions: Going forward, how well CPUs, GPUs, memory, and interconnects work together to deliver optimized performance for specific workloads will matter even more than the performance of any single component. NVIDIA's 'superchip' strategy is a prime example of this kind of integrated solution.

In Conclusion

Today, we explored why NVIDIA, the giant of the GPU market, has entered the CPU market, and how it aims to reshape the computing paradigm of the AI era with the 'Grace' CPU and its 'superchip' strategy. CPUs and GPUs, long complementary partners, are now moving toward a future of much tighter integration.

It remains to be seen what results NVIDIA's CPU challenge will bring, how the established x86 giants will respond, and, ultimately, what the future of AI computing will look like. We'll be back with another fascinating tech story in the next post. Thank you!
