What’s the Difference between RTX 4090 and DGX Spark – A Comparative Analysis for Companies Considering On-Premise AI Infrastructure

If you're a company or developer planning to build AI infrastructure, you may have wondered at some point: "Do I really need a DGX Spark when an RTX 4090 is enough?"

This article is a cautious exploration of that question. I recently reserved a DGX Spark and am waiting for delivery, so here I summarize the limitations I've run into and what I expect, based on my experience with an RTX 4090-based environment.

GPU Server vs High-Performance Workstation – Which Infrastructure is More Suitable for AI Optimization?

| Category | RTX 4090 Workstation | DGX Spark |

|---|---|---|

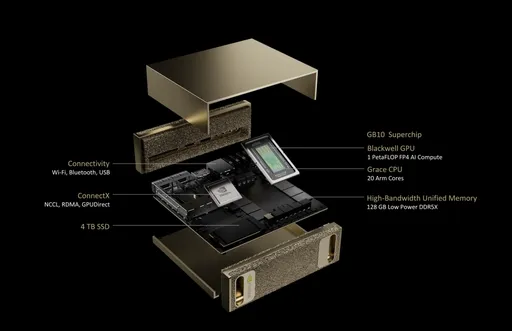

| GPU Architecture | Ada Lovelace | NVIDIA Blackwell |

| CPU | External CPU | 20-core Arm (10×Cortex-X925 + 10×Cortex-A725) |

| Memory | 24GB GDDR6X | 128GB LPDDR5x (Unified Memory) |

| Memory Bandwidth | 1,008 GB/s | 273 GB/s |

| Storage | User-configured SSD | 1TB or 4TB NVMe M.2 (Encryption Supported) |

| Network | Optional | 10GbE + ConnectX-7 Smart NIC |

| Power Consumption | Approximately 450W–600W | 170W |

| System Size | ATX or Tower | 150 x 150 x 50.5 mm (1.2kg) |

| Operating System | User-installed Windows or Linux | NVIDIA DGX Base OS (Ubuntu-based) |

▶ Key Difference: Beyond raw performance, the core question is "Was it designed for AI?"
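The two memory rows in the table pull in opposite directions: the RTX 4090 has roughly 3.7× the bandwidth, while DGX Spark has over 5× the capacity. A minimal back-of-the-envelope sketch of that trade-off, assuming a dense model at batch size 1 where each generated token reads every weight once (so bandwidth divided by weight size gives a rough ceiling on tokens per second), and a hypothetical 1.2× overhead factor for KV cache and activations:

```python
# Rough sizing sketch: does a model's weight footprint fit in device memory,
# and what is the memory-bandwidth ceiling on single-stream decode speed?
# Assumptions (simplifications, not measurements):
#   - weights dominate memory; KV cache/activations are a flat overhead factor
#   - each generated token reads every weight once (dense model, batch size 1)

DEVICES = {
    # name: (memory in GB, memory bandwidth in GB/s), figures from the spec table
    "RTX 4090": (24, 1008),
    "DGX Spark": (128, 273),
}

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_gb(params_billion: float, precision: str) -> float:
    """Approximate weight footprint in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def estimate(params_billion: float, precision: str, overhead: float = 1.2) -> dict:
    """Return {device: (fits, tokens_per_s_ceiling or None)}."""
    weights = weight_gb(params_billion, precision)
    results = {}
    for name, (mem_gb, bw_gbs) in DEVICES.items():
        fits = weights * overhead <= mem_gb
        # Upper bound only: real throughput is lower (kernel efficiency, KV-cache reads).
        ceiling = bw_gbs / weights if fits else None
        results[name] = (fits, ceiling)
    return results


if __name__ == "__main__":
    for params, prec in [(8, "fp16"), (70, "int4"), (70, "fp8")]:
        for name, (fits, tps) in estimate(params, prec).items():
            status = f"~{tps:.0f} tok/s ceiling" if fits else "does not fit"
            print(f"{params}B {prec:>4} on {name:9}: {status}")
```

Under these assumptions, an 8B model in FP16 fits on both machines with the 4090's ceiling about 3.7× higher, while a 70B model at 4-bit fits only on DGX Spark. Treat the numbers as ceilings for comparison, not as benchmark predictions.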

Key Differences in the Industry: Optimizing On-Premise AI Infrastructure

Companies adopting AI infrastructure look beyond raw computational speed. In practice, the following industry-specific factors become the real selection criteria:

- AI Data Security and Data Sovereignty: Essential for processing data that cannot be sent to the cloud

- Edge AI Deployment: Surge in demand for private GPU servers in manufacturing/finance/healthcare sectors

- AI Inference Stability: Capable of 24/7 inference operations with server-grade power design

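- Cluster Integration: The GB10 Grace Blackwell Superchip combines the Grace CPU and Blackwell GPU in one package, enabling high-density computing in a single machine, and the ConnectX-7 NIC allows two units to be linked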

In this context, DGX Spark is not just a piece of GPU hardware but a private AI infrastructure solution.
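NVIDIA's announcement describes a single DGX Spark handling models of up to about 200 billion parameters, and two units linked over ConnectX-7 handling up to about 405 billion. The capacity arithmetic behind that can be sketched as follows, assuming weights shard evenly across units and a hypothetical 1.2× overhead factor reserving room for KV cache, activations, and the OS (which shares the unified memory):

```python
import math

# Hypothetical sizing helper: how many 128 GB DGX Spark units would be needed
# to hold a model's weights. Assumes even sharding and a flat overhead factor;
# it ignores interconnect cost and framework-specific memory use.
UNIT_MEMORY_GB = 128
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "int4": 0.5}


def units_needed(params_billion: float, precision: str, overhead: float = 1.2) -> int:
    """Smallest number of units whose combined memory covers weights * overhead."""
    required_gb = params_billion * BYTES_PER_PARAM[precision] * overhead
    return math.ceil(required_gb / UNIT_MEMORY_GB)


if __name__ == "__main__":
    for params, prec in [(70, "fp8"), (200, "int4"), (405, "int4"), (405, "fp8")]:
        print(f"{params}B {prec}: {units_needed(params, prec)} unit(s)")
```

With 4-bit weights, a 200B model fits in one unit and a 405B model needs two, which is consistent with the figures NVIDIA cites; at FP8 the same 405B model would need more units than a two-unit link provides.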

Total Cost of Ownership (TCO) and Investment Efficiency – What is the ROI of On-Premise AI Infrastructure?

| Comparison Item | RTX 4090 Single System | DGX Spark |
|---|---|---|
| Initial Equipment Price | Approximately $3,500 ~ $5,000 | $4,000 (according to NVIDIA's official announcement) |
| Operating Costs | Self-managed, including power and heat | Lower power and maintenance costs thanks to the Arm-based low-power design |
| Investment Target | Personal or small-scale experiments | Corporate model training/inference platform |

▶ Prices are similar, and DGX Spark may even be cheaper. Once operational efficiency and system integration are factored in, it looks significantly more competitive on long-term total cost of ownership (TCO).
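The power figures from the spec table can be turned into a rough operating-cost comparison. A minimal sketch, assuming 24/7 operation at the listed power draw and a hypothetical electricity price of $0.15/kWh; cooling, idle periods, and utilization are ignored:

```python
# Rough annual electricity cost for 24/7 operation at a constant power draw.
# The price per kWh is a hypothetical placeholder; substitute your local rate.

HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(watts: float, price_per_kwh: float) -> float:
    """Energy cost for one year of continuous operation at `watts`."""
    kwh = watts / 1000 * HOURS_PER_YEAR
    return kwh * price_per_kwh


if __name__ == "__main__":
    PRICE = 0.15  # USD per kWh, hypothetical
    systems = [
        ("RTX 4090 system (low)", 450),
        ("RTX 4090 system (high)", 600),
        ("DGX Spark", 170),
    ]
    for label, watts in systems:
        print(f"{label:24}: ${annual_energy_cost(watts, PRICE):,.0f} per year")
```

At that rate, the sketch gives roughly $591 to $788 per year for the RTX 4090 range versus about $223 for DGX Spark. These are upper bounds at full draw, so actual figures depend heavily on how busy each machine is.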

Individual Developers? Startups? Who Is More Suitable?

- Cases Where the RTX 4090 Is More Suitable:

  - Small models, with a focus on personal learning and experimentation

  - Developers who also make use of cloud services

  - Cost-sensitive freelancers and researchers

- Cases Where DGX Spark Is More Suitable:

  - Enterprises that need in-house LLM deployment and tuning along with data sovereignty protection

  - Industry users looking for better ROI than the cloud

  - Teams that want to operate 24/7 inference services themselves

Conclusion: Currently "Pending", but the Selection Criteria Are Clear

The RTX 4090 is still an excellent GPU. However, I am currently waiting in anticipation of the “standardization of AI servers” that DGX Spark is expected to bring.

More important than raw speed are two questions:

- What system to base AI operations on

- How to build AI infrastructure safely, sustainably, and cost-effectively

I look forward to sharing my own hands-on experience once the unit arrives. Until then, I hope this comparison serves as a useful basis for making a wise choice.
