Backpropagation: How AI Traces the Culprit of Errors (feat. the Chain Rule)

1. Introduction: The Culprit Is Inside

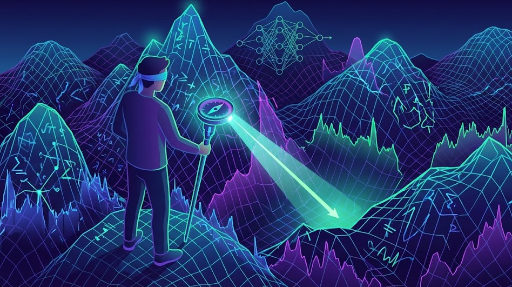

In the previous post we learned that differentiation is a "compass that tells you which direction to turn the parameters to reduce the error."

But deep learning has a fatal flaw: the model is too deep.

The number of layers from input to output can be in the dozens or hundreds.

Scenario: At the output layer, the prediction misses the correct answer by about 10, and the loss spikes.

Question: Who is actually at fault?

It’s impossible to tell whether a parameter near the input caused a butterfly effect, or a parameter just before the output layer made a last‑minute mistake.

Deep learning solves this with a clever trick: each layer blames the one right before it (the Chain Rule).

2. The Chain Rule: A Mathematical Definition of "Passing the Buck"

In a math textbook the chain rule is written as:

$$\frac{dz}{dx} = \frac{dz}{dy} \cdot \frac{dy}{dx}$$
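A tiny worked example, with functions picked only for illustration: suppose $y = 3x$ and $z = y^2$. Then

$$\frac{dz}{dx} = \frac{dz}{dy} \cdot \frac{dy}{dx} = 2y \cdot 3 = 6y = 18x$$

This matches differentiating $z = (3x)^2 = 9x^2$ directly.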

To us developers it looks like dry notation, but from an organizational perspective it’s "distributing responsibility."

Imagine a decision chain: Team Lead (Input) -> Manager (Hidden) -> Director (Output).

- Director (Output) messes up the project (Error occurs).

- The Director refuses to take full blame and says, "The report from the Manager was a mess, so my result is also a mess." ($\frac{dz}{dy}$: how much the result was ruined by the Manager).

- The Manager protests, "No, the Team Lead gave me bad data, so my report is bad too!" ($\frac{dy}{dx}$: how much the report was ruined by the Team Lead).

Thus the overall impact of the Team Lead on the project ($\frac{dz}{dx}$) is calculated as (Director’s blame on Manager) × (Manager’s blame on Team Lead).

That’s the chain rule in action: it links a distant cause (x) to a distant effect (z) by multiplying together the blame at each intermediate step.
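The same buck-passing can be checked numerically. In this sketch, the two "employees" are made-up functions; manager and director are hypothetical names chosen for illustration. The chain-rule product of their local derivatives is compared against a finite-difference estimate of the whole pipeline.

```python
# Hypothetical "org chart": Team Lead (x) -> Manager (y) -> Director (z).
# The functions are invented for illustration; only the chain rule matters.

def manager(x):
    return x ** 2          # y = x^2, so dy/dx = 2x

def director(y):
    return 5 * y + 2       # z = 5y + 2, so dz/dy = 5

def chain_rule_grad(x):
    """dz/dx = (Director's blame on Manager) * (Manager's blame on Team Lead)."""
    dz_dy = 5.0            # local derivative of director() w.r.t. y
    dy_dx = 2.0 * x        # local derivative of manager() w.r.t. x
    return dz_dy * dy_dx

def numerical_grad(x, h=1e-6):
    """Central finite difference of the whole pipeline, as a sanity check."""
    f = lambda t: director(manager(t))
    return (f(x + h) - f(x - h)) / (2 * h)

x = 3.0
print(chain_rule_grad(x))          # 30.0
print(round(numerical_grad(x), 4))
```

Neither function needs to know about the other. Each one only reports its own local derivative, and the multiplication does the rest.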

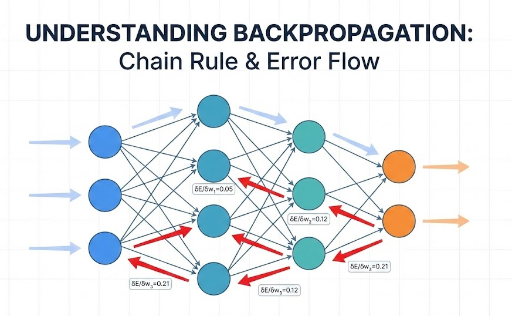

3. Backpropagation: Debugging in Reverse

Backpropagation extends this “passing the buck” process across the entire system.

- Forward Pass: Feed data and produce an output. (The work is done.)

- Loss Calculation: Compare to the correct answer and compute the error. (The crash occurs.)

- Backward Pass: Starting from the output layer, move backward toward the input, telling each layer, "Because of you, the error is this much." (Every layer repeats this line to the one before it.)
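Here is a minimal sketch of those three steps, worked out by hand. It assumes a hypothetical two-layer "network" with one scalar weight per layer and no activation function. The helper names are illustrative. The numbers are chosen so the output misses the target by exactly 10.

```python
# Forward pass -> loss -> backward pass for a made-up two-layer "network"
# with one scalar weight per layer and no activation function.

def forward(x, w1, w2):
    h = w1 * x          # hidden layer
    out = w2 * h        # output layer
    return h, out

def forward_and_backward(x, w1, w2, target):
    h, out = forward(x, w1, w2)            # 1. forward pass
    loss = (out - target) ** 2             # 2. loss calculation (squared error)

    # 3. backward pass: start at the loss and walk toward the input.
    dloss_dout = 2 * (out - target)        # blame on the output
    dloss_dw2 = dloss_dout * h             # out = w2 * h  ->  dout/dw2 = h
    dloss_dh = dloss_dout * w2             # out = w2 * h  ->  dout/dh  = w2
    dloss_dw1 = dloss_dh * x               # h = w1 * x    ->  dh/dw1   = x
    return loss, dloss_dw1, dloss_dw2

loss, g1, g2 = forward_and_backward(x=1.0, w1=2.0, w2=3.0, target=16.0)
print(loss, g1, g2)   # 100.0 -60.0 -40.0
```

The w1 gradient reuses dloss_dh, the blame already computed for the layer after it. That reuse is what keeps backpropagation cheap even for deep networks.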

For developers, this is much like reading a stack trace from the bottom up to find the root cause of a bug.

You look at the final error message (Loss), then the function that called it, then the function that called that one… and so on, as if you were running git blame up the call chain.

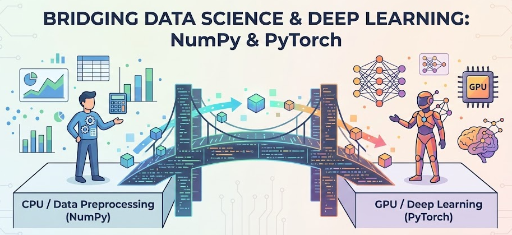

4. PyTorch’s Autograd: The Automated Audit Team

When you build deep learning models from scratch with NumPy, the most painful part is deriving and coding every derivative by hand. (Matrix calculus can make you go bald.)

PyTorch’s strength is that it records this complex “passing the buck” process as a Computational Graph while the forward pass runs, and then handles the backward pass automatically.

- When you call loss.backward(), PyTorch’s Autograd engine springs into action.

- It starts from the end of the graph and visits every node in reverse, applying the chain rule (multiplication).

- It then tags each parameter (weight) with its share of the responsibility (its gradient), something like "your share is 0.003," which PyTorch stores in that parameter’s .grad attribute.
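To see roughly what that bookkeeping involves, here is a toy reverse-mode autograd in pure Python. This is a sketch under simplifying assumptions: scalars only, and only + and *. The Node class is hypothetical, not a PyTorch API, and PyTorch's real engine is far more general.

```python
# A toy reverse-mode autograd illustrating the idea behind an engine like
# PyTorch's Autograd. Node is a hypothetical class, not part of PyTorch.

class Node:
    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0          # "responsibility share", filled in by backward()
        self._parents = parents  # (parent_node, local_derivative) pairs

    def __add__(self, other):
        # d(a+b)/da = 1, d(a+b)/db = 1
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        # d(a*b)/da = b, d(a*b)/db = a
        return Node(self.value * other.value,
                    ((self, other.value), (other, self.value)))

    def backward(self):
        # Order the graph so every node comes after its inputs...
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0                            # d(loss)/d(loss) = 1
        # ...then walk it from the end back toward the inputs.
        for node in reversed(order):
            for parent, local in node._parents:
                parent.grad += node.grad * local   # chain rule: multiply, pass back


w, x, b = Node(2.0), Node(3.0), Node(1.0)
out = w * x + b            # forward pass: 2*3 + 1 = 7
loss = out * out           # squared "error": 49
loss.backward()
print(w.grad, x.grad, b.grad)   # 42.0 28.0 14.0
```

Each node only knows its own local derivatives. The reverse walk multiplies them along every path and adds up the results, which is the chain rule applied mechanically.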

All we need to do is adjust each value in proportion to its tagged gradient (optimizer.step()).
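The update itself can be sketched for plain SGD. As far as I know, torch.optim.SGD with momentum and weight decay left at zero applies p ← p − lr · grad to each parameter. The code below hand-writes that rule for a made-up one-weight model rather than calling PyTorch. grad_of_loss and sgd_step are hypothetical helpers, and the data and learning rate are arbitrary.

```python
# Plain SGD update, sketching what an optimizer step does in the simplest
# case (no momentum, no weight decay). Model, data, and learning rate are
# invented for illustration.

def grad_of_loss(w, x, target):
    # loss = (w * x - target) ** 2  ->  d(loss)/dw = 2 * (w * x - target) * x
    return 2 * (w * x - target) * x

def sgd_step(w, grad, lr):
    # Move against the gradient, scaled by the learning rate.
    return w - lr * grad

w, x, target, lr = 2.0, 1.0, 6.0, 0.1
for _ in range(50):
    g = grad_of_loss(w, x, target)   # "backward": how much w is to blame
    w = sgd_step(w, g, lr)           # "optimizer.step()": fix it a little
print(round(w, 3))                   # close to 6.0
```

In real PyTorch code, gradients accumulate in .grad across backward() calls. That is why training loops usually call optimizer.zero_grad() before each backward pass.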

5. Conclusion: What Happens When Responsibility Vanishes? (Vanishing Gradient)

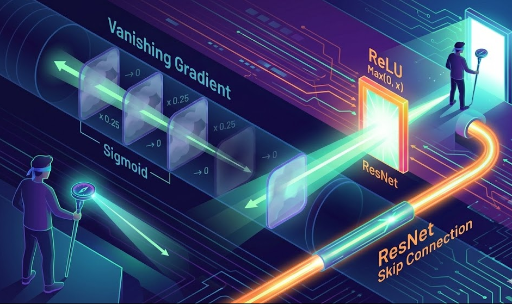

Even this seemingly perfect system has a fatal weakness.

When responsibility is passed back (multiplied) through many layers, it can shrink toward zero.

Each layer says "not my fault" and passes back only a fraction of the blame: × 0.1 × 0.1 × 0.1 …. By the time it reaches the early layers, the signal is so small that it effectively says, "There’s nothing to fix (Gradient ≈ 0)."
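A quick way to feel the problem is to apply a per-layer factor of 0.1, as in the example above, once per layer. This is a deliberately crude model, since real per-layer factors differ by layer and by input. gradient_reaching_first_layer is a hypothetical helper. For reference, the logistic sigmoid's derivative never exceeds 0.25, which is one classic source of such small factors.

```python
# How fast a constant per-layer factor shrinks the blame that reaches
# the first layer. The 0.1 factor is illustrative, not measured.

def gradient_reaching_first_layer(depth, factor=0.1, output_grad=1.0):
    grad = output_grad
    for _ in range(depth):
        grad *= factor      # chain rule: one multiplication per layer
    return grad

for depth in (1, 5, 10, 20):
    print(depth, gradient_reaching_first_layer(depth))
```

At 10 layers the signal is already around 1e-10. Any update built on it barely moves the early weights.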

That’s the infamous vanishing gradient problem. How did modern AI overcome the winter of deep learning? (Hint: ReLU and ResNet.)
