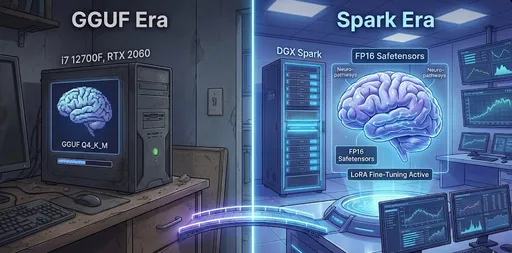

The experience of running LLMs (Large Language Models) locally varies significantly with hardware specifications. In this post, I share the limitations I ran into while running GGUF models on a mid-range PC, and how moving to a high-performance workstation, the Spark (NVIDIA DGX Spark), has transformed my local AI research environment.

1. The GGUF Era: The Aesthetics of Compromise and Efficiency

Before I bought the Spark, my main setup was an i7 12700F CPU, 32GB of RAM, and an RTX 2060 12GB. The only practical option for running a large language model on this setup was GGUF (GPT-Generated Unified Format), the model file format used by llama.cpp.

The Pitfalls of Quantization

At that time, my criteria for selecting models were not based on performance, but on 'operational feasibility'. Whenever I browsed Hugging Face, I habitually searched for the GGUF tag, particularly hunting for the Q4_K_M format.

- Q4_K_M: A quantization scheme that stores the model's weights at roughly 4 bits each, reducing memory usage while keeping quality degradation small.

With the RTX 2060's 12GB of VRAM, I could barely run 7B models, and anything larger had to be offloaded to system RAM at painfully slow speeds. While I enjoyed simply getting it to work, it was clearly a compromised environment.
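To make the VRAM constraint concrete, here is a rough back-of-the-envelope sketch. It counts weights only, and both the 7e9 parameter count and the figure of about 4.8 bits per weight for Q4_K_M are approximations I am assuming for illustration, not exact values.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for model weights only, in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


# Assumed figures: 7e9 parameters for a "7B" model; ~4.8 bits per weight
# is a rough average for Q4_K_M, not an exact number.
SIZES = {
    "FP16": weight_memory_gib(7e9, 16),
    "Q4_K_M (approx.)": weight_memory_gib(7e9, 4.8),
}

if __name__ == "__main__":
    for name, gib in SIZES.items():
        # KV cache, activations, and runtime overhead come on top of this.
        print(f"{name}: {gib:.1f} GiB of weights")
```

Under these assumptions, a 7B model needs roughly 13 GiB for its weights in FP16 but only about 4 GiB at around 4 bits per weight, which is why the quantized file was the one that fit on a 12GB card.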

2. The Limitations and Doubts of Simple Inference

Running LLMs locally through GGUF was intriguing, but I soon hit a fundamental question.

"Is an on-premises environment really necessary if the only goal is inference?"

If the objective is merely Q&A and text generation, high-performance services such as Google's Gemini and OpenAI's GPT models are already available. Paying for API access could be significantly cheaper than investing in a personal workstation. Running a model someone else created, with no training or customization (inference only), weakened the justification for building a local environment.
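The cost argument can be framed as a simple break-even calculation. The sketch below uses the roughly $4,000 hardware figure mentioned later in this post; the API price is a placeholder I made up for illustration, and electricity, depreciation, and the quality gap between models are all ignored.

```python
def break_even_tokens(hardware_cost_usd: float, api_usd_per_million_tokens: float) -> float:
    """Number of API tokens whose cost would equal the hardware price."""
    if api_usd_per_million_tokens <= 0:
        raise ValueError("API price must be positive")
    return hardware_cost_usd / api_usd_per_million_tokens * 1_000_000


# HYPOTHETICAL price: replace with the real rate of the API you would use.
HYPOTHETICAL_API_USD_PER_MILLION = 2.0

if __name__ == "__main__":
    tokens = break_even_tokens(4000, HYPOTHETICAL_API_USD_PER_MILLION)
    print(f"Break-even at about {tokens:,.0f} tokens")
```

At the placeholder rate, the hardware only pays for itself after billions of tokens, which is the heart of the doubt: for inference alone, the numbers rarely favor buying a workstation.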

3. The Introduction of Spark and the World of FP16

After I invested around $4,000 in a Spark machine, my AI research environment changed dramatically. The first thing that shifted was my attitude toward models.

Safetensors and Unquantized Weights

I no longer search for GGUF. Now I download the original FP16 (half-precision) models directly as .safetensors files.
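One quick way to confirm what precision a downloaded file actually contains is to read its header. A .safetensors file starts with an 8-byte little-endian length, followed by a JSON header describing each tensor's dtype and shape. The helper names below are my own, and this is only a minimal sketch using the standard library, not the official safetensors API.

```python
import json
import struct
from collections import Counter


def read_safetensors_header(path: str) -> dict:
    """Return the JSON header of a .safetensors file without loading tensor data."""
    with open(path, "rb") as f:
        # First 8 bytes: unsigned little-endian 64-bit header length.
        (header_len,) = struct.unpack("<Q", f.read(8))
        return json.loads(f.read(header_len).decode("utf-8"))


def dtype_summary(header: dict) -> Counter:
    """Count parameters per dtype string (for example "F16" or "BF16")."""
    counts = Counter()
    for name, info in header.items():
        if name == "__metadata__":  # optional free-form metadata entry
            continue
        n = 1
        for dim in info["shape"]:
            n *= dim
        counts[info["dtype"]] += n
    return counts
```

Calling `dtype_summary(read_safetensors_header(path))` on one shard of a model shows how many parameters are stored in each dtype, so it is easy to check whether a checkpoint really is FP16 before loading it.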

Speed and Quality: The inference quality of the original model without quantization loss is certainly different. Additionally, thanks to the high-performance GPU, the inference speed is also quite comfortable. The real speed differences are striking:

- RTX 2060 environment (Mistral 7B GGUF Q4_K_M): about 10–20 tokens/s

- Spark environment (GPT-OSS-20B FP16): about 80–90 tokens/s

- Spark environment (GPT-OSS-120B FP16): about 40–50 tokens/s
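For anyone who wants to compare their own setup, tokens/s can be measured by timing how long a token stream takes to drain. This is a generic sketch, not the method used for the figures above: `stream` stands for any iterable that yields one item per generated token, and the injectable `clock` exists only to make the function easy to test.

```python
import time
from typing import Callable, Iterable


def tokens_per_second(
    stream: Iterable,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Consume a token stream and return tokens generated per second."""
    start = clock()
    count = sum(1 for _ in stream)  # drains the stream, counting tokens
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return count / elapsed
```

If the stream is lazy, the measured time also includes prompt processing, so results for short generations will look slower than the steady-state rate.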

Similarly priced laptops or PCs cannot reach this speed, so getting this level of inference performance from FP16 models without quantization loss is a game-changer for local AI research.

Flexible Quantization: If necessary, I can quantize a model to FP8 or FP4 myself. Instead of relying on preconverted files, I now control the precision according to my research objectives.
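To show what quantization trades away, here is a toy round trip. Note that it uses symmetric 4-bit integer (absmax) quantization of a single block as a simplified stand-in: FP8 and FP4 are floating-point formats with different encodings, and real quantization tooling is considerably more involved than this sketch.

```python
def quantize_block(values, bits=4):
    """Symmetric absmax quantization of one block to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4 bits
    # Fall back to a scale of 1.0 when the block is all zeros.
    scale = max(abs(v) for v in values) / qmax or 1.0
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return scale, codes


def dequantize_block(scale, codes):
    """Map integer codes back to approximate floating-point values."""
    return [scale * c for c in codes]


if __name__ == "__main__":
    weights = [0.7, -0.3, 0.12, 0.0]
    scale, codes = quantize_block(weights)
    restored = dequantize_block(scale, codes)
    for w, r in zip(weights, restored):
        print(f"{w:+.3f} -> {r:+.3f} (error {abs(w - r):.3f})")
```

Each restored value can be off by up to half the scale, and that rounding error, accumulated over billions of weights, is the quantization loss that unquantized FP16 weights avoid.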

4. The True Value: LoRA Fine-Tuning

The core reason I consider the introduction of Spark to be "a truly great decision" is that I've ventured into the realm of training.

In my previous setup, even inference was challenging, making fine-tuning a distant dream. However, I can now directly apply LoRA (Low-Rank Adaptation), a concept I had only studied theoretically.

- Real-World Experience: Preparing datasets, tuning hyperparameters, and fine-tuning the model provides a depth of experience that is incomparable to simple inference.

- My Own Model: The ability to create a model specialized in a specific domain or in my writing style, rather than a general-purpose model, is the real charm of an on-premises environment.
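For readers who have not met LoRA yet, the idea is to freeze the original weight matrix W and train only two small matrices A and B whose product forms a low-rank update. The sketch below is a plain-Python illustration of that formula, not my actual training code; the layer size (4096 by 4096) and rank (8) in the usage lines are illustrative values I picked.

```python
def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def lora_forward(W, A, B, x, alpha):
    """Compute y = W x + (alpha / r) * B (A x); W is frozen, A and B are trained.

    Shapes: W is d_out x d_in, A is r x d_in, B is d_out x r.
    """
    r = len(A)  # rank = number of rows in A
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def lora_trainable_params(d_out, d_in, r):
    """Parameters in A (r x d_in) plus B (d_out x r)."""
    return r * (d_in + d_out)


if __name__ == "__main__":
    full = 4096 * 4096
    lora = lora_trainable_params(4096, 4096, 8)
    print(f"full matrix: {full:,} params, LoRA adapters: {lora:,} params")
```

With these illustrative sizes, the adapters hold 65,536 trainable parameters against 16,777,216 in the full matrix. B is conventionally initialized to zero, so training starts from exactly the base model's behavior.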

An environment that allows for both inference and training is what justifies the $4,000 investment.

5. The Continued Value of GGUF

Of course, the fact that my environment has changed does not negate the value of GGUF.

The essence of GGUF lies not in ‘absolute performance’, but in accessibility.

It is almost the only format that makes it possible to run large language models without an expensive GPU, using only a CPU or a low-spec GPU. However, because the models are quantized, some precision loss and degradation in inference quality, along with instability on long contexts, cannot be avoided. In other words, GGUF has a dual nature: it offers “a model that anyone can run”, but with limits on quality, speed, and consistency.

Moreover, GGUF remains an excellent format from the perspective of AI democratization. Not all users require fine-tuning, and not everyone can afford high-end equipment. I believe the GGUF ecosystem, which gives light users good inference performance in a standardized format, will become even more popular as personal computers grow more powerful. In a few years, we might see GGUF models running on our tablets or mobile phones.

Conclusion

If the time when I was running Q4_K_M models on the RTX 2060 was a 'tasting', then now, with Spark, I feel like I'm 'cooking'.

The world of local AI extends beyond merely running models; the real pleasure lies in understanding, modifying, and making models your own. If you crave an understanding of a model's structure and the ability to customize it, investing in hardware is the surest way to quench that thirst.
