— How to Properly Prepare Image and Caption Structures

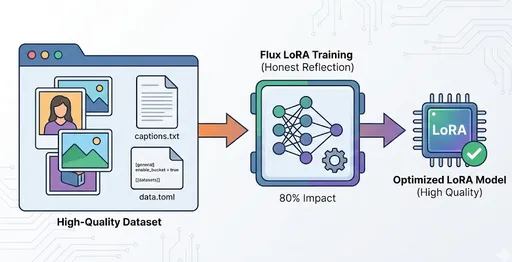

When starting with LoRA fine-tuning for the first time, everyone tends to focus on training options such as model, rank, and learning rate.

However, in reality, it is the Dataset that determines 80% of the outcome.

Good data can compensate for most configuration mistakes, but no setting can rescue poor data.

Recent Flux models in particular are "models that reflect data very honestly," which makes them more sensitive to the quality of the Dataset.

This article summarizes how to prepare images, how to structure captions, and the relationship between data.toml settings and Dataset structure based on practical standards.

1. Why is the Dataset the Most Important in LoRA Fine-Tuning?

LoRA does not change all of the base model's huge set of parameters; instead it adds a “memory patch” that reinforces or adds specific concepts.

In other words, LoRA focuses on learning the following two aspects:

- Commonalities among images
- Core concepts specified in the captions

Thus, if the Dataset is even slightly off, the following problems can occur:

- Faces become distorted
- The style barely shows up unless the LoRA scale is raised to 0.5 or higher
- Results come out as “neither here nor there” because they blend with the original model's features
- Only specific poses or expressions are reinforced in an exaggerated way
- Colors are heavily skewed

The root cause of all these issues is the lack of consistency in data.

2. Basic Rules for Preparing Images

If you follow these rules, you are already halfway there. Since tuning takes considerable time, it's best to prepare the data well in one go.

✔ 2-1) It's advisable to normalize image resolution

Flux-based models seem somewhat insensitive to resolution, but problems arise during fine-tuning when the dataset mixes sizes such as:

- 512×768
- 1024×1536
- 800×800
- 1536×768

If sizes vary like this, the model may perceive the “important areas” as blurry or inconsistent in location.

Recommended specifications:

- 768×1152
- 768×1024
- 1024×1024
- Or unify to one specification that fits the desired final image ratio

What's crucial is the consistency in ratios and sizes.
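A quick way to see whether a dataset mixes sizes is to count how many images share each resolution. The sketch below is only an illustration: `summarize_sizes` and the file names are hypothetical, and the sizes are typed in by hand so the example runs without any image library.

```python
from collections import Counter


def summarize_sizes(sizes):
    """Return the most common (width, height) and the files that deviate from it.

    `sizes` maps a file name to a (width, height) tuple.
    """
    counts = Counter(sizes.values())
    majority_size, _ = counts.most_common(1)[0]
    outliers = sorted(name for name, size in sizes.items() if size != majority_size)
    return majority_size, outliers


# Hypothetical file names and sizes. In a real dataset the sizes would be
# read from the image files, for example with an image library.
sizes = {
    "00001.png": (1024, 1024),
    "00002.png": (1024, 1024),
    "00003.png": (800, 800),
    "00004.png": (1536, 768),
    "00005.png": (1024, 1024),
}

majority, outliers = summarize_sizes(sizes)
print("majority size:", majority)
print("images to resize, crop, or drop:", outliers)
```

Anything listed as an outlier is a candidate for cropping to the majority size or for removal from the dataset.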

✔ 2-2) More isn't better for Datasets; homogeneity is better

Bad example:

- 10 selfies + 5 full body shots + 3 low-light photos + 2 4K high-quality photos + 5 cartoon style images

→ The model gets confused about what it should learn.

Good example:

- Same camera distance
- Same lighting
- Clear concepts like face-centered / upper body-centered
- Unified style (realistic/anime/illustration, etc.)

✔ 2-3) The most important principle is to include only “well-taken photos”

This becomes even more apparent after experimenting multiple times with Flux LoRA.

Common mistake:

“Data is scarce, so let's just include this one too. It might help with learning.”

No.

That one extra image ruins the LoRA.

Often, just one blurry image can throw off the learning.

I can say this with complete certainty:

10 good photos > 40 mixed-up photos

✔ 2-4) The composition of images should be determined by the purpose of creating LoRA

For example:

1) LoRA for reproducing a specific character

- Mainly close-up shots of the face
- Same lighting
- Front + slightly from the side only
- Full body shots are generally less stable

2) LoRA for reproducing a specific fashion style

- Same outfit set
- Images emphasizing colors and textures
- Full body/lower body shots may be included
- Having various poses actually helps

3) LoRA for a specific illustration style

- Including backgrounds
- Images showing brush textures well
- Must define the core elements of the style (line thickness, saturation, contrast, etc.)

3. Writing Captions: The Second Key to Determining LoRA Quality

Captions are just as important as the images themselves.

Flux follows the “text signals” in captions very closely, so structuring captions carefully can greatly change LoRA quality.

✔ 3-1) How should captions be written?

The answer is one of the following two:

A. Minimal keyword tag-based approach

a japanese woman, long hair, smiling, outdoor, daylight

- Simple and stable
- Strong in realistic styles
- Makes it easier for the LoRA to converge

B. Sentence-based description approach

A Japanese woman with long black hair smiles softly in natural daylight, wearing a white knit sweater.

- Promotes more natural learning in Flux or SDXL series
- Suitable for style LoRAs or character LoRAs

Method A is recommended for beginners trying this for the first time, but those comfortable writing descriptions are encouraged to try method B. In my experience, method B has at times been somewhat more effective.

Note: When using the sentence-based approach, be sure to set keep_tokens = 0 and shuffle_caption = false in data.toml.
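As a sketch of the note above, the check below flags settings that conflict with sentence-style captions. `check_sentence_caption_settings` is a hypothetical helper. It assumes the relevant part of data.toml has already been loaded into a plain dict, and that a missing key means the option is off, which may not match your trainer's defaults.

```python
def check_sentence_caption_settings(settings):
    """Return a list of fixes needed before training with sentence captions."""
    fixes = []
    # Shuffling reorders parts of the caption, which would scramble a sentence.
    if settings.get("shuffle_caption", False):
        fixes.append("set shuffle_caption = false")
    # The note recommends keep_tokens = 0 for sentence captions.
    if settings.get("keep_tokens", 0) != 0:
        fixes.append("set keep_tokens = 0")
    return fixes


# Hypothetical values as they might be read from data.toml.
tag_style = {"shuffle_caption": True, "keep_tokens": 1}
sentence_style = {"shuffle_caption": False, "keep_tokens": 0}

print(check_sentence_caption_settings(tag_style))
print(check_sentence_caption_settings(sentence_style))
```

An empty list means the settings already match the recommendation for method B.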

✔ 3-2) Is it okay to have no captions at all?

- Definite answer: always include them
- Reason: you need to tell the model which concepts it should learn
- That said, NVIDIA's documentation includes examples of fine-tuning with only class tokens and no sentence captions, but in my experience including captions is much more effective.

✔ 3-3) Prioritizing class_tokens vs txt captions

Important real-world information:

If txt captions exist → txt takes precedence.

class_tokens play a more auxiliary role.

This means that if both of the following are present for the same image:

class_tokens = "leona_empire asian woman"

caption.txt = "a japanese woman in winter knit"

→ The model will give more weight to caption.txt.

Summary:

- caption.txt is the core
- class_tokens can be seen as the “basic foundation of the overall concept”
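The precedence described above can be pictured as a simple fallback. This sketch models only the behavior described in this article and is not the trainer's actual code; `effective_caption` is a hypothetical name, and the `.txt` extension is assumed.

```python
from pathlib import Path


def effective_caption(image_path, class_tokens, caption_extension=".txt"):
    """Return the text that would describe this image during training.

    If a caption file with the same base name exists, its content wins.
    Otherwise the folder-level class_tokens are used as the fallback.
    """
    caption_file = Path(image_path).with_suffix(caption_extension)
    if caption_file.is_file():
        return caption_file.read_text(encoding="utf-8").strip()
    return class_tokens


# Example call with a hypothetical path:
# effective_caption("dataset/concept1/00001.png", "leona_empire asian woman")
```

If you rely on a trigger word that appears only in class_tokens, this is a reason to repeat it inside each caption file as well.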

4. Example of Dataset Directory Structure

A neat example based on Flux series LoRA:

    dataset/
    └── concept1/
        ├── 00001.png
        ├── 00001.txt
        ├── 00002.png
        ├── 00002.txt
        ├── 00003.png
        ├── 00003.txt
        └── ...

.txt file rules:

- File names must match
- UTF-8 recommended
- Written in one line (no unnecessary line breaks)
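The three rules above are easy to check automatically before a long training run. The following is a minimal sketch, assuming one flat concept folder; `check_caption_pairs` and the set of image extensions are my own choices for illustration.

```python
from pathlib import Path

# Assumed image extensions; adjust to whatever your dataset contains.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def check_caption_pairs(folder):
    """Return a list of readable problems found in one concept folder."""
    problems = []
    for image in sorted(Path(folder).iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        caption = image.with_suffix(".txt")
        # Rule 1: every image needs a caption file with the same base name.
        if not caption.is_file():
            problems.append(f"{image.name}: missing {caption.name}")
            continue
        # Rule 2: the caption should decode as UTF-8.
        try:
            text = caption.read_bytes().decode("utf-8")
        except UnicodeDecodeError:
            problems.append(f"{caption.name}: not valid UTF-8")
            continue
        # Rule 3: exactly one non-empty line of text.
        if len(text.strip().splitlines()) != 1:
            problems.append(f"{caption.name}: expected exactly one line")
    return problems


# Example call with the folder from the structure above:
# for problem in check_caption_pairs("dataset/concept1"):
#     print(problem)
```

An empty result means every image has a matching single-line UTF-8 caption.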

5. Common Caption Mistakes That Ruin LoRA

1) Excessive modifiers

beautiful gorgeous extremely lovely asian woman with super long silky hair

The model gets excessively fixated on specific attributes.

2) Different style descriptions for each photo

- One says “cinematic lighting”
- Another says “bright soft lighting”

→ 100% confusion

3) Emotions or moods that don't match the photos

When there are many photos, copying and pasting captions can leave emotion descriptions that don't match the image. This can ruin everything! For your own peace of mind, check the captions at least twice after finalizing the dataset.

(Even if the person is smiling, a caption containing words like sad or melancholy can change the generated expression.)
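Mistakes 2) and 3) can be caught with a small script before training. The sketch below assumes tag-style captions (method A) separated by commas; the helper names, the sample captions, and the term lists are all hypothetical and would need to be adapted to your own vocabulary.

```python
def tags_of(caption):
    """Split a tag-style caption into a set of lowercase tags."""
    return {tag.strip().lower() for tag in caption.split(",") if tag.strip()}


def files_using(captions, terms):
    """Map each term that occurs in the dataset to the files that use it."""
    usage = {}
    for name in sorted(captions):
        tags = tags_of(captions[name])
        for term in terms:
            if term in tags:
                usage.setdefault(term, []).append(name)
    return usage


def contradictory(captions, group_a, group_b):
    """Return files whose caption has a tag from both groups."""
    result = []
    for name in sorted(captions):
        tags = tags_of(captions[name])
        if tags & group_a and tags & group_b:
            result.append(name)
    return result


# Hypothetical captions keyed by file name.
captions = {
    "00001.txt": "a japanese woman, long hair, smiling, cinematic lighting",
    "00002.txt": "a japanese woman, long hair, smiling, bright soft lighting",
    "00003.txt": "a japanese woman, long hair, smiling, sad, outdoor",
}

lighting = files_using(captions, ["cinematic lighting", "bright soft lighting"])
if len(lighting) > 1:
    print("mixed lighting descriptions:", lighting)

clashes = contradictory(captions, {"smiling"}, {"sad", "melancholy"})
print("captions with clashing emotions:", clashes)
```

A script like this only finds clashes inside the text. Whether the caption matches the photo still has to be checked by eye.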

6. Data Quantity: How Many Images Are Most Efficient?

Based on experience with Flux LoRA:

| Number of images | Quality of results |
|---|---|
| 5–10 | Unstable (large fluctuations) |
| 15–25 | Most efficient |
| 30–50 | Highest quality (only when _consistency in data_ is maintained) |
| 60 or more | Little additional benefit; redundant information increases |

- Removing “bad images” improves quality more than adding data.
- Personally, I started out with around 40 images, but these days I tend to build datasets of 10-20 images.

7. Handling the Dataset When Moving from a First to a Second Round of Fine-Tuning

Here’s the most common question:

“Should I include existing images?”

Answer:

Yes. You must include them at a certain ratio.

Reason:

Since LoRA is not the model itself but a “memory patch,” it will forget existing concepts if you don't show them again.

Recommended ratio:

- 50% existing data
- 50% new data

This way, “memory retention + reflecting new styles” is the most stable.
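One way to build such a mix is to keep every new image and draw a matching number of images from the first-round dataset. This is a minimal sketch under that assumption; `mix_datasets`, the file names, and the fixed seed are illustrative only.

```python
import random


def mix_datasets(existing, new, existing_share=0.5, seed=0):
    """Keep all new images and sample existing ones to reach the target share.

    `existing_share` is the fraction of the final dataset that should come
    from the first-round data (0.5 gives the 50/50 split).
    """
    if not 0 <= existing_share < 1:
        raise ValueError("existing_share must be in [0, 1)")
    wanted = round(len(new) * existing_share / (1 - existing_share))
    wanted = min(wanted, len(existing))  # cannot pick more than we have
    rng = random.Random(seed)            # fixed seed keeps the pick repeatable
    picked = rng.sample(existing, wanted)
    return sorted(picked) + sorted(new)


# Hypothetical file lists: 40 first-round images, 15 new ones.
existing = [f"old_{i:02d}.png" for i in range(40)]
new = [f"new_{i:02d}.png" for i in range(15)]

mixed = mix_datasets(existing, new)
print(len(mixed), "images in the second-round dataset")
```

Random sampling is used here only for brevity. Picking the best first-round images by hand is more in line with the “good photos only” rule above.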

8. Conclusion: Properly Preparing the Dataset Completes 70% of LoRA

The more I go through fine-tuning, the more I feel one thing:

Ultimately, the quality of LoRA is determined by the Dataset.

- Resolution
- Ratio
- Lighting
- Image consistency
- Caption accuracy
- Removal of poor images
- Alignment of data.toml and dataset structure

If you take care of these 7 points, the settings like rank and learning rate become less important than you might think.
