Just a few years ago, saying "I bought a good graphics card (GPU)" meant "I'm going to play high-spec games" or "I've started video editing." The brain of a typical computer was always the CPU, while the GPU was merely a supporting device for displaying graphics beautifully.

However, with the advent of generative AI and deep learning, the landscape has completely changed. High-performance GPUs are now all but essential for running or training AI models on personal computers. This is a large part of why NVIDIA has become one of the world's most valuable companies by market capitalization.

But why has the GPU, a simple laborer, taken center stage in the AI era, overshadowing the CPU, a brilliant PhD? The secret lies in the 'vectors' and 'matrices' we learned in high school math, along with the 'linear algebra' we studied in college.

1. Teaching Computers 'Apple' and 'Banana': Vectors

When we think, "an apple is red and round," or "a banana is yellow and long," how does a computer understand these concepts? Computers only know 0s and 1s, that is, numbers. Thus, computer scientists and mathematicians decided to convert all concepts of the world into a bundle of numbers, or vectors.

For example, let's assume we represent the characteristics of fruits as a 3-dimensional vector [color, shape, sweetness]. (Red=1, Yellow=10 / Round=1, Elongated=10)

- Apple: $[1, 1, 8]$
- Banana: $[10, 10, 9]$
- Green Apple: $[2, 1, 7]$

When we turn data into vectors, something amazing happens. We can now calculate the "similarity".

The coordinates of the apple and green apple are close together in space, while the banana is far away. An AI can conclude, "Apples and green apples are similar!" because of this distance calculation in vector space.
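To make the distance idea concrete, here is a minimal sketch using the three fruit vectors above. It assumes plain Euclidean (straight-line) distance as the similarity measure; the text does not say which measure is meant, and real systems often use others such as cosine similarity. The function name `euclidean_distance` is just an illustrative choice.

```python
import math

# Fruit vectors from the text: [color, shape, sweetness]
apple = [1, 1, 8]
banana = [10, 10, 9]
green_apple = [2, 1, 7]

def euclidean_distance(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

d_green = euclidean_distance(apple, green_apple)   # sqrt(2), about 1.41
d_banana = euclidean_distance(apple, banana)       # sqrt(163), about 12.77

print(f"apple vs green apple: {d_green:.2f}")
print(f"apple vs banana:      {d_banana:.2f}")
```

The smaller number means "more similar": the green apple sits roughly nine times closer to the apple than the banana does.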

2. A Massive Cube Formed by Multiple Vectors: Tensors

However, the data that AI has to process is not just about three fruits.

Think of a color photo that is 1000 pixels wide and 1000 pixels tall. Since each pixel requires three numbers for R, G, and B, a single photo consists of a massive chunk of $1000 \times 1000 \times 3$ numbers.

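- Scalar: A single number (e.g., 5)
- Vector: A one-dimensional array of numbers (e.g., [1, 2])
- Matrix: A two-dimensional table of numbers (like an Excel sheet)
- Tensor: In everyday deep learning usage, a chunk of numbers in three or more dimensions (like a cube). Strictly speaking, scalars, vectors, and matrices are tensors too, just with 0, 1, and 2 dimensions.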
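The four terms above can be sketched with ordinary nested Python lists. This is only an illustration: the helper `shape` is a hypothetical function written for this example (it assumes non-empty, regularly shaped lists), and a tiny 4×4 image stands in for the 1000×1000 photo so that the example stays small.

```python
def shape(x):
    """Return the dimensions of a regular, non-empty nested list as a tuple."""
    dims = []
    while isinstance(x, list):
        dims.append(len(x))
        x = x[0]
    return tuple(dims)

scalar = 5                      # 0 dimensions
vector = [1, 2]                 # 1 dimension
matrix = [[1, 2, 3],
          [4, 5, 6]]            # 2 dimensions: rows x columns
# A tiny 4x4 RGB "photo": height x width x 3 color channels
image = [[[0, 0, 0] for _ in range(4)] for _ in range(4)]

print(shape(scalar))  # ()
print(shape(vector))  # (2,)
print(shape(matrix))  # (2, 3)
print(shape(image))   # (4, 4, 3)

# The 1000 x 1000 color photo from the text holds this many numbers:
numbers_in_photo = 1000 * 1000 * 3
print(numbers_in_photo)  # 3000000
```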

The reason Google named its AI framework 'TensorFlow' is right here. AI is a machine that constantly processes and computes these huge chunks of numbers (tensors).

3. The Essence of AI: Endless Multiplications and Additions (Matrix Operations)

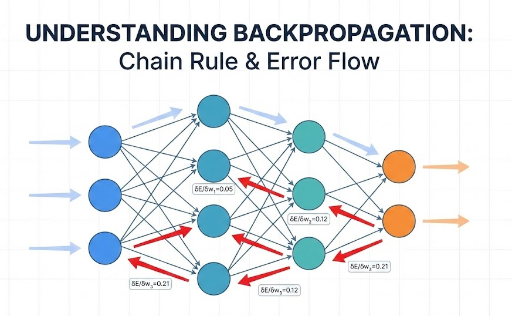

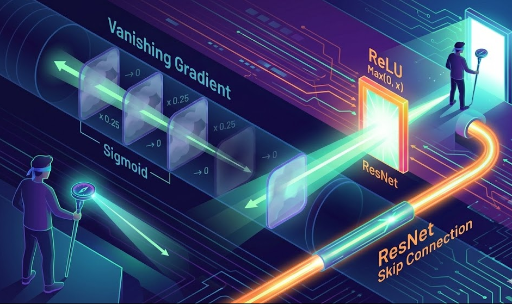

When we think of deep learning, we might assume it entails incredibly complex logical reasoning, but if you peer inside, it is largely a repetition of simple, brute-force matrix multiplications.

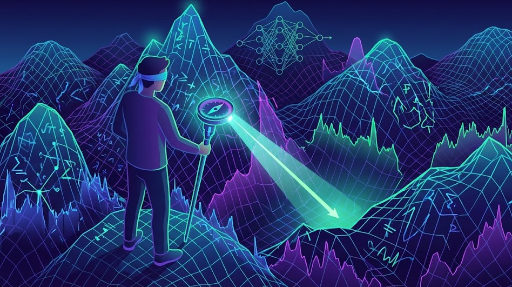

Mathematical formulas like $Y = WX + b$, which multiply input data (X) by weights (W) and add biases (b), are repeated millions or trillions of times.
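As a rough sketch of what a single $Y = WX + b$ step looks like, here it is in plain Python with made-up numbers. The values of `W`, `x`, and `b` and the function name `affine` are assumptions for illustration only; real models use far larger matrices and optimized libraries rather than Python loops.

```python
def affine(W, x, b):
    """Compute y = W x + b for a matrix W, input vector x, and bias vector b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi
            for row, bi in zip(W, b)]

W = [[1, 2, 3],
     [4, 5, 6]]      # weights: 2 outputs, 3 inputs
x = [1, 0, -1]       # input data
b = [0.5, -0.5]      # biases

y = affine(W, x, b)
print(y)  # [-1.5, -2.5]

# Even this toy layer needs rows * columns multiplications.
multiplications = len(W) * len(x)
print(multiplications)  # 6
```

Scale `W` up to thousands of rows and columns and stack many such layers, and the multiplication count quickly reaches the millions and beyond.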

The issue is not the complexity of these calculations but the 'quantity'.

- CPU's Approach: "Okay, let's calculate the first row... Finished? Then the second row..." (Sequential processing)
- AI's Demand: "Do one hundred million multiplications at once!"

Here, the genius mathematician (CPU) gets flustered. No matter how smart it is, it has only a handful of cores and cannot do one hundred million tasks at once.
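The key property that makes "all at once" possible is that each output row of a matrix product can be computed without waiting for any other row. The sketch below illustrates this with a small thread pool. It is only an analogy: Python threads do not deliver GPU-style speedups, and the matrix values are invented for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, x):
    """Multiply one matrix row by the input vector and add up the results."""
    return sum(w * xi for w, xi in zip(row, x))

W = [[1, 2], [3, 4], [5, 6], [7, 8]]
x = [10, 1]

# "CPU's approach": finish one row, then move on to the next.
sequential = [dot(row, x) for row in W]

# "AI's demand": hand every row to a separate worker, since no row
# depends on another. pool.map returns results in the original order.
with ThreadPoolExecutor(max_workers=4) as pool:
    parallel = list(pool.map(lambda row: dot(row, x), W))

print(sequential)              # [12, 34, 56, 78]
print(parallel == sequential)  # True
```

Because the rows are independent, the answer is the same no matter how many workers share the job, which is exactly what lets hardware with thousands of simple cores take over.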

4. The Brush That Colored Pixels Becomes the Brain of AI

The savior that appeared at this point was the GPU (Graphics Processing Unit). Originally, GPUs were designed to draw pictures on screens.

Think about your monitor. An FHD screen has around 2 million pixels. When playing a 3D game, the GPU must calculate for each of these 2 million pixels what color it should be and make adjustments like "it needs to be darker here for the shadow," all simultaneously.
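The arithmetic behind "around 2 million pixels" and the idea of a per-pixel adjustment can be sketched as follows. The `darken` function and the tiny 2×3 frame are hypothetical stand-ins for what a real GPU shader would do across the entire screen.

```python
WIDTH, HEIGHT = 1920, 1080           # FHD resolution
pixels_per_frame = WIDTH * HEIGHT
print(pixels_per_frame)  # 2073600

def darken(pixel, factor):
    """Scale one (R, G, B) pixel's brightness, e.g. to put it in shadow."""
    r, g, b = pixel
    return (int(r * factor), int(g * factor), int(b * factor))

# A tiny 2x3 frame where every pixel starts with the same color.
frame = [[(200, 100, 50) for _ in range(3)] for _ in range(2)]

# Each pixel is processed independently of all the others.
shadowed = [[darken(p, 0.5) for p in row] for row in frame]
print(shadowed[0][0])  # (100, 50, 25)
```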

-

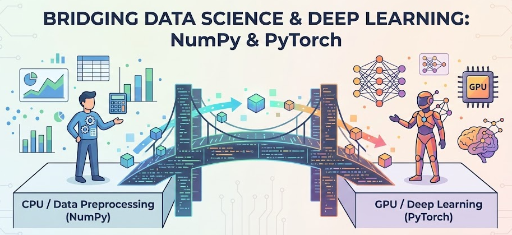

CPU: A few very smart cores (4–16 PhDs) → Optimized for complex logic and sequential tasks

-

GPU: Thousands of cores that can only perform simple calculations (5,000 elementary students) → Optimized for parallel processing of simple repetitive calculations

Developers realized something.

"Wait, whether it’s calculating pixel coordinates to color them simultaneously in 3D games or performing matrix multiplications simultaneously in AI deep learning... isn't it mathematically identical?"

The massive parallel processing capability of the GPU, which evolved for graphics processing, turned out (whether by coincidence or by necessity) to align perfectly with the matrix operations of deep learning. From the GPU's perspective, instead of rendering pixels on a screen, it is now crunching AI data, but the task (matrix operations) remains the same.
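One way to see the overlap is to run a graphics-style calculation and a neural-network-style calculation through the very same function. This is a simplified sketch: the 2D rotation matrix, the weight values, and the name `matvec` are assumptions for illustration, and real graphics pipelines typically use larger matrices plus extra steps.

```python
def matvec(M, v):
    """Multiply matrix M by vector v."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]

# Graphics: rotate the point (1, 0) by 90 degrees counterclockwise.
rotate_90 = [[0, -1],
             [1,  0]]
corner = [1, 0]
print(matvec(rotate_90, corner))  # [0, 1]

# Deep learning: apply a layer's weights to an input (bias omitted).
weights = [[0.5, -1.0],
           [2.0,  0.25]]
features = [4, 2]
print(matvec(weights, features))  # [0.0, 8.5]
```

The hardware does not need to know whether the numbers describe a corner of a 3D model or the activations of a neural network; it only sees multiplications and additions.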

5. Conclusion: Math Classes in School Were Not in Vain

To summarize:

- Computer scientists and mathematicians represented information as vectors and tensors to understand the world mathematically (or to teach machines to understand it).
- Processing these tensors requires linear algebra (matrix operations).
- The GPU, which was already optimized for calculating millions of pixels simultaneously, stepped in.

These three elements came together, making the current AI revolution possible.

Did you ever complain in high school, "What will I ever use vectors and scalars for?" and then struggle through matrix multiplication in college linear algebra? I remember not studying linear algebra in my freshman year because I found it incredibly boring, getting a poor grade, and then retaking it in my junior year to recover. I worked hard the second time, but it was just as boring, at least compared to differential equations. Yet, surprisingly, that cumbersome math turned out two decades later to be the key to creating the smartest AI in human history, which gives me a touch of nostalgia.

Where the theories of mathematicians meet the technologies of hardware engineers, there lies the intersection of GPUs and AI.

🚀 Coming Up Next

Now that we understand the differences between CPUs and GPUs, what is this NPU (Neural Processing Unit) that keeps making the news? Let's explore what the NPU, which is claimed to be even more optimized for AI than the GPU, is all about.
