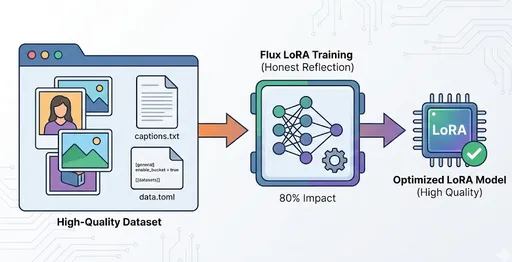

One of the questions I hear most often from people who use image generation AI models, especially with LoRA (Low-Rank Adaptation), is: "When I add new data to fine-tune an existing model, what should I do with the previous data?"

The question is more interesting than it looks, because answering it is a bit like peering into how human learning and memory work. Based on a conversation I had with an acquaintance, this post walks through the core principles and a practical recipe for updating LoRA models.

1. Curiosities about LoRA Learning: Overwriting or Preserving?

The person who asked me put the core concern like this.

Q. If I add 10 new images to an existing LoRA model created from 20 images and run a second training, should I include the original 20 images in that second training? If I don't, won't the model completely forget the original characteristics?

Answering this requires understanding how LoRA actually learns.

2. Principles of LoRA Learning: Statistical Update of Memory

In short, a LoRA does not store its training data as 'memory'. Training keeps overwriting a set of 'statistical weights'.

Updating 'Statistics'

-

Initial Training (20 images): Generates a weight state reflective of the features of 20 images. This weight file numerically encapsulates characteristics such as style, faces, and poses of the 20 images.

-

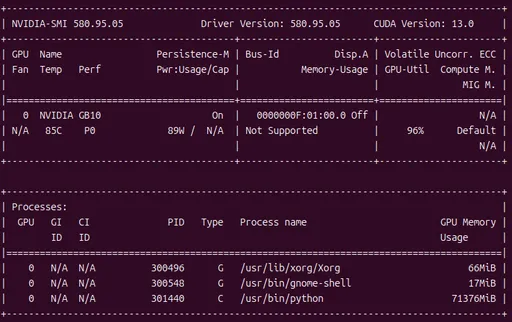

Second Training (adding only 10 images): Starts from the existing weight state and works to push and pull the weights toward the direction of the new 10 images.

At this point, the original 20 images are not stored in the file. Thus, if learning is done with only the 10 new images, the model will start to lean heavily towards the characteristics of those 10 images, and the features of the original 20 images will gradually fade.
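The drift described above can be sketched with a deliberately tiny toy model. This is not a real LoRA: it assumes each image is reduced to a single hypothetical feature value (0.0 for the original 20 images, 1.0 for the new 10) and that the "model" is one weight trained by full-batch gradient descent on a squared error. All names and numbers are illustrative assumptions.

```python
def train(weight, data, lr, steps):
    """Full-batch gradient descent on 0.5 * (weight - x)^2, averaged over data.

    Returns the list of weight values, starting with the initial weight.
    """
    history = [weight]
    for _ in range(steps):
        grad = sum(weight - x for x in data) / len(data)
        weight -= lr * grad
        history.append(weight)
    return history


def mse(weight, data):
    """Mean squared distance between the weight and a set of feature values."""
    return sum((weight - x) ** 2 for x in data) / len(data)


# Hypothetical one-number "features" (assumption for illustration only).
old_images = [0.0] * 20
new_images = [1.0] * 10

# First training: only the original 20 images.
first = train(weight=0.5, data=old_images, lr=0.5, steps=50)
w_after_first = first[-1]

# Second training: starts from the existing weight, sees only the new 10 images.
second = train(weight=w_after_first, data=new_images, lr=0.1, steps=30)
w_after_second = second[-1]

print("weight after first training :", round(w_after_first, 4))
print("weight after second training:", round(w_after_second, 4))
print("error on old images before  :", round(mse(w_after_first, old_images), 4))
print("error on old images after   :", round(mse(w_after_second, old_images), 4))
```

Nothing in the second run refers to `old_images`, so the weight has no reason to stay near them: it slides toward the new data and the error on the original images grows.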

💡 Key Point: Leaving out the original data does not wipe the model's memory all at once, but there is a high chance that the existing characteristics will gradually fade as they are overwhelmed by the statistics of the new data. In particular, a high Learning Rate (LR) or a large number of Steps makes the forgetting noticeably faster.
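To see why LR and the number of steps matter so much, consider a deliberately simplified model (an assumption for illustration, not how a real LoRA is parameterized): a single weight $w$ trained by plain gradient descent with learning rate $\eta$ on a quadratic loss whose minimum $\mu_{\text{new}}$ stands for the new 10 images, starting from the weight $w_0$ left by the first training.

$$
\begin{aligned}
w_{k+1} &= w_k - \eta\,(w_k - \mu_{\text{new}}) \\
w_k - \mu_{\text{new}} &= (1-\eta)^k\,(w_0 - \mu_{\text{new}}), \qquad 0 < \eta < 1
\end{aligned}
$$

In this toy setting, the share of the original state that survives after $k$ steps is $(1-\eta)^k$: roughly 0.74 for $\eta = 0.01,\ k = 30$, but only about 0.04 for $\eta = 0.1,\ k = 30$. Real training with adaptive optimizers and many parameters will not follow this formula exactly, but it gives a feel for why lowering LR and shortening the run both slow forgetting.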

3. Optimal Solution: A Balanced Review Strategy

If the goal is to maintain the consistency and basic mood of the original model while reinforcing new features, the safest and most orthodox approach is to mix the existing and new data and retrain on all 30 images.

Standard Recipe: 20 Images + 10 Images = Retraining 30 Images

| Purpose | Data Composition | Learning Setup (Compared to Initial Training) | Effect |
|---|---|---|---|
| Maintain Existing + Fine-tuning | Existing 20 images + New 10 images | Set LR low (e.g., 1.0 $\rightarrow$ 0.3–0.5), Short Steps (100–300 Steps) | Fine-tune towards new data direction while retaining existing identity. |
| Increase New Proportion | Existing 20 images + New 10 images (e.g., double num_repeats for the new 10 images only) | Low LR, Short Steps | Modify weights to reflect new 10 images more quickly while maintaining existing characteristics. |
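To make the two rows concrete, the sketch below computes how much of each epoch the new images occupy. It assumes that num_repeats simply multiplies how often each image in a subset is seen per epoch. The function name and the dictionary layout are hypothetical, not part of any trainer's API.

```python
def effective_share(subsets):
    """Return each subset's share of the samples seen in one epoch.

    `subsets` maps a label to a tuple (image_count, num_repeats).
    """
    weighted = {name: count * repeats for name, (count, repeats) in subsets.items()}
    total = sum(weighted.values())
    return {name: samples / total for name, samples in weighted.items()}


# Row 1: plain 20 + 10 mix, every image repeated once.
standard = effective_share({"existing": (20, 1), "new": (10, 1)})

# Row 2: same images, but num_repeats doubled for the new 10 only.
boosted = effective_share({"existing": (20, 1), "new": (10, 2)})

for label, shares in (("standard", standard), ("boosted", boosted)):
    summary = ", ".join(f"{name}: {share:.0%}" for name, share in shares.items())
    print(f"{label:>8} -> {summary}")
```

With equal repeats the new images make up one third of each epoch. Doubling their repeats brings them to half, which is why the second recipe shifts the weights toward the new data faster while the original images still act as review material.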

This method is akin to how humans review existing knowledge while learning new knowledge to strengthen long-term memory.

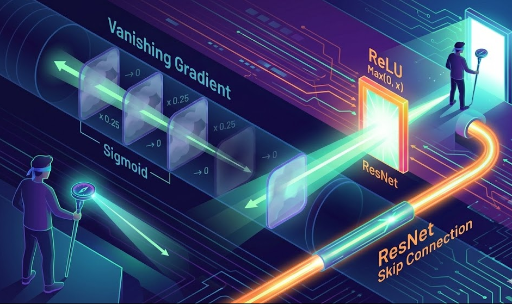

4. Machine Learning Phenomena Similar to Human Memory

All of this is remarkably similar to human learning. Several well-known phenomena in deep learning have close counterparts in how human memory works.

| Phenomenon (Machine Learning Term) | Similarity to Human Learning/Memory | LoRA Application Cases |
|---|---|---|
| Catastrophic Forgetting | Like forgetting an old password when only using a new one. | When trained solely on new data (10 images), the characteristics of the existing data (20 images) are rapidly forgotten. |
| Importance of Review | Long-term memory is enhanced by mixing reviews into study sessions. | To maintain and reinforce balanced features, training should mix existing 20 images with new 10 images. |
| Overfitting | A person who only memorizes exam problems and lacks flexibility in applying knowledge. | Training too long and hard on specific data reduces the ability to apply knowledge to other prompts. |

Ultimately, the forgetting, the need for review, and the search for the right stopping point (the "sweet spot") that we run into in LoRA fine-tuning closely mirror the cognitive science questions of "how to learn, how to forget, and how to review".

The deep learning models we use are inspired by the structure of the human brain, but in the end they are mathematical implementations of statistical approximation. Even so, the fact that such a system shows phenomena resembling human learning is an interesting point that goes beyond engineering into philosophy.

5. Dealing with LoRA Means Balancing

Updating a LoRA model isn't simply about overwriting files.

It’s about understanding the statistical traces of previous data, adjusting the proportions of new data, and tuning the learning intensity (LR and Steps) to subtly adjust the model’s 'memory'. This process requires a sense of balance between maintaining the model's identity and completely transforming it with new features.

Next time you fine-tune a LoRA model, don't just plug in numbers. Ask yourself, "What impact will this have on the model's memory?" That intuition is what separates people who can truly steer a model the way they want!
