After a long 8-hour wait, the training has finally completed.

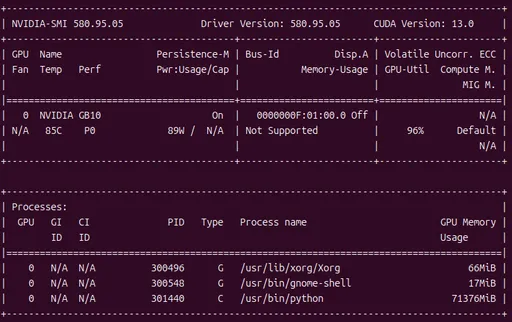

What were the results of fine-tuning the FLUX 1-dev 12B model on DGX Spark (ARM based) at a low power of 90 watts?

Today, I will compare and analyze the LoRA adapters generated at 250, 500, 750, and 1000 steps, and discuss what I've found in the 'Learning Honey Spot'.

1. Test Environment and Conditions

- Base Model: FLUX 1-dev (12B)

- Dataset: 40 images of real people (1024x1024)

- Sampler: dpmpp_2m, euler

- Hardware: DGX Spark (120GB Unified Memory)

- LoRA Checkpoints: 250, 500, 750, 1000 Steps

2. Step-by-Step Result Comparison (The Battle of Steps)

I tested the consistency by generating realistic images featuring the trained individuals.

🥉 Step 250: "Who... are you?" (Underfitting)

- Result: The target's facial features and vibe are similar, but if you ask, "Is this the same person?" you might tilt your head in confusion. The features lack subtle detail.

- Speed: The generation speed was the fastest (less than 100 seconds), but the quality was not satisfactory.

- Diagnosis: Underfitting. The model has not yet absorbed the characteristics of the data sufficiently.

🥈 Step 500: "Oh! It's you!" (Good Fit)

- Result: It is clearly the learned individual. I finally felt that the LoRA was functioning properly.

- Note: Occasionally, when the expression is varied, it looks like a different person, but overall it shows excellent consistency.

- Diagnosis: Entering the optimal learning range. With a 40-image dataset, this point seems usable for practical applications.

🥇 Step 750: "The Aesthetics of Stability" (Stable)

- Result: There isn't much difference from 500 steps, but it feels a bit more stable. The individual's traits are well maintained across various poses.

- Diagnosis: It is an extension of the 500-step result, and if anything, it feels 'matured'.

🏅 Step 1000: "Perfect, but... too perfect for its own good" (Overfitting Risk)

- Result: 100% consistency. Even in blind tests, the output is natural enough that it is hard to distinguish from the original.

- Issue: When prompting for expressions not present in the training data, such as 'angry expression' or 'sexy expression', the individual's consistency breaks down.

- Diagnosis: Borderline overfitting. The model has memorized the training data too well (“This person’s this expression”), which is starting to affect its generalization.

3. Technical Mysteries and Analysis

During the testing process, I discovered two interesting (and somewhat surprising) technical issues.

1) LoRA Files are 5GB?

All four generated LoRA files were 5GB each. LoRA adapters typically range from dozens to hundreds of MB at most.

Analysis:

At first, I wondered, “Did the optimizer state or the entire text encoder get saved together?” However, when a rank-256 LoRA is attached to all layers of a model at the FLUX 12B scale, the LoRA parameters alone can add up to several GB.

That is, the SD1.5-based rule of thumb that 'LoRA is dozens to hundreds of MB' cannot be applied directly.

What I came to realize is that in larger models, the file size is directly tied to the LoRA rank and to how many layers the LoRA is applied to.

In the next iteration, I plan to lower `network_dim` to the 64-128 range to rebalance file size against performance.
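To make the size argument concrete, here is a rough back-of-the-envelope sketch. A LoRA pair on a linear layer with input width `d_in` and output width `d_out` stores `rank * (d_in + d_out)` parameters, so the file size grows linearly with rank. The layer shapes and counts below are made-up placeholders (assumptions), not the actual FLUX layer inventory, and 2 bytes per parameter assumes fp16/bf16 storage.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in one LoRA pair: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


def adapter_size_bytes(layer_shapes, rank: int, bytes_per_param: int = 2) -> int:
    """Total adapter size for a list of (d_in, d_out, count) entries."""
    total_params = sum(
        count * lora_param_count(d_in, d_out, rank)
        for d_in, d_out, count in layer_shapes
    )
    return total_params * bytes_per_param


# Hypothetical layer inventory, for illustration only (not measured from FLUX).
EXAMPLE_LAYERS = [
    (3072, 3072, 200),   # square attention-style projections
    (3072, 12288, 100),  # MLP up-projections
    (12288, 3072, 100),  # MLP down-projections
]

if __name__ == "__main__":
    for rank in (256, 128, 64):
        size_gib = adapter_size_bytes(EXAMPLE_LAYERS, rank) / 2**30
        print(f"rank {rank:>3}: ~{size_gib:.2f} GiB")
```

With these placeholder shapes, rank 256 already lands at roughly 2 GiB, and each halving of the rank halves the file size. The real number depends on which layers the training script actually targets.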

2) Slower Generation Speed (97~130 seconds)

The generation time has increased compared to before applying LoRA.

Analysis:

- Structural Reason: LoRA adds a learned low-rank update ($B \times A$) to the base weights ($W$). When the adapter is applied at runtime instead of being merged into $W$, this increases the computational load.

- Potential Bottleneck: Because of how the training script is designed, the T5XXL text encoder runs with caching and is moved to the CPU. I suspect that a similar CPU↔GPU round trip might occur in the inference pipeline.

However, this part has not yet been confirmed at the code level, so in the next experiment I plan to:

- Force all TEs to be placed on CUDA

- Change the caching strategy

and then see how generation speed and quality change.
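The structural reason can be written out. This is the standard LoRA formulation, not something confirmed from the inference code used here; the scaling factor $\alpha / r$ and the square layer width $d_{in} = d_{out} = 3072$ are assumptions for illustration.

$$
h = W x + \frac{\alpha}{r} B A x, \qquad A \in \mathbb{R}^{r \times d_{in}},\quad B \in \mathbb{R}^{d_{out} \times r}
$$

Applied unmerged, the extra term costs about $r\,(d_{in} + d_{out})$ multiply-adds per token on top of the $d_{in}\, d_{out}$ of the base layer:

$$
\frac{r\,(d_{in} + d_{out})}{d_{in}\, d_{out}} = \frac{256 \times 6144}{3072 \times 3072} = \frac{1}{6} \approx 17\%
$$

If the update is merged ahead of time, $W' = W + \frac{\alpha}{r} B A$ and $h = W' x$, the extra term disappears. Under these assumptions, a slowdown much larger than this estimate would point toward something other than the LoRA math itself, such as the device transfers suspected above.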

4. Conclusion: Finding the 'Honey Spot'

The conclusion drawn from this 8-hour journey is clear. “More training is not always better.”

- Optimal Range: For the 40-image dataset, the honey spot that balances cost and quality seems to be between 400 and 600 steps. Going to 1000 steps may not only waste time but could also impair flexibility.

- The Importance of Data: If only specific expressions are learned, the result is an 'expression robot' that can only make those expressions. Including a variety of angles and expressions in the dataset matters much more than increasing the step count.

- Potential of DGX Spark: Although it took 8 hours and there were setup issues, the fact that a 12B model was fine-tuned at 90W of power is encouraging.

Of course, this honey spot (400-600 step range) comes with quite specific conditions:

“40 images of data / FLUX 1-dev / this LoRA setting”.

Still, what I learned from this experience is to:

- first explore a range of steps proportional to the amount of data

- and then save checkpoints at finer intervals within that range to find the optimal point.
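The two-stage search can be sketched in a few lines. The 10 to 15 steps per image used here is simply 400-600 steps divided by 40 images from this one run; treating it as a linear rule for other dataset sizes is an untested assumption, and the function names are hypothetical.

```python
def step_range_for_dataset(num_images: int,
                           low_per_image: float = 10.0,
                           high_per_image: float = 15.0) -> tuple[int, int]:
    """Coarse stage: scale the candidate step range with dataset size."""
    return round(num_images * low_per_image), round(num_images * high_per_image)


def checkpoint_steps(start: int, stop: int, interval: int) -> list[int]:
    """Fine stage: steps at which to save a checkpoint, endpoints included."""
    return list(range(start, stop + 1, interval))


if __name__ == "__main__":
    low, high = step_range_for_dataset(40)
    print(low, high)                        # 400 600
    print(checkpoint_steps(low, high, 50))  # [400, 450, 500, 550, 600]
```

Each saved checkpoint would then be compared on the same prompts, including expressions that are missing from the training set.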

5. Next Step

The goals for the next experiment have become clear.

- Optimization: Investigate whether to force all text encoders (TEs) onto CUDA, and dig into whether the current behavior is intentional in the FLUX LoRA script (caching the large T5XXL and moving it to the CPU to save VRAM).

- LoRA Diet: Compare size, quality, and consistency when reducing `network_dim` from 256 → 128 → 64.

- Precision Strike: Instead of going up to 1000 steps, finely divide the 400-600 step range (save every 50 steps) to find the best model.

- Introducing Captions to the Training Dataset:

  - Comparing 40 images of the same individual with captions added vs. without captions.

  - Analyzing from the perspective of “expression/pose diversity vs. overfitting.”

Research into low-power, high-efficiency AI continues. I will be back in the next post with a successfully slimmed-down LoRA!
