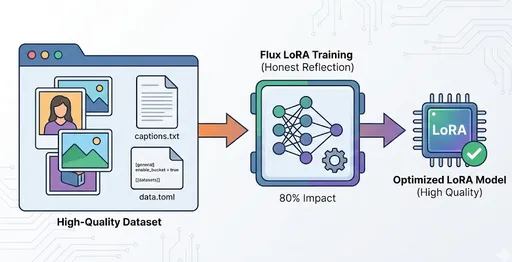

With the rise of large language models (LLMs), demand for fine-tuning models on one's own data has surged. However, training models with billions of parameters requires considerable cost and computational resources.

The core technology that has emerged to solve this problem is LoRA (Low-Rank Adaptation). Today, let's explore what LoRA is, why it is efficient, and the technical principles behind it.

1. What is LoRA (Low-Rank Adaptation)?

LoRA is a technique proposed by a Microsoft research team in 2021. Instead of updating all parameters of a large model, it adapts the model by training only two much smaller low-rank matrices.

While traditional full fine-tuning modifies every weight in the model, LoRA freezes the existing weights and trains only a small set of additional parameters.

To illustrate:

Suppose you need to revise a thick textbook (the base model). Rather than erasing and rewriting every page, you write the important corrections on a post-it (the LoRA adapter) and attach it to the book.

2. Operating Principle (Technical Deep Dive)

The core of LoRA lies in matrix decomposition.

Let $W$ be the weight matrix of a deep learning model. Fine-tuning is the process of learning the change in weights, denoted as $\Delta W$.

$$W_{new} = W + \Delta W$$

LoRA does not learn this $\Delta W$ as a large matrix but decomposes it into the product of two small low-rank matrices $A$ and $B$.

$$\Delta W = B \times A$$

Suppose $W$ is a $d \times d$ matrix and the rank is $r$. Rather than learning a huge $d \times d$ matrix, we only need to learn the much smaller $B$ ($d \times r$) and $A$ ($r \times d$), for a total of $2dr$ parameters instead of $d^2$. (Typically, $r$ is much smaller than $d$, for example $r = 8$ or $16$.)
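To make the shapes concrete, here is a minimal pure-Python sketch of a LoRA-style forward pass. The helper names (`matvec`, `lora_forward`) and the toy sizes are illustrative assumptions, not part of any library. The zero initialization of $B$ follows the original LoRA paper rather than anything stated in this post; it means the adapted layer starts out identical to the frozen one.

```python
import random

def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def lora_forward(W, A, B, x):
    """Compute W x + B (A x) without ever forming the d x d product B A."""
    base = matvec(W, x)                # frozen path through the original weights
    update = matvec(B, matvec(A, x))   # trainable low-rank path: d -> r -> d
    return [b + u for b, u in zip(base, update)]

random.seed(0)
d, r = 6, 2  # toy sizes chosen for illustration only

W = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]     # frozen, d x d
A = [[random.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]  # trainable, r x d
B = [[0.0] * r for _ in range(d)]                                  # trainable, d x r, zero init
x = [random.gauss(0, 1) for _ in range(d)]

y_base = matvec(W, x)
y_lora = lora_forward(W, A, B, x)

# With B still at zero, the adapter contributes nothing yet.
print(y_lora == y_base)
```

During training only `A` and `B` would receive gradient updates; `W` is never modified.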

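As a result, the number of parameters that need to be trained drops sharply. The original LoRA paper reports a reduction of up to about 10,000 times for GPT-3 175B; the exact figure depends on the rank and on which weight matrices receive adapters.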
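For a single square weight matrix, the saving works out to a factor of $d^2 / (2dr) = d / (2r)$. The short sketch below does the arithmetic; the values $d = 4096$ and $r = 8$ are illustrative assumptions, and `lora_param_counts` is a hypothetical helper name.

```python
def lora_param_counts(d, r):
    """Return (full, lora) trainable-parameter counts for one d x d weight matrix."""
    full = d * d          # every entry of Delta W is learned directly
    lora = d * r + r * d  # entries of B (d x r) plus entries of A (r x d)
    return full, lora

full, lora = lora_param_counts(d=4096, r=8)
ratio = full / lora

print(full)   # 16777216
print(lora)   # 65536
print(ratio)  # 256.0
```

Reductions measured over a whole model can be much larger than this per-matrix factor, because adapters are usually attached to only some of the weight matrices while everything else stays frozen.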

3. Key Advantages of LoRA

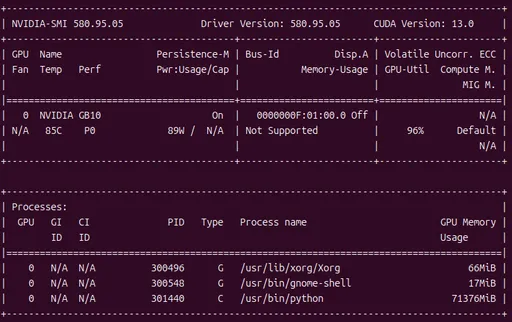

1) Overwhelming Memory Efficiency

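The frozen base model still has to be loaded, but because its weights are never updated, there is no need to store gradients or optimizer states for them; only the small adapter matrices are trained. This dramatically reduces VRAM usage, so fine-tuning large models often becomes feasible on consumer graphics cards (like the RTX 3090 or 4090) rather than expensive A100 GPUs.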
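One way to see where the savings come from is to tally the memory needed for weights, gradients, and optimizer state. The sketch below is a back-of-the-envelope estimate under loud assumptions: 16-bit weights and gradients, an Adam-style optimizer keeping two 32-bit values per trainable parameter, a hypothetical 20M-parameter adapter, and activations and framework overhead ignored entirely. `training_state_gb` is a made-up helper, and real numbers will differ.

```python
def training_state_gb(total_params, trainable_params,
                      weight_bytes=2, grad_bytes=2, optimizer_bytes=8):
    """Rough GB for weights + gradients + optimizer state (activations ignored)."""
    weights = total_params * weight_bytes        # every weight must be resident
    grads = trainable_params * grad_bytes        # gradients only for trainable params
    optim = trainable_params * optimizer_bytes   # optimizer state only for trainable params
    return (weights + grads + optim) / 1e9

total = 8_000_000_000        # an 8B-parameter base model
adapter = 20_000_000         # assumed adapter size, for illustration only

full_ft_gb = training_state_gb(total, trainable_params=total)
lora_ft_gb = training_state_gb(total, trainable_params=adapter)

print(full_ft_gb)  # 96.0
print(lora_ft_gb)  # about 16.2
```

Under these assumptions the weights themselves cost the same in both cases; what shrinks is everything that scales with the number of trainable parameters.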

2) Storage Savings

Fully fine-tuned models can take up dozens of GB, whereas weight files (adapters) trained with LoRA typically range from a few MB to a few hundred MB. This makes it possible to run several services on one base model by simply swapping in different LoRA adapters.

3) Performance Preservation

Despite the drastic reduction in the number of trainable parameters, the original LoRA paper reports performance on par with full fine-tuning on the benchmarks it tested, although results can vary with the task and the chosen rank.

4) No Inference Latency

After training, the product $B \times A$ can be merged back into the original weights $W$. The merged model has exactly the same structure as the original model, so inference speed does not decrease.
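The merge step can be checked on a toy example. The sketch below uses small integer matrices so the arithmetic is exact; `matmul`, `matvec`, and `merge_lora` are hypothetical helper names, and the values are made up for illustration.

```python
def matmul(X, Y):
    """Multiply matrix X (n x k) by matrix Y (k x m), both given as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def matvec(M, x):
    """Multiply matrix M by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def merge_lora(W, A, B):
    """Return W + B A as a single matrix with the same shape as W."""
    BA = matmul(B, A)
    return [[w + u for w, u in zip(w_row, u_row)] for w_row, u_row in zip(W, BA)]

W = [[1, 0, 2],
     [0, 1, 0],
     [3, 0, 1]]      # frozen base weights, 3 x 3
B = [[1], [2], [0]]  # trained adapter factor, 3 x 1
A = [[1, -1, 2]]     # trained adapter factor, 1 x 3
x = [1, 2, 3]

# Unmerged: two extra small multiplications on every forward pass.
unmerged_out = [b + u for b, u in zip(matvec(W, x), matvec(B, matvec(A, x)))]

# Merged: one ordinary matrix-vector product, same cost as the original model.
W_merged = merge_lora(W, A, B)
merged_out = matvec(W_merged, x)

print(unmerged_out)  # [12, 12, 6]
print(merged_out)    # [12, 12, 6]
```

With floating-point weights the two outputs would agree only up to rounding error, not bit for bit.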

4. Real-World Applications

Suppose a user wants to create a chatbot that talks like a pirate.

- Traditional Method: Train the entire Llama 3 (8B model) on pirate data. -> Produces over 16GB of output and takes a long training time.
- LoRA Method: Fix the Llama 3 weights. Train only the LoRA adapter on pirate data. -> Creates an adapter file of about 50MB.
- Deployment: Load the original Llama 3 model plus the 50MB file to provide the service.
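The file sizes in this comparison can be sanity-checked with simple arithmetic. The sketch below assumes 16-bit storage (2 bytes per parameter) and a deliberately simplified adapter layout: 32 layers, four adapted $d \times d$ matrices per layer with $d = 4096$, and rank 16. These settings and the helper names are illustrative assumptions, not the actual Llama 3 projection shapes or any particular training recipe.

```python
def checkpoint_gb(num_params, bytes_per_param=2):
    """Size in GB of a checkpoint that stores every parameter."""
    return num_params * bytes_per_param / 1e9

def adapter_mb(num_layers, matrices_per_layer, d, r, bytes_per_param=2):
    """Size in MB of an adapter file holding one (B, A) pair per adapted matrix."""
    params = num_layers * matrices_per_layer * (d * r + r * d)
    return params * bytes_per_param / 1e6

full_gb = checkpoint_gb(8_000_000_000)
pirate_mb = adapter_mb(num_layers=32, matrices_per_layer=4, d=4096, r=16)

print(full_gb)    # 16.0
print(pirate_mb)  # about 33.55
```

Under these assumptions the adapter comes out at a few tens of MB, the same order of magnitude as the file in the example; a different rank or a different choice of adapted matrices would shift the number.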

5. Conclusion

LoRA is a groundbreaking technology that has significantly lowered the entry barrier for large language models. Now, individual developers and small enterprises can tune high-performance AI models using their specialized data.

If you are considering efficient AI model development, it makes sense to try parameter-efficient fine-tuning (PEFT) methods such as LoRA before defaulting to full fine-tuning.
