The term deep learning is now familiar, but digging into the principles behind it can still feel daunting. Why choose TensorFlow among the many options? First, it is an open source project developed by Google and is widely used around the world. What makes this framework special? TensorFlow is more than just a tool; it is a system built for processing data and for designing and training deep learning models.

Basic Concepts and Structure of TensorFlow

The core concepts of TensorFlow are tensors and computation graphs. They sounded unfamiliar at first, but after running a few examples, I began to understand them. A tensor is the unit that stores data: a multidimensional array, of which a scalar, a vector, and a matrix are the rank 0, 1, and 2 cases. The computation graph defines which operations are applied to these tensors and in what order. Tensors flow through the operations of the graph to produce results. (In TensorFlow 2, operations run eagerly by default, and a graph is built when a function is wrapped in `tf.function`.)
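To make the two ideas concrete, here is a minimal sketch, assuming TensorFlow 2.x. The function name `affine` and the sample values are mine, chosen only for illustration. It creates tensors of rank 0, 1, and 2, then traces a small function into a graph and lists the operations the graph contains.

```python
import tensorflow as tf

# Tensors of increasing rank: scalar (rank 0), vector (rank 1), matrix (rank 2).
scalar = tf.constant(3.0)
vector = tf.constant([1.0, 2.0, 3.0])
matrix = tf.constant([[1.0, 2.0], [3.0, 4.0]])

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, "shape:", t.shape, "dtype:", t.dtype.name)

# tf.function traces the Python function into a computation graph
# the first time it is called with a given input signature.
@tf.function
def affine(x, w, b):  # illustrative name, not part of TensorFlow
    return tf.matmul(x, w) + b

x = tf.constant([[1.0, 2.0]])     # shape (1, 2)
w = tf.constant([[0.5], [0.25]])  # shape (2, 1)
b = tf.constant([1.0])            # broadcast across the batch dimension

result = affine(x, w, b)          # 1*0.5 + 2*0.25 + 1 = 2.0
print(result.numpy())

# Inspect the traced graph: each entry is one operation node.
graph = affine.get_concrete_function(x, w, b).graph
print([op.type for op in graph.get_operations()])
```

The list printed at the end is the graph itself: placeholder nodes for the inputs, followed by the multiply and add operations in the order they must run.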

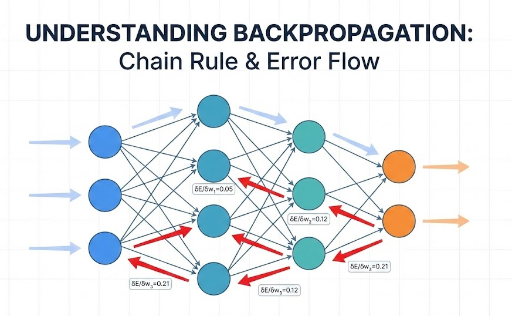

Building a simple linear regression model in TensorFlow helped me understand these principles better. To learn a simple relationship like $y = Wx + b$, I repeatedly adjusted the weight $W$ and the bias $b$ to fit the data. This opened my eyes to how deep learning models learn. The formula looks simple, but even this straightforward model arrives at good values only through many repeated calculations over the data.

    import tensorflow as tf
    import numpy as np

    # Toy data that follows y = 2x exactly
    x_data = np.array([1, 2, 3, 4, 5], dtype=np.float32)
    y_data = np.array([2, 4, 6, 8, 10], dtype=np.float32)

    # Trainable parameters, both starting at zero
    W = tf.Variable(0.0)
    b = tf.Variable(0.0)

    optimizer = tf.optimizers.SGD(learning_rate=0.01)

    for epoch in range(100):
        # Record the forward pass so gradients can be derived from it
        with tf.GradientTape() as tape:
            y_pred = W * x_data + b
            loss = tf.reduce_mean(tf.square(y_pred - y_data))

        # Gradients of the loss with respect to W and b, then one update step
        gradients = tape.gradient(loss, [W, b])
        optimizer.apply_gradients(zip(gradients, [W, b]))

This simple example helped me understand how learning happens. The model predicts values from the data and measures how far the predictions are from the actual values (this is called the loss). To minimize the loss, the weight and bias are adjusted a little at a time toward their optimal values. TensorFlow handles this process efficiently: it records the operations applied to the data and the model parameters and computes the gradients automatically.
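To see what the automatic gradient computation is doing, the same training loop can be sketched in plain NumPy with the gradients derived by hand. For the mean squared error $L = \frac{1}{n}\sum_i (Wx_i + b - y_i)^2$, the derivatives are $\partial L/\partial W = \frac{2}{n}\sum_i (Wx_i + b - y_i)\,x_i$ and $\partial L/\partial b = \frac{2}{n}\sum_i (Wx_i + b - y_i)$. This is my own illustration of the idea, not TensorFlow's internal implementation.

```python
import numpy as np

x = np.array([1, 2, 3, 4, 5], dtype=np.float32)
y = np.array([2, 4, 6, 8, 10], dtype=np.float32)

W, b = 0.0, 0.0
learning_rate = 0.01
losses = []

for epoch in range(100):
    error = W * x + b - y                     # prediction minus target
    losses.append(float(np.mean(error ** 2))) # mean squared error

    # Hand-derived gradients of the MSE with respect to W and b
    grad_W = 2.0 * float(np.mean(error * x))
    grad_b = 2.0 * float(np.mean(error))

    # Plain gradient descent step, the same rule SGD applies
    W -= learning_rate * grad_W
    b -= learning_rate * grad_b

print(f"W={W:.3f}, b={b:.3f}, first loss={losses[0]:.3f}, last loss={losses[-1]:.5f}")
```

The loss starts at 44 (the mean of the squared targets, since both parameters begin at zero) and shrinks from there. With this learning rate the parameters approach $W = 2$, $b = 0$ gradually, so 100 epochs gets close to those values rather than landing on them exactly.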

The Importance of Data

While creating models with TensorFlow, I realized that the key factor in a model's performance is the quality of the data. No matter how good the model is, it cannot perform well on poor-quality data. Analyzing the data and, where necessary, cleaning it is crucial. Beyond that, it is important to understand the distribution and characteristics of the data and to preprocess it so the model can learn effectively.
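As a small sketch of what inspecting, cleaning, and preprocessing can look like, the example below uses NumPy on a made-up feature table. The columns (age and income) and every value in it are hypothetical, and median filling is just one possible cleaning choice among many.

```python
import numpy as np

# Hypothetical raw features: column 0 is age in years, column 1 is income.
raw = np.array([
    [25.0, 32000.0],
    [38.0, 54000.0],
    [47.0, np.nan],    # missing income
    [52.0, 91000.0],
], dtype=np.float64)

# 1. Inspect: count the missing values in each column.
missing_per_column = np.isnan(raw).sum(axis=0)
print("missing per column:", missing_per_column)

# 2. Clean: fill each gap with the median of the observed values in its column.
medians = np.nanmedian(raw, axis=0)
cleaned = np.where(np.isnan(raw), medians, raw)

# 3. Preprocess: standardize each column to zero mean and unit variance
#    so that features on very different scales contribute comparably.
mean = cleaned.mean(axis=0)
std = cleaned.std(axis=0)
standardized = (cleaned - mean) / std
print(standardized)
```

In a real project the mean and standard deviation would be computed on the training split only and then reused for validation and test data, so that no information leaks from the evaluation data into training.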

Another realization was that selecting a model that fits the characteristics of the data is very important. A common mistake developers make, and one I made at first, is choosing a model arbitrarily without considering the data. For example, a classification model is appropriate when the goal is to assign data to categories, while a regression model is appropriate when the goal is to predict a continuous value. If the data has no labels and needs to be grouped, a clustering model that groups data by similarity is suitable.

Criteria for Model Selection

TensorFlow is not just a library for implementing deep learning; it serves as a framework that supports both model selection and the learning process. I learned that the right model can only be chosen after analyzing the data and understanding the characteristics of the problem, and that matching the model to the type of problem is a very important skill for developers.

Linear regression is suitable when the value to be predicted is continuous, while a classification model is useful when inputs must be divided into classes, such as cats and dogs. For analyzing unlabeled data, a clustering model is appropriate. Knowing these model types and when each one applies is a solid foundation for studying deep learning in more depth later on.
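In code, the difference between the first two cases shows up mainly in the output layer and the loss function. The sketch below assumes TensorFlow 2.x with its bundled Keras API. The feature count and class count are hypothetical placeholders, and clustering is left out because it is typically handled by a different kind of algorithm.

```python
import tensorflow as tf

NUM_FEATURES = 4  # hypothetical input width
NUM_CLASSES = 2   # for example, cat vs. dog

# Regression: a single linear output unit, trained with mean squared error.
regressor = tf.keras.Sequential([
    tf.keras.Input(shape=(NUM_FEATURES,)),
    tf.keras.layers.Dense(1),
])
regressor.compile(optimizer="sgd", loss="mse")

# Classification: one output per class with softmax,
# trained with cross-entropy on integer class labels.
classifier = tf.keras.Sequential([
    tf.keras.Input(shape=(NUM_FEATURES,)),
    tf.keras.layers.Dense(NUM_CLASSES, activation="softmax"),
])
classifier.compile(
    optimizer="sgd",
    loss="sparse_categorical_crossentropy",
    metrics=["accuracy"],
)

batch = tf.zeros((3, NUM_FEATURES))  # three dummy samples
regression_out = regressor(batch)    # one continuous value per sample
class_probs = classifier(batch)      # one probability per class per sample
print(regression_out.shape, class_probs.shape)
```

The regressor returns one unbounded number per sample, while the classifier returns a row of probabilities that sums to 1, which is why the two need different losses.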

The Importance of Evaluation and Tuning

I also learned that merely selecting and training the model is not enough. Evaluation and tuning are necessary to enhance the model's performance. It’s important to learn various metrics and methods to evaluate how accurate the model is. For classification models, metrics like accuracy, precision, and recall are used, while regression models use mean squared error or mean absolute error. Additionally, tuning is required to optimize model performance by adjusting hyperparameters. For instance, one can observe performance changes by adjusting parameters like learning rate or batch size.
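The metrics named above are short formulas, so a minimal NumPy sketch may help. The labels and predictions below are invented purely for illustration.

```python
import numpy as np

# --- Classification metrics (binary labels, 1 = positive class) ---
y_true = np.array([1, 0, 1, 1, 0, 0, 1, 0])
y_pred = np.array([1, 0, 0, 1, 0, 1, 1, 1])

tp = int(np.sum((y_pred == 1) & (y_true == 1)))  # true positives
fp = int(np.sum((y_pred == 1) & (y_true == 0)))  # false positives
fn = int(np.sum((y_pred == 0) & (y_true == 1)))  # false negatives

accuracy = float(np.mean(y_pred == y_true))  # share of correct predictions
precision = tp / (tp + fp)                   # of predicted positives, how many are real
recall = tp / (tp + fn)                      # of real positives, how many were found

print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")

# --- Regression metrics ---
targets = np.array([3.0, 5.0, 2.0, 7.0])
predictions = np.array([2.5, 5.0, 3.0, 8.0])

mse = float(np.mean((predictions - targets) ** 2))   # mean squared error
mae = float(np.mean(np.abs(predictions - targets)))  # mean absolute error

print(f"mse={mse:.4f} mae={mae:.4f}")
```

On this data the three classification metrics come out differently (0.625, 0.6, and 0.75), which shows why accuracy alone can hide how a model treats the positive class.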

Through this process, I discovered that TensorFlow is a comprehensive tool that supports not only model design and training but also evaluation and optimization. Ultimately, to create a successful deep learning model, every stage, from data quality and model selection to evaluation and tuning, must work together.
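The effect of tuning a hyperparameter can be observed even on the toy linear regression problem. The sketch below is written in plain NumPy, and the helper `final_loss` is my own illustrative function. It trains the same model with several learning rates and compares the resulting error.

```python
import numpy as np

x = np.array([1, 2, 3, 4, 5], dtype=np.float64)
y = 2.0 * x  # same toy relationship, y = 2x

def final_loss(learning_rate, epochs=100):
    """Fit y = W*x + b by gradient descent and return the final MSE."""
    W, b = 0.0, 0.0
    for _ in range(epochs):
        error = W * x + b - y
        grad_W = 2.0 * np.mean(error * x)
        grad_b = 2.0 * np.mean(error)
        W -= learning_rate * grad_W
        b -= learning_rate * grad_b
    return float(np.mean((W * x + b - y) ** 2))

# Try a small grid of learning rates and record the final loss for each.
results = {lr: final_loss(lr) for lr in [0.001, 0.01, 0.05, 0.1]}
for lr, loss in results.items():
    print(f"learning_rate={lr:<6} final MSE={loss:.4g}")
```

On this data, larger learning rates converge faster up to a point, but the largest value in the grid overshoots on every step and the loss grows instead of shrinking. That trade-off is what a tuning run is meant to find.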

Conclusion: The Harmony of Data and Models is the Key to Deep Learning

One of the greatest realizations I had while studying TensorFlow is that good models start from high-quality data. Creating models is important, but deciding how to handle the data may be even more crucial. Understanding the characteristics of the data, choosing a suitable model for the problem, and continuously improving the model through training and optimization are the essence of deep learning.

Now that I've taken my first steps into TensorFlow, I must continue to develop my skills in data analysis and model design. I aspire to become a developer who can gain insights from data and implement effective models based on them!
